Thanks to John Schwenkler for the invitation to guest-blog this week about my new book Surfing Uncertainty: Prediction, Action, and the Embodied Mind (Oxford University Press NY, 2016).

***

From Rag-bags to (Unified) Riches?

Is the human brain just a rag-bag of different tricks and stratagems, slowly accumulated over evolutionary time? For many years, I thought the answer to this question was most probably ‘yes’. Sure, brains were fantastic organs for adaptive success. But the idea that there might be just a few core principles whose operation lay at the heart of much neural processing was not one that had made it on to my personal hit-list. Seminal work on Artificial Neural Networks had, of course, opened many theoretical and practical doors. But the cumulative upshot was not (and is not) a unifying vision of the brain so much as a plethora of cool engineering solutions to specific problems and puzzles.

Meantime, the sciences of the mind (and especially robotics) have been looking increasingly outwards, making huge strides in understanding how bodily form, action, and the canny use of environmental structures co-operate with neural processes. That was a step in a very promising direction. But without a satisfying picture of the role of the biological brain, ‘embodied cognition’ was (I fear) never going to look very much like a systematic, principled science.

Ever the optimist, I think we may now be glimpsing the shape of just such a science. It will be a science that takes many cues from an emerging vision of the brain as a multi-layer probabilistic prediction machine. In these posts, I want to run over some of the core territory that makes this vision such a good fit – or so I claim – with the agenda of embodied cognitive science, take a look at some far horizons concerning conscious experience, and review some potential trouble-spots.

First though, a mega-rapid recap of the basic story, and then a worry about how best to describe it.

The Strange Architecture of Predictive Processing

In a 2012 paper, the AI pioneer Patrick Winston wrote about the puzzling architecture of the brain – an architecture in which “Everything is all mixed up, with information flowing bottom to top and top to bottom and sideways too.” He added that “It is a strange architecture about which we are nearly clueless.”

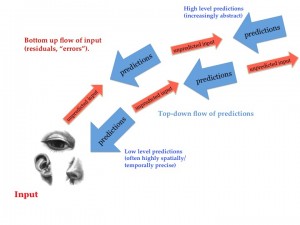

It is a strange architecture indeed. But that state of clueless-ness is mostly past. A wide variety of work – now spanning neuroscience, psychology, robotics and artificial intelligence – is converging on the idea that one key role of that downward-flowing influence is to enable higher levels to attempt (level by level, and as part of a multi-area cascade) to predict lower-level activity and response. That predictive cascade leads all the way to the sensory peripheries, so that the guiding task becomes the ongoing prediction of our own evolving flows of sensory stimulation. The idea that the brain is (at least in part, and at least sometimes) acting as some form of prediction engine has a long history, stretching from early work on perception all the way to recent work in ‘deep learning’.

In Surfing Uncertainty I focus on one promising subset of such work: the emerging family of approaches that I call “predictive processing”. ‘Predictive processing’ plausibly represents the last and most radical step in the long retreat from a passive, feed-forward, input-dominated view of the flow of neural processing. According to this emerging class of models, biological brains are constantly active, trying to predict the streams of sensory stimulation before they arrive. Systems like that are most strongly impacted by sensed deviations from their predicted states. It is these deviations from predicted states (prediction errors) that now bear much of the information-processing burden, informing us of what is salient and newsworthy within the dense sensory barrage. When you see that steaming coffee-cup on the desk in front of you, your perceptual experience reflects the multi-level neural guess that best reduces visual prediction errors. To visually perceive the scene, if this story is on track, your brain attempts to predict the scene, allowing the ensuing error (mismatch) signals to refine its guessing until a kind of equilibrium is achieved.
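A minimal sketch may help fix ideas. It is not drawn from the book: it assumes a single level, one hidden cause `mu`, and a linear generative model in which the predicted sensory value is `w * mu`. Only the mismatch between sensed and predicted values drives revision of the guess, and the guess stops changing once that error is (near) zero. The function name `infer_cause` and the values of `w`, `lr` and `steps` are illustrative choices, not anything specified in the text.

```python
def infer_cause(sensed, w=2.0, lr=0.1, steps=200):
    """Refine a guess about a hidden cause until prediction error settles.

    Hypothetical toy generative model: sensed ~= w * mu.
    """
    mu = 0.0  # initial guess about the hidden cause
    for _ in range(steps):
        predicted = w * mu          # top-down prediction of the sensory signal
        error = sensed - predicted  # residual (prediction error) signal
        mu += lr * w * error        # gradient step on 0.5 * error**2
    return mu, sensed - w * mu


mu, residual = infer_cause(sensed=3.0)
print(mu, residual)
```

Each update is plain gradient descent on the squared prediction error. Real predictive-processing models stack many such levels, use nonlinear mappings, and weight errors by their estimated reliability.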

Learning in Bootstrap Heaven, and Other Benefits

To appreciate the benefits, first consider learning. Suppose you want to predict the next word in a sentence. You would be helped by a knowledge of grammar. But one way to learn a surprising amount of grammar, as work on large-corpus machine learning clearly demonstrates, is to try repeatedly to predict the next word in a sentence, adjusting your future responses in the light of past patterns. You can thus use the prediction task to bootstrap your way to the world-knowledge that you can later use to perform apt prediction.
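The predict-then-adjust loop can be sketched with a toy bigram counter. This is a hypothetical illustration, far simpler than large-corpus language models: at each position it first guesses the next word from the counts gathered so far, scores the guess, and only then updates the counts with the word that actually arrived. The names `train_by_prediction` and `predict` and the tiny corpus are assumptions made for the example.

```python
from collections import Counter, defaultdict


def predict(counts, prev):
    """Return the most frequent follower of `prev`, or None if `prev` is unseen."""
    followers = counts.get(prev)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def train_by_prediction(tokens):
    """Learn next-word statistics by repeatedly predicting, then updating."""
    counts = defaultdict(Counter)  # counts[prev][nxt] = times nxt followed prev
    hits = 0
    for prev, nxt in zip(tokens, tokens[1:]):
        guess = predict(counts, prev)  # commit to a prediction first
        hits += int(guess == nxt)      # score it against what actually came
        counts[prev][nxt] += 1         # then learn from the outcome
    return counts, hits


tokens = "the cat sat on the mat . the cat ran .".split()
counts, hits = train_by_prediction(tokens)
print(predict(counts, "the"), hits)
```

In this corpus "the" is followed by "cat" twice and by "mat" once, so the learned model predicts "cat" after "the".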

Importantly, multi-level prediction machinery then delivers a multi-scale grip on the worldly sources of structure in the sensory signal. In such architectures, higher levels learn to specialize in predicting events and states of affairs that are – in an intuitive sense – built up from the kinds of features and properties (such as lines, shapes, and edges) targeted by lower levels. But all that lower-level response is now modulated, moment-by-moment, by top-down predictions. This helps make sense of recent work showing that top-down effects (expectation and context) impact processing even in early visual areas such as V1. Recent work in cognitive neuroscience has begun to suggest some of the detailed ways in which biological brains might implement such multi-level prediction machines.
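How top-down predictions can modulate lower-level response is easiest to see in a two-level toy. The sketch below is a hypothetical linear example with unit weights and unit precisions (not a model of V1): `r1` predicts the input, `r2` predicts `r1`, and `r2` is itself pulled toward a fixed top-level expectation `prior`. Both units descend the summed squared prediction error.

```python
def settle(x, prior, lr=0.1, steps=500):
    """Two-level linear predictive-coding toy (unit weights, unit precisions).

    Minimises 0.5*(x - r1)**2 + 0.5*(r1 - r2)**2 + 0.5*(r2 - prior)**2
    by simultaneous gradient steps on r1 and r2.
    """
    r1, r2 = 0.0, 0.0
    for _ in range(steps):
        e0 = x - r1      # sensory-level error: input vs. r1's prediction
        e1 = r1 - r2     # level-1 error: r1 vs. r2's top-down prediction
        e2 = r2 - prior  # level-2 error: r2 vs. the standing expectation
        r1 += lr * (e0 - e1)  # pushed by the input, pulled toward r2
        r2 += lr * (e1 - e2)  # pushed by r1, pulled toward the prior
    return r1, r2


r1, r2 = settle(x=3.0, prior=0.0)
print(r1, r2)
```

With an input of 3.0 and a top-level expectation of 0.0, the first-level estimate settles at 2.0 rather than 3.0 (each of the three errors ends up at one third of the gap): the lower level's response is shaped by what the level above predicts.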

Perception of this stripe involves a kind of understanding too. To perceive the hockey game, using multi-level prediction machinery, is already to be able to predict distinctive patterns as the play unfolds. The more you know about the game and the teams, the better those predictions will be. Perception here phases seamlessly into understanding. What we quite literally see, as we watch a game, is constantly informed and structured by what we know and what we are thus already busy (consciously and non-consciously) expecting.

This, as has recently been pointed out in a New York Times piece by Lisa Feldman Barrett, has real social and political implications. You might really seem to start to see your beloved but recently deceased pet enter the room, when the curtain moves in just the right way. The police officer might likewise really seem to start to see the outline of a gun in the hands of the unarmed, cellphone-wielding suspect. In such cases, the full swathe of good sensory evidence should soon turn the tables – but that might be too late for the unwitting suspect.

On the brighter side, a system that has learnt to predict and expect its own evolving flows of sensory activity in this way is one that is already positioned to imagine its world. For the self-same prediction machinery can also be run ‘offline’, generating the kinds of neuronal activity that would be expected (predicted) in some imaginary situation. The same apparatus, more deliberately seeded and run, may enable us to try out problem solutions in our mind’s eye, thus suggesting a bridge between offline prediction and more advanced (‘simulation-based’) forms of reasoning.

Thinking about perception as tied intimately to multi-level prediction is also delivering new ways to think about the emergence of delusions, hallucinations, and psychoses, as well as the effects of various drugs, and the distinctive profiles of non-neurotypical (for example, autistic) agents. In such cases, the delicate balances between top-down prediction and the use of incoming sensory evidence may be disturbed. As a result, our grip on the world loosens or alters in remarkable ways.
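One common way predictive-processing models formalise that "delicate balance" is precision weighting: each prediction error is scaled by an estimate of its reliability (inverse variance). The sketch below assumes that reading and reduces it to a single estimate pulled between a sensed value and a top-down expectation. The function `percept` and its parameters are hypothetical, and no mapping from any clinical condition or drug to particular precision settings is implied.

```python
def percept(sensed, expected, pi_sense, pi_prior, lr=0.05, steps=2000):
    """Precision-weighted settling of a single estimate (toy sketch).

    Descends F = 0.5*pi_sense*(sensed - mu)**2 + 0.5*pi_prior*(mu - expected)**2.
    Converges only if lr * (pi_sense + pi_prior) < 2.
    """
    mu = expected  # start from the top-down expectation
    for _ in range(steps):
        sensory_error = sensed - mu
        prior_error = mu - expected
        mu += lr * (pi_sense * sensory_error - pi_prior * prior_error)
    return mu


high_sense = percept(1.0, 0.0, pi_sense=9.0, pi_prior=1.0)
high_prior = percept(1.0, 0.0, pi_sense=1.0, pi_prior=9.0)
print(high_sense, high_prior)
```

With the same input and expectation, shifting precision from the senses to the prior moves the settled estimate from 0.9 to 0.1. Whether any real condition corresponds to such a shift is an empirical question the sketch does not address.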

But…

But there is a problem, or at least, a potential hiccup. These ways of pitching the story, though perfectly correct as far as they go, can sometimes give a rather misleading impression. They can give the impression that the brain is in the business of searching for the hypothesis that best explains the sensory data. And that in turn (unless it is heard very carefully indeed) can make it sound as if brains are organs whose guiding rationale is representational – as if they are restless organs forever seeking to find the picture of the world that best accommodates the sensory evidence, given what they already know about the world.

The trouble with this way of pitching the story is pretty evident – at least from the standpoint of a more embodied approach to the mind. It makes action play second fiddle to something like representational fidelity. But a moment’s reflection ought to convince us that it is action – not perception – that real-world systems really need to get right. Perceiving a structured scene is adaptively pointless unless it enables you to do something better, or to avoid doing something bad. And that, in an often hostile, time-pressured world, means that fidelity needs to be traded against speed. Indeed, even if you remove the time-pressure, it makes adaptive sense to devote as little energy as you can get away with to encoding a picture of the world. Because all that really matters (for any adaptive purpose that I can think of) is what you do, not what you see or perceive.

This poses an interesting puzzle. For predictive processing stories do seem to make perception (via the reduction of sensory prediction error) paramount. Even the accompanying accounts of action (next post) make action depend on the reduction of a select subset of sensory prediction error.

Fortunately, this tension is merely apparent. Properly understood, predictive processing is all about efficiently translating energetic stimulation into action. In the next post I’ll try to sketch that part of the story, and thus motivate a slightly different way of talking about these strange architectures.

Hello, Andy!

What a fascinating project! Quick question, which may or may not tie into your tantalizing preview of the next post. It seems that minimizing prediction error can involve modifications anywhere in the stream – from the lowest input layers to the most abstract, prediction-generating expectations. Do you have any thoughts on this? It seems that if all that matters is opportunistically minimizing prediction errors, there may be cases where directly modifying sensory areas in ways that make them generate information more in line with abstract expectations might be more efficient than modifying the abstract assumptions that generate those predictions. In fact, this might correspond to the intuitive distinction between directions of fit, often used, e.g., to identify differences in speech acts. Sometimes we want to track the world, in which case prediction errors should issue in modifications to assumptions about the world. But sometimes we want the world (especially our own bodies) to track our abstract assumptions (in such cases a better word is “intentions”), in which case prediction errors should issue in modifications of relevant parts of the world (usually ones over which we have a kind of direct control, like our bodies). I take it that there may be cases where both kinds of prediction error correction are taking place simultaneously, and we get a kind of equilibration. Perhaps something like this goes on in skill learning. E.g., when you’re learning to change from an F Maj 7 to a Bb Maj 7 on the guitar, and you screw up, the prediction error does not lead you to revise your abstract assumption about what that change sounds or looks like, but rather to revise lower-level input-generating factors under your motor control, like finger position.
So we have an attempt to reduce prediction error, but not via changes to abstract expectations about sensory input, but by directly acting on the actual sensory input itself, such that it better conforms to expectations that are held fixed.

Hey Tad – great to hear from you. Your point about two ways to cancel prediction errors is spot on, and is the heart of the PP story about action, which I’ll sketch mega-briefly tomorrow. The idea is that in action you cancel errors by bringing into being the flow of proprioceptive information your brain predicts. So yes – two ways to cancel error: alter your thinking (broadly speaking) or alter the world. But much more generally, I think we can think about intentions and desires in roughly this way too, as involving predictions that entrain actions that aim to bring them about – as self-fulfilling prophecies – when all goes well. I also think (though not sure my little run of blog posts will get us all there) that we do lots of work by structuring the world in ways that make it easier for our predictions to come out right, or that allow simpler predictions to bring about more complex results (e.g. when we paint white lines on a road and then correct errors with regard to the lines to drive around the cliff-top road). Part of the attraction of all this stuff for me is that I see it as a heavenly fit with embodied cognition. Not everyone agrees. ☺
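The two routes to cancelling error (alter your thinking, or alter the world) can be caricatured in a few lines. This is a hypothetical toy, not the active-inference formalism, in which action is driven by proprioceptive prediction error: here the sensed value is simply the world state, and `cancel_error` either moves the belief toward what is sensed or holds the belief fixed and moves the world toward it.

```python
def cancel_error(belief, world, mode, lr=0.2, steps=100):
    """Shrink the gap between sensed and predicted by perceiving or by acting."""
    if mode not in ("perceive", "act"):
        raise ValueError(f"unknown mode: {mode!r}")
    for _ in range(steps):
        error = world - belief      # sensed minus predicted
        if mode == "perceive":
            belief += lr * error    # alter your thinking: revise the prediction
        else:
            world -= lr * error     # alter the world: make the prediction come true
    return belief, world


b, w = cancel_error(belief=0.0, world=10.0, mode="perceive")
b2, w2 = cancel_error(belief=0.0, world=10.0, mode="act")
print(b, w, b2, w2)
```

Both calls end with the error close to zero, but by opposite routes: in the first the belief has moved to 10, in the second the world has moved to 0.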

Perhaps this is coming up, but I’d be very interested in seeing discussion of the differences between your and Jakob Hohwy’s takes on the prediction error minimization hypothesis. The model appears to be compatible with both internalist- and externalist/embodied-leaning interpretations. What evidence (especially empirical) would weigh on one side or the other? It seems a lot will turn on the nature of the information available at each level or step (including what modifications it can or cannot undergo, all of this temporally indexed), about which we don’t know much (yet).

Great question/issue. I’ve learnt a huge amount from Jakob’s seminal and ground-breaking treatments of this stuff. But in the end, he’s a staunch internalist and I’m a fuzzy bleeding-out-into-the world kind of guy. I have a long treatment of the debate between us coming out in Nous and can happily pass you a copy. Tomorrow’s post does sketch the core of it. Your question about empirical evidence is especially important, and somewhat vexing. Vexing because I can’t quite tell whether there will be any! The issue looks to be cosmetic from the point of view of the math – though there may be some traction to be gained by thinking about multiple Markov blankets and the ongoing construction and reconstruction of the most functionally salient boundaries of sensing and action.

Thanks, Andy — and yes I would love to see the forthcoming paper.

If anyone wants to revisit Jakob Hohwy’s posts from his visit as a featured scholar in 2014, here they are: https://philosophyofbrains.com/category/featured-scholar-jakob-hohwy

A central concern of cognitive philosophy is how to understand the relationship between perceiving, thinking, intentional humans (and animals, and potentially robots) and the underlying physical mechanisms of flesh and blood and brain (and potentially silicon). Most people recognise that the cartoon version of a Cartesian theatre — where the scene of a house in front of a person is mediated via light into the retina and through nerve circuits so as to project a representation of the house in an internal cinema where Homunculus Horace is watching — does not do the job. Just relocating the mystery of cognition into an internal part of the mechanism, Horace, does not explain it.

There have been many attempts to disguise the crassness of this cartoon version, including trading on ambiguities of the term ‘representation’. These things go in fashions, and it looks like the latest version of this fashion is Horace the predictor. Two flavours: one in which Horace is a ‘higher-level’ of the brain attempting to predict lower-level activity and response; another flavour in which Horace is the brain as a whole “trying to predict the streams of sensory stimulations before they arrive”. Now I can understand the claim that Horace is predicting something if Horace is a person, or dog, or even a reasonably competent robot (the Google self-driving car that applies the brakes when a person steps into the road). It means considering them as an intentional agent, a person or near-enough with interests in the world around it — and not treating them as just a cold impersonal mechanism. So in this everyday sense of prediction, such brain explanations are just a new version of Horace the Homunculus.

Conceivably there is a different sense of prediction that might be intended, one that allows us to make sense of Andy’s claims without accusing him of the usual homuncular fallacy. The ambiguity of ‘representation’ (interpreted relationally or non-relationally) carries over to ‘prediction’: a fresh footprint in the mud is capable of being taken as evidence of someone recently passing by, and also capable of being taken as predictive of the foot’s owner being currently off in the direction indicated. So one might claim that the footprint is predictive, regardless of whether Sherlock Holmes, or Horace, is observing it and actually formulating these predictions. But from what I can see, Andy is indeed appealing to Horace very explicitly, and he would find it difficult to reformulate his position so as to avoid this.

If Andy is making the claim “X is predicting” (where X could be a higher-level of the brain, or the brain-as-a-whole — or an unknown black box found on Mars) and he means it in some sense that does not require “X is a homunculus like Horace”, what is an operational test for deciding the truth or falsehood of such a claim? What weight is being placed on this word ‘prediction’?

Hi Inman,

Thanks for dropping by! I totally agree that we need to make sure any story about ‘predictive brains’ is fully and workably mechanistic. By that I mean just that the story can be implemented without mysterious black boxes that do anything like understanding. But as far as I can tell, nothing in the PP model contravenes that important requirement. For example, the idea of one ‘level’ predicting activity at the level below and then sending forward residual error requires only an automatic comparison and response operation, defined ultimately over physical states and events. Indeed, it had better be (in some sense) ‘automatic all the way down’ – after all, we are ultimately just physical stuff doing what it does.

There’s another layer of worry expressed (or at least hinted at) in your note though. That is the worry that any representational interpretation or account of how these systems work threatens to commit some kind of homuncular fallacy. There, I don’t agree. As always, I think that rich dynamical stories (of which PP is a prime example) sometimes merit a representational gloss, and that the PP stories make most sense when we depict the system as instantiating a generative model that issues predictions that are compared to actual states to deliver residual error. Subtract that picture and all we have is an image of richly recurrent (and mysteriously asymmetric) dynamics.

But prediction, as it occurs in the PP story, requires absolutely no content-understanding on the part of the parts of the brain involved. So maybe it is a weak enough sense to avoid your worry?

Hi Andy,

Thanks for clarifying that your usage of ‘prediction’ “requires absolutely no content-understanding on the part of the parts of the brain involved.” My problem is that in all your writings I have seen so far, you do not deliver a definition that delivers that — you always in practice implicitly appeal to some Horace Homunculus that is content-understanding.

Hence my request, as yet unanswered, for some operational test for whether a mechanism X is or is not ‘predicting’ in your sense (predicting_AC). Given a mechanism, or wiring diagram for one, that has inputs and outputs and some internal state — what is the set of tests anyone can apply to decide whether that mechanism (or some part thereof) is predicting_AC something-or-other? To focus the mind, the mechanism might be (a) a coke machine that delivers a can of coke for every 2 coins input or (b) a room-thermostat + central-heating controller or (c) a C. elegans nervous system or (d) a sunflower (that overnight turns to where the sun is due to rise) or (e) anything else — but all labels that might suggest functions and hence content-understanding have been removed.

But I haven’t yet seen this sense defined operationally by you without appeal to Horace. You suggest a definition ‘defined ultimately over physical states and events’ — but then predicate this on being able to provide a label ‘error’. But a non-content-ascribing diagram of a mechanism does not come with parts labelled ‘error’ — this is just one blatant example of where Horace enters your picture. Can you provide an operational definition or test here that requires no such content-understanding, no such labels — or point to where you have previously provided it?

Best wishes

Inman

Hi Inman,

I’m still somewhat puzzled about exactly what the issue is here. You say:

“But a non-content-ascribing diagram of a mechanism does not come with parts labelled ‘error’ — this is just one blatant example of where Horace [the homunculus] enters your picture.”

But to label a flow of information as a prediction error signal is not to posit a homunculus. It is to describe a certain kind of functional role. When the systems engineers at a nuclear power plant label parts of a control circuit as a forward model of the plant dynamics, and describe some signals as conveying information, within that system, about departures from the predicted state, they are not positing potent, mysterious homunculi. They are describing the functional role of those signals (relative to keeping the plant within determined bounds). This is all that PP requires. The reason this ought not to raise the spectre of Horace the homunculus is that all the operations involved can be implemented by the right arrangements of matter, obeying nothing more than the laws of physics. This, I submit, will also prove to be the case with the kinds of circuitry posited by PP.
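The engineering example can be made concrete with a toy loop. Everything in it is hypothetical: a one-dimensional "plant" that drifts by an unmodelled `leak` each step, a proportional controller, and a forward model that predicts the next state and corrects a bias term from the residual. No real reactor control scheme is implied. The sketch makes one narrow point: the "error" is produced by an ordinary subtraction and consumed by an ordinary update, and on the functional-role reading defended here it is its place in the loop that earns it the label.

```python
def run_plant(steps=200, setpoint=20.0, leak=-1.0, gain=0.5, lr=0.2):
    """Toy control loop with a forward model and a prediction-error signal.

    The plant drifts by `leak` each step; the forward model does not know
    this and instead learns a bias term from its own prediction errors.
    """
    x = 10.0    # plant state
    bias = 0.0  # forward model's learned estimate of unmodelled drift
    for _ in range(steps):
        u = gain * (setpoint - x) - bias  # control command, compensating for drift
        predicted = x + u + bias          # forward model: expected next state
        x = x + u + leak                  # actual plant dynamics
        error = x - predicted             # departure from the predicted state
        bias += lr * error                # just a subtraction feeding an update
    return x, bias


x, bias = run_plant()
print(x, bias)
```

After 200 steps the bias estimate has converged to the leak (-1.0) and the state sits at the setpoint (20.0). Whether calling `error` an error signal is more than a theorist's gloss is exactly what Inman presses in the replies that follow; the sketch only shows that no component needs to understand it.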

Notice that nothing here demands content-understanding on the part of the component states or processes. For the plant to respond to an error signal, it need not understand anything about the signal at all. That seems right to me – any decent story needs to have understanding as a product, not an unanalysed cause. Nor is that such an impossible task – ever since Turing, we have known that physical systems can be set up so as automatically to respect semantic constraints even though none of their parts understands anything at all.

I suspect that your real challenge may be rather different. You are asking for an ‘operational definition’ of how to tell whether signal X in some dynamical system is error, prediction, or neither of the above. And you would be right if you suspected that nothing about the (local) signal itself determines this. But that is not because that status is hallucinated by the lazy theorist. Rather, it is because that status is just a shorthand description of a certain systemic functional role. The operational definition you are seeking thus amounts to nothing less than the full PP story – accompanied by some specific implementational proposal that would enable you to start to look for stuff that fills those functional roles in the brain. I think the PP story is just reaching that level of specification. See e.g. the paper by Bastos et al. called “Canonical microcircuits for predictive coding”.

Hi Andy,

So a clear indicator of one difference between us is that you can say “But to label a flow of information as a prediction error signal is not to posit a homunculus. It is to describe a certain kind of functional role.” — whereas for me that is exactly what I mean by positing a homunculus. It means choosing a form of description that *is* content-ascribing. Are we just arguing about semantics? — I think not.

You claimed that “prediction, as it occurs in the PP story, requires absolutely no content-understanding on the part of the parts of the brain involved.” But you have twice failed to respond to my request for an operational definition for prediction_AC, other than to now suggest that it requires buying into “the full PP story — accompanied by some specific implementational proposal … that fills those functional roles in the brain”.

With respect, this is vacuous. Given any arbitrary mechanism of any kind, I am fully confident of both your ability and my ability to invent a story that ascribes functional roles to parts and labels some internal state as ‘error’ or ‘prediction’ or ‘hope’. So this does not distinguish between mechanisms that *do* use prediction_AC and those that do not. Unless you provide the operational definition that I still seek: in your terms, how do we distinguish between a hallucinated prediction_AC and a real one? Prediction is so central to your theory that you really do have an obligation to define what you mean by it!!

Maybe I am missing the point. Maybe you are not presenting the PP story as a claim as to some specific way in which brains work, in contrast to other possible ways they might have worked; but rather you are merely pointing out the obvious truth that we humans are capable of describing brains in functional terms, ascribing roles to different parts — we are good at story-telling. But if you are being more specific, please provide the operational criteria.

Best wishes

Inman

Hi Andy (again!),

My Hypothesis A from the start has been that you are unwilling to define what you mean by prediction because you rely on presenting a PP story in which a part of the brain (or perhaps the whole brain) plays some functional role in which the reader is invited to view it as a cognitive being, indeed a homunculus. In that context it immediately makes sense for such a homunculus to perceive inputs as error signals, or as predictive of something; those words fit naturally into such a narrative, which *requires* content-ascribing and content-understanding.

You persistently deny that your theory depends on such a metaphor; hence the offer of alternative Hypothesis B in which you need to define prediction_AC without such cognitive assignments to internal states or to parts of the brain. You say “any decent story needs to have understanding as a product, not an unanalysed cause” which requires you to define prediction without relying on some unanalysed sense of understanding. But your repeated failures to flesh out Hypothesis B just reinforce my belief in A.

Inman

Hi Andy,

Thanks for clarifying that your usage of ‘prediction’ “requires absolutely no content-understanding on the part of the parts of the brain involved.” My problem is that in all your writings I have seen so far, you do not deliver a definition that delivers that — you always in practice implicitly appeal to some Horace Homunculus that is content-understanding.

Hence my request, as yet unanswered, for some operational definition of whether a mechanism X is or is not ‘predicting’ in your sense (predicting_AC). Given a mechanism, or wiring diagram for one, that has inputs and outputs and some internal state — what is the set of tests anyone can apply to decide whether that mechanism (or some part thereof) is predicting_AC something-or-other? To focus the mind, the mechanism might be (a) a coke machine that delivers a can of coke for every 2 coins input or (b) a room-thermostat + central-heating controller or (c) a C. elegans nervous system or (d) a sunflower (that overnight turns to where the sun is due to rise) or (e) anything else — but all labels that might suggest functions and hence content-understanding have been removed.

—- previous posting had this quote removed by formatting —-

But prediction, as it occurs in the PP story, requires absolutely no content-understanding on the part of the parts of the brain involved. So maybe it is a weak enough sense to avoid your worry?

—- end of quote that is now reinstated —-

But I haven’t yet seen this sense defined operationally by you without appeal to Horace. You suggest a definition ‘defined ultimately over physical states and events’ — but then predicate this on being able to provide a label ‘error’. But a non-content-ascribing diagram of a mechanism does not come with parts labelled ‘error’ — this is just one blatant example of where Horace enters your picture. Can you provide an operational definition here that requires no such content-understanding, no such labels — or point to where you have previously provided it?

Dear Inman,

(This reply is actually to your Dec 16, 2.49 post…for some reason, it lacks a Reply button…devious strategy that!)

I think we can now see where we disagree. You think it makes no sense to depict something as a prediction or as an error signal unless there is something (over and above the external theorist) that understands it as a prediction or error signal. I think that there is a pattern of production and use within a system that can justify such talk without requiring that the parts of the system that trade in the signals understand them as predictions, or as errors, or as anything at all.

You say ‘define that pattern of production and use’. I say ‘read the literature on predictive processing’. That is not meant as a blunt parry. Rather, my claim is that the patterns involved are multiple, delicate, interwoven, yet distinctive. Neuroscientists and other cognitive scientists attracted by the stories work extremely hard to devise experiments and model-comparisons to search for those patterns. There are numerous examples in the book, and I mention one of them in my post-2 reply to Kiverstein and Bruineberg. I’ve included you in that reply also, as some themes seem to be converging there.

Hi Andy – seems to me the right response to Inman is to give him a naturalistic theory of content for predictive processing, yielding objective contents for predictions and prediction errors that don’t rely on either scientists’ interpretations or some homunculus that understands. Your functional role response might get us defining conditions for some abstract predictive role, but maybe not contents… and it sounds like Inman is worried about both.

Would you be OK with some kind of teleosemantics for the contents? Or maybe you don’t think the contents are objective? If the PP story isn’t trying to explain anything like folk psychological contents, the “contents” of predictions and errors could be purely informational, or even instrumental. I’m curious what you think about this.

Brilliant series of posts, BTW. I can’t wait to read the book!

Dear Dan,

Great to hear from you. That’s a really helpful thought. I now think there are really two issues in my exchanges with Inman.

One is – can we motivate, as ‘external theorists’, various content-involving descriptions of the flow of information in a PP system? Looks like we all agree that that can be done.

The other is – can we offer some additional argument to show that these really are flows of content-bearing signals, rather than the content-bearingness being a ‘mere’ theorist’s description?

But notice that, on my view, being a real flow of content-bearing signals does not require that the system or any part of it understand them as such (so no homuncular fallacy). But then Inman (I think) thinks they can’t be ‘real’ contents at all. I disagree. I think their functional role can establish that, assuming some kind of niche or adaptive backdrop.

Thus, I think the Rao and Ballard net, up and running in a system that needs to see to survive, would already be trading in real top-down content-laden predictions. Maybe a rich teleo-semantics can show why this is so. But that’s not a problem I’ve been concerned with myself. Other philosophers are way better equipped than me to explore that territory. Hence, I suspect, the tendency of Inman to think I am dodging his question – which I may be, depending on which question it is.

That all seems right to me too. A good project would be to take the Rao & Ballard net (and/or other PP models) along with some real biology, and carefully apply teleosemantic principles. I suspect some additional etiological detail will be required to avoid serious indeterminacies, though. (But would it matter if these contents were seriously indeterminate? Maybe not if we’re not attempting to match folk contents at all.)

The SINBAD story, or some other way of restricting template types (and therefore admissible contents) in a model-building machine, could give us good reason to assign nicely determinate contents to a biological PP system. Something that might also happen in applying teleosemantic principles is that the “rather alien” contents that appear in PP theorists’ descriptions may prove to be not so alien after all. Which would be nice for the traditional philosopher of mind, who hopes to find out what ordinary folk psychological states are, if they exist.

Meanwhile, the RRPP folks can continue to try to work out contentless descriptions of the mechanism, but if there are objective teleosemantic reasons to attribute contents, it seems to me they would be doing a lot of work for nothing.

Dear Dan,

See latest reply in the Inman saga below!

Andy

Hi Andy,

Yes, you correctly characterise the differences between us, and confirm my suspicions. The differences run deep, into what is deemed acceptable in philosophical debate. I was taught that it is unacceptable not to try and define one’s terms; and if they are initially fuzzy, one should work towards clarifying them in ways that do not depend on unverifiable intuitions.

Dan Ryder: “seems to me the right response to Inman is to give him a naturalistic theory of content for predictive processing, yielding objective contents for predictions and prediction errors that don’t rely on either scientists’ interpretations or some homunculus that understands.” Yes, that is what you are unable (not merely unwilling) to provide. If you wanted to try, I would point you to Van Gelder on the Watt Governor for some of the difficulties involved, of which I know you are aware; but you don’t seem even to want to try.

You seem happy to leave the central concept of your theory, prediction, undefined. It is normally a cognitive term, but you insist that in your theory it is used in a sense that doesn’t involve content-ascribing or content-understanding (by the scientist or the homunculus). There is no operational test for it; recognising it depends on intuitions (apparently untainted by the cognitive everyday sense of the word) derived from ‘reading the literature’.

This sounds like religion to me: if you have the right intuitions/Faith, and read the literature/Book, you will recognise the presence of Prediction/the Holy Ghost through ‘multiple, delicate, interwoven, yet distinctive’ patterns — don’t worry about definitions. Such a stance is both indefensible and unassailable. Great example of the Donald Trump strategy used in philosophy — affirm and reaffirm what others consider outrageously unacceptable in such a way as to close down the possibility of further rational debate!

It’s a successful strategy — I shall say no more!

Best wishes

Inman

Hi Inman,

Trump – harrumph, yuck…!

But I’m still puzzled! There is a perfectly clear technical sense of prediction and PE that lies at the root of all this. It seems boring to reiterate it but here goes.

Predictions are issued by a generative model that has learnt a probability distribution. In PP, a prediction of activity at some lower level is a top-down signal that can be compared to the actual activity at the lower level. When there is a mismatch, an error signal (PE) is sent forward that reflects the degree of the mismatch. That signal is weighted according to a further estimate (precision).
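Purely as an illustration of the comparison-and-weighting step just described, here is a minimal Python sketch. It assumes a single linear generative mapping from a higher-level state to predicted lower-level activity, with one precision value per lower-level unit; the function names, weights and numbers are hypothetical and are not taken from Rao and Ballard or any other published PP model.

```python
def predict(weights, cause):
    """Top-down prediction of lower-level activity.

    Assumes a linear generative mapping: each lower unit's predicted
    activity is a weighted sum of the higher-level state `cause`.
    """
    return [sum(w * c for w, c in zip(row, cause)) for row in weights]


def prediction_error(actual, predicted, precision):
    """Precision-weighted prediction error, unit by unit: precision * (actual - predicted)."""
    return [p * (a - q) for a, q, p in zip(actual, predicted, precision)]


weights = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]]  # hypothetical learned weights
cause = [2.0, 4.0]                              # hypothetical higher-level state
predicted = predict(weights, cause)
actual = [2.0, 3.5, 3.0]                        # hypothetical lower-level activity
precision = [1.0, 2.0, 0.5]                     # hypothetical reliability estimates
errors = prediction_error(actual, predicted, precision)
print(predicted)  # [2.0, 3.0, 4.0]
print(errors)     # [0.0, 1.0, -0.5]
```

Every step here is ordinary arithmetic over numbers; whether the results deserve the labels ‘prediction’ and ‘error’ is the question the rest of this exchange turns on.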

Nothing in this scenario requires the system to understand the meaning of any of the signals involved. And engineers can build such systems without the aid of magicians. In the case of engineered learning systems, we can go on to ask what knowledge is being used in the prediction task – by, for example, clamping some higher level units on and running the system backwards to discover what the input would have to be for that higher level to display that activity pattern. For a look at that kind of procedure, see Hinton’s old IJCAI paper “What Kind of a Graphical Model is the Brain?”.

Obviously, you know all this as well as I do. So I conclude that your worry is really not about the kind of functional role associated with prediction and PE. Rather, you are worried that predictions need contents, and you have always been sceptical about the idea that brains (or any other physical systems smaller than persons) trade in contents. That’s a legitimate and interesting worry. But I don’t see the value of pursuing it by asking for a definition of prediction and PE that simultaneously solves the sub-personal content-ascription problem. These are separate issues.

Hi Andy,

Thanks for that — took a struggle to get it out of you, but at last we can see whether you do have a definition of prediction that doesn’t involve content-ascribing or content-understanding (by the scientist or the homunculus). And you don’t.

Your definition is a version of the same content-bearing definition that all the rest of us use. Look at your language: cognitive terms such as model, signal, error, reflects, estimate. These all imply content. When you say ‘X is a signal’, this means we can identify a sender and receiver and a referent; this is content-ascribing. When you say ‘X is a red light’ or ‘X is a voltage of 5v’, this is non-content-ascribing. A red light may act as a stop signal, or indeed as signalling the port side of a ship; same red light but different signals because conveying different content. The identical spike train may occur at different points A and B in the brain (or indeed at the same place at different times), signalling a prediction with one occurrence and signalling an error with the other. The difference arises because the content ascribed is different.

Your definition referred to a model-of-a-brain, as functionally divided and neatly labelled with ascriptions on the theorist’s blackboard — not to the kilo of grey brain tissue in someone’s skull (that does not come with labels). We may slide between one sense and the other when we use the word brain, but care is needed.

The rest of us are happy to think of predictions as content-ascribing, neuroscientists and roboticists use similar ideas all the time in their models. Often no problems at all for those pursuits, and I (as a sometime brain evolver and some sort of engineer) have been happy to talk about (parts of) brains trading in contents — though I am careful to clarify that I am using this as part of a homuncular metaphor that is often useful and sometimes seriously harmful. Ask a neuroscientist or a roboticist whether a spike train or a pattern of activation is a signal conveying content and chances are they will say, yes, in my model it is conveying that specific content — which may eg be a prediction (here) or an error (there). They need not worry about Horace too much.

But a philosopher is doing a different job. Your chosen route to avoid being accused of the crass Cartesian theatre fallacy with Horace Homunculus was to insist that you could provide a definition of prediction that was not content-ascribing, and you haven’t. I don’t myself use the term ‘naturalising’, since I find it used ambiguously. But let’s read Dan Ryder’s call for ‘a naturalistic theory of content for predictive processing’ as follows: a theory offering the non-content-ascribing notion of prediction you seek would, in principle, need to be built on a foundation of physical, non-content-ascribing terms (descriptions of the kilo of brain tissue in terms of mechanics, dynamics, voltages, etc etc…), and not on the cognitive, content-ascribing terms (signals, errors, etc) used in theorists’ models, which beg the philosophical question.

Inman

Dear Inman,

Cool. We are getting closer and closer to at least understanding each other’s points of view!

You are right that ultimately the notion of prediction requires that the predictions have contents. I am not for one moment meaning to defend a view of ‘predictions without content’ – for then all that is left (as I myself argued in one of the posts) are complex dynamics.

What I do think, however, is that we can see (from small-scale simulated systems such as the Rao and Ballard model of early visual processing) that a certain pattern of exchange of top-down and bottom-up signals can implement such a contentful-prediction-involving process (without magic). We can also see what such a process delivers – in that case, the learning of extra-classical receptive fields that track typical statistics in the domain. We can also see how such learning enabled a system to deal with noisy data, fill in gaps, etc.

If we then have cause to think that (1) brains could, and perhaps even do, display that pattern, and (2) that the kind of functionality delivered would be adaptively valuable, it becomes worth asking the question of nature – are brains, at least sometimes, issuing cascades of top-down prediction that play that kind of functional role?

That’s where all the bodies of experiment and theory I was pointing to in our previous exchanges come in. One of the goals of those experiments is to determine what kinds of content are indeed being computed, and where, and when. I don’t think that it will be easy (again, for reasons mentioned in one of the posts) to determine this, as the contents, though real, may sometimes be rather alien to our standard ways of thinking about the world. But unless there turn out to be such contents, what we have is a dynamical story that does not require or merit the ‘prediction engine’ gloss.

Maybe we can agree on that much at least?

Andy

Hi Andy,

Fine. So we now agree that the notion of prediction requires contents.

The suggestion to the contrary only arose in response to my first post:

“A central concern of cognitive philosophy is how to understand the relationship between perceiving, thinking, intentional humans (and animals, and potentially robots) and the underlying physical mechanisms of flesh and blood and brain (and potentially silicon). Most people recognise that the cartoon version of a Cartesian theatre — where the scene of a house in front of a person is mediated via light into the retina and through nerve circuits so as to project a representation of the house in an internal cinema where Homunculus Horace is watching — does not do the job. Just removing the cognition mystery into an internal part of the mechanism, Horace, does not explain. …

… There have been many attempts to disguise the crassness of this cartoon version, including trading on ambiguities of the term ‘representation’. These things go in fashions, and it looks like the latest version of this fashion is Horace the predictor. … …”

— the non-content-ascribing version of prediction was one proposed bypass to this conundrum, a bypass you now acknowledge to be a dead end. Do you have an alternative route around to suggest, or do you accept this cartoon is an accurate picture of your approach?

https://upload.wikimedia.org/wikipedia/commons/thumb/7/74/Cartesian_Theater.svg/2000px-Cartesian_Theater.svg.png

You may prefer a bit of brain tissue to be sitting on the chair inside the head to something so obviously homuncular, but the same philosophical issue arises whatever is sitting inside doing ‘seeing a fried egg/predicting/signalling’.

In your separate response to Dan, you accurately characterise where our differences lie in “…can we offer some additional argument to show that these really are flows of content-bearing signals, rather than the content-bearingness being a ‘mere’ theorists description.

But notice that I think that to be a real flow of content-bearing signals does not require that the system or any part of it understand them as such (so no homuncular fallacy). But then Inman (I think) thinks they can’t be ‘real’ contents at all. I disagree. I think their functional role can establish that, assuming some kind of niche or adaptive backdrop.”

*You think* such content-bearing signals are *real* (without appeal to a homunculus), yet you cannot provide any operational test that does not make such a homuncular appeal. Whereas *I think* that such content-bearing signals only make sense in the context of a homuncular metaphor that will often be *useful* (in a heuristic engineering sense), and will sometimes be *dangerous* or *stupid* (see the fried egg example). Asking whether a metaphor is true/false shows a misunderstanding of how metaphors work; the criterion is useful/misleading.

The cartoon takes the sort of metaphor that proves useful in many circumstances to neuroscientists and roboticists trying to figure out, in functional terms, how parts of brains work — positing a homunculus passing signals often has great explanatory power, and positing a homunculus *predicting* may well be very useful (perhaps cf Rao and Ballard, some cybernetics, etc)! But the cartoon then nicely illustrates where such a metaphor breaks down, since clearly it should not be taken seriously as any answer to the big philosophical questions alluded to above (eg cannot explain how the human perceives signals/predicts/sees fried eggs). For you, however, who insists the presence/absence of a prediction in some brain tissue is a matter of *fact* and not *usefulness*, you cannot smile at the cartoon as I do — it highlights a problem you have that is fatal to many of your readers’ expectations as to what your prediction-based approach might deliver.

“Maybe we can agree on that much at least?” — Either you find a non-homunculus-positing test for when something is content (specifically: predictive content) or you don’t — agreed on that at least!

Are you accepting bets on this (here’s my $10 on don’t) with a mutually acceptable procedure for establishing who wins? Will a test for whether a person/homunculus has a prediction be the same or different from your non-homunculus-positing test as to whether brain tissue has (or instantiates?) a prediction? If the same, will it satisfactorily assess ‘Inman predicts he will win’; if different, how would we treat a case where one test assesses Inman (or the brain tissue) as predictive and the other test disagrees? I will have lots of questions when/if you finally reveal the criteria to the public, at least $10 worth of fun. And so long as you are merely expressing Faith in the Existence of the Unseen Criterion, it sounds more like religion than philosophy to me.

Inman

Dear Dan and Inman,

(A joint reply, for reasons that should become clear…)

Dan – That sounds like a good (teleosemantic) avenue to pursue.

One caveat. You say that “Meanwhile, the RRPP folks can continue to try to work out contentless descriptions of the mechanism, but if there are objective teleosemantic reasons to attribute contents, it seems to me they would be doing a lot of work for nothing”.

Here, my exchanges with Inman give me pause. Inman – perhaps unlike Jelle and Julian (?) – seems to allow that we can have good reasons to attribute contents, while still refusing to ascribe those contents to the systemic flows of information as such. Rather, he thinks that even when such ascriptions are well-motivated, they reflect our own explanatory choices rather than describing what is ‘really’ going on. Thus Inman writes that he is often enough “happy to think of predictions as content-ascribing”, adding that “neuroscientists and roboticists use similar ideas all the time in their models. Often no problems at all for those pursuits, and I (as a sometime brain evolver and some sort of engineer) have been happy to talk about (parts of) brains trading in contents”. But then he stresses that he is always (and again I quote) “careful to clarify that I am using this as part of a homuncular metaphor that is often useful and sometimes seriously harmful. Ask a neuroscientist or a roboticist whether a spike train or a pattern of activation is a signal conveying content and chances are they will say, yes, in my model it is conveying that specific content — which may eg be a prediction (here) or an error (there). They need not worry about Horace [the homunculus] too much.

But a philosopher is doing a different job…”

Inman: here’s the rub. I don’t think I am doing a different job. Certainly, I never meant to offer a philosophical theory of content. To be honest, I’m not really convinced that we need one. What we do need (and have) are useful systemic understandings that treat some aspects of downwards influence as predicting the states of certain registers, and some aspects of upwards influence as signalling error with respect to those predictions. Insofar as those models prove useful, and can be mapped onto neural activity, I am happy (and I think warranted) to speak of predictions and prediction error signals in the brain. I certainly don’t think that some bits of the brain experience themselves as dealing in errors while other bits experience themselves as dealing in predictions. That would be homuncular indeed. But once such madness is avoided, I’m not sure what, if anything, can be at issue between us.

Hi Andy,

“I’m not sure what, if anything, can be at issue between us.” — I despair at your inability to see this. I feel I am pointing at the elephant in the room and you are so busy looking at my finger you are blind to the elephant.

I’ll break my position down so as to highlight assumptions and steps I make:

A) My first post suggested one philosophical job: “A central concern of cognitive philosophy is how to understand the relationship between perceiving, thinking, intentional humans (and animals, and potentially robots) and the underlying physical mechanisms of flesh and blood and brain (and potentially silicon).” I agree with Andy’s take on task (A): “any decent story needs to have understanding as a product, not an unanalysed cause”.

B) The neuroscientist’s job is different: roughly speaking: “What mechanisms in the human/rat brain allow the human/rat to see fried eggs, or to predict it is going to rain?” The test for B-success is whether the model predicts interesting things, including eg when perception in humans/rats breaks down.

C) The Roboticist’s job is also different, roughly: “What mechanisms can I build into the Google car so that it reacts appropriately to the stop light and predicts if that child is going to run across the road?” Test for C-success: how many humans/cars are injured.

D) Both (B) and (C) use homuncular metaphors such as “this bit sends a signal (eg of a prediction) to that bit of my model”. In Dennettian terms, they should always be able to discharge the homunculus. They usually (but not always) do this sensibly, aware of the limitations of such an approach, ultimately judged by their different tests-for-success.

E) Both (B) and (C), if sensible, will not posit an *otherwise unexplained* “see-fried-egg” or “predict-child-running-into-road” module in their models. The cartoon, I hope, makes it clear how stupid that would be.

F) Task (A) can be rephrased as “how should we understand what is involved in discharging a homunculus”, since a homunculus is the very model of a cognitive mechanism. This implies that, for the purposes of task (A), it is impermissible to posit as part of the explanation some homunculus that needs discharging; that is begging the question.

G) Andy insists on a theory with a notion of prediction as a central plank, where Inman claims either part of or the whole brain is being treated as a cognitive homunculus doing the predicting.

H) Andy attempts to avoid that homuncular charge by stating: “I [AC] think that there is a pattern of production and use within a system that can justify such talk without requiring that the parts of the systems that trade in the signals understand them as predictions, or as errors, or as anything at all.”

I) Andy, on challenge, fails to produce an operational definition of this, and gives the impression this is a minor inconvenience to be rectified later.

J) Since one can demonstrate that any such operational definition of prediction not using homunculi will inevitably lead to contradictions with the everyday sense of the word prediction, Inman considers Andy’s claim equivalent to “I can redefine 1 to equal 0”.

K) Andy is not doing a philosopher’s job (A). Specifically, though I agree with AC’s statement “any decent story needs to have understanding as a product, not an unanalysed cause”, Andy cannot provide such a decent story this way. Andy’s project is not merely temporarily missing its central plank; it is fatally flawed.

Please don’t look for where we agree — point out just where we disagree. I really get the impression, when you suggest uncertainty as to what issues divide us, that you do not appreciate the depths of the disagreement! It is the difference between a pre-Copernican and a post-Copernican conception of cognition.

Cheers

Inman

Hi Inman,

I think the place we disagree is clear. In reply to your very first note I wrote that:

“I totally agree that we need to make sure any story about ‘predictive brains’ is fully and workably mechanistic. By that I mean just that the story can be implemented without mysterious black boxes that do anything like understanding. But as far as I can tell, nothing in the PP model contravenes that important requirement. For example, the idea of one ‘level’ predicting activity at the level below and then sending forward residual error requires only an automatic comparison and response operation, defined ultimately over physical states and events. Indeed, it had better be (in some sense) ‘automatic all the way down’ – after all, we are ultimately just physical stuff doing what it does.”

I think this requirement is visibly met by PP. You seem to think it isn’t, and that as a result there is some kind of threat of importation of undischargeable homunculi. But since there are plenty of toy PP systems out there, that work without magic, I don’t see that worry at all.

There remains, I agree, an empirical question about whether the brain works in anything like the way any of those systems do. Work by Bastos et al and others is addressing that issue. This means that PP, whether right or wrong, is also allowing us to ask some pointed questions of the brain. Scientifically then, all’s well. I don’t see any fatal flaw!

Hi Andy,

Yes, you are right that at the centre of our differences are different interpretations of what it might mean for Mechanism M (eg a brain) to have a predictive component. I maintain that this means “We can interpret what is going on as some metaphorical person (homunculus) making predictions within some story that helps us make sense”; whereas as far as I can see you reckon there is an interpretation that does not posit such homunculi. I have challenged you to provide such an interpretation, in the form of an operational definition or operational test that decides which of mechanisms M1, M2, M3… are or are not predictive in your sense, and you have not provided one.

You seem to take my view as implying that brains somehow work by magic. Not so! For instance, an example of prediction from early cybernetics would be anti-aircraft fire: roughly, predict where the plane will be in 5 seconds’ time and aim the gun to project a shot to the right place at the right time. (Clear parallels with David Attenborough’s lizard shooting out its long tongue at a passing fly.) I reckon that with some assistance I could design something like that; it would be a mechanism fully explicable with no magic. But it would contain predictive parts in the sense that I had designed it that way, and the labels on my sketch plans would be clear evidence. Now actually, as a onetime evolutionary roboticist, I might have tried to evolve the mechanism rather than design it, in which case there would be no such labels; but still no appeal to magic or ectoplasm, just physics.
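The designed gun can be sketched, under the simplest possible assumption that the plane keeps a constant velocity between two radar fixes, as a linear extrapolation. This is a hypothetical toy in Python (2-D positions, no shell flight time, no gravity, hypothetical names and numbers), not a model of any real fire-control system.

```python
def predict_position(p_prev, p_now, dt, lead):
    """Extrapolate a target's 2-D position `lead` seconds ahead.

    Assumes constant velocity, estimated from two fixes taken `dt` seconds apart.
    """
    vx = (p_now[0] - p_prev[0]) / dt
    vy = (p_now[1] - p_prev[1]) / dt
    return (p_now[0] + vx * lead, p_now[1] + vy * lead)


# Hypothetical plane: moves 200 m east in one second at a constant 1000 m altitude.
aim = predict_position((0.0, 1000.0), (200.0, 1000.0), dt=1.0, lead=5.0)
print(aim)  # (1200.0, 1000.0)
```

Here the word ‘predict’ lives only in the function name, which is the kind of designer’s label Inman has in mind; an evolved controller computing the same quantity would carry no such label.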

Your claim — as far as I can see — is that you can take 3 mechanisms (my designed anti-aircraft gun, my evolved anti-aircraft gun, and the lizard brain, say), all without labels, and categorise them each as either containing or not containing a predictive component — without appeal to any homunculi. I repeat my challenge — let’s see your operational test for deciding, a test that does not appeal to homunculi!

I *think* you consider ‘containing-a-predictive-component’ to be a natural kind that does not require any relation with a perceiving person or homunculus. Whereas I consider the *everyday* sense of prediction to be, like other cognitive terms, inherently relational (to a person or, in metaphor, to a homunculus). Taking relational terms (‘up’ wrt UK/NZ, ‘stationary’ wrt earth/sun) to be objective and non-relational leads to interesting errors (Flat Earth, pre-Copernican astronomy), which is why I call the view I *think* you have pre-Copernican. If I am right, then either (1) you are unable to meet my challenge for an operational test of ‘predictive_AC’ or (2) you may manage some technical non-relational(-to-a-homunculus) definition which can be shown contradictory to the everyday sense of the word prediction.

I could go into all sorts of further pragmatic arguments (eg about the difficulties of modular decomposition of complex systems, about how wasteful it would be for evolution to design things in the modular fashion humans do, about how recent Deep Learning successes seem to be moving away a bit from homuncular paradigms), suggesting that both natural brains and cutting-edge ANNs are unlikely to decompose in ways humans find attractive (maybe “all we have is an image of richly recurrent (and mysteriously asymmetric) dynamics”). But my primary issue here is philosophical, not pragmatic. If you cannot provide an operational test for your non-homunculus-positing sense of ‘prediction’, why should your arguments be taken seriously so long as they are based on an undefined concept? A test that says anything and everything contains predictions is of course vacuous; I suspect your difficulties may be at that end of the scale.

“I [AC] think this requirement is visibly met by PP. You [IH] seem to think it isn’t, and that as a result there is some kind of threat of importation of undischargeable homunculi. But since there are plenty of toy PP systems out there, that work without magic, I don’t see that worry at all.” OK, so my challenge should be easy, then???? :^)

Cheers — and Happy New Year!

Inman

Hi Andy/Inman,

If neural reuse/redeployment accounts (Anderson) are anything to go by, regarding the brain’s capacity to endogenously (top-down-wise) ‘hijack’ the same neural infrastructure normally involved in coping with the (bottom-up) incoming stream, then at least you have a mechanism for mental imagery, right? Wrong? Prediction would just be one among many roles that (mental) imagery takes on (viz. goal registration, planning, logical/counterfactual reasoning, rehearsal, mind wandering, thought/imagination, languaging (Kosslyn, Barsalou), etc.). Provided you also keep your ‘behavioural/conscious-level’ pants on, namely that the agent as a whole is AWARE of this, and you debar any intentional ascriptions to ‘Horacial’ sub-personal shenanigans, then you’d be in the clear, wouldn’t you? The “representings” themselves would consist in precisely that activity. À la Inman’s schema, this would read: A bit of neural infrastructure P, endogenously activated within the central nervous system of a person Q, is a predictive ‘re-presentation’ (simulation (Hesslow)/emulation (Grush)) of an event R (normally ‘presented’ to the agent when having an actual perceptual/sensory encounter with an event of the type R’) to a consumer S (where S can be the same person as Q). No need for sub-personal producers and consumers, and definitely no need for symbol shuffling.

Dear James,

I think the neural re-use scenarios are a very good fit with the PP perspective. Given some new task, existing prediction machinery (adapted to another domain) might prove the best early means of reducing organism-salient PE. Those neural areas would then be the targets for further refinements so as better to serve the new goal/task.

Hi Andy,

Thank you for this great post. One thing I have been wanting to ask you about is the relationship between predictive coding and Bayesian accounts of how the brain works. In the literature, one can get the impression that the two ideas amount to the same thing. But I wonder whether they can come apart: a brain could, in some sense, reduce prediction error without employing Bayes’ law (without doing Bayesian updating). I am not sure whether you will touch on this issue in future posts. If you do so here or in the book, I look forward to reading your take on this issue.

If the two approaches can come apart, then one can also put pressure on the idea of a single, overarching brain mechanism. It could be that the brain is, in some sense, in the general business of reducing error, but it might do so by using different tricks in different domains.

Nico

Hi Nico,

Wonderful to hear from you. That’s a great issue to raise. I don’t have a clean answer, but here’s the way it currently looks to me. Familiar Bayesian stories are defined over ‘beliefs’, very broadly construed. They describe an optimal way to crunch together prior knowledge and new (e.g. sensory) evidence, working within some pre-set hypothesis space. It is well-known that brains cannot, in most realistic cases, implement full-blooded Bayesian inference. Still, there are various approximations available, and perhaps the brain implements one (or more) of them. The upwards and downwards message-passing regime posited by PP would be an apt enough vehicle for some such approximation.
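One way to see how an upward-and-downward error-passing regime can serve as a vehicle for Bayesian inference is the simplest case, where the two coincide exactly. The sketch below assumes a single Gaussian prior, a single Gaussian sensory sample, and an identity mapping from cause to sensation (all assumptions made for illustration, with hypothetical names and numbers); it compares closed-form Bayes with iterative reduction of precision-weighted prediction error. In realistic, nonlinear cases the iterative scheme would at best approximate the posterior.

```python
def exact_posterior_mean(mu_prior, pi_prior, x, pi_x):
    """Closed-form Bayes (Gaussian prior, Gaussian likelihood, identity mapping):
    a precision-weighted average of the prior mean and the sensory sample."""
    return (pi_prior * mu_prior + pi_x * x) / (pi_prior + pi_x)


def pe_minimisation(mu_prior, pi_prior, x, pi_x, lr=0.1, steps=200):
    """Reach the same estimate by repeatedly reducing precision-weighted prediction error.

    Gradient descent on F(v) = 0.5*pi_x*(x - v)**2 + 0.5*pi_prior*(v - mu_prior)**2,
    which converges when lr * (pi_x + pi_prior) < 2.
    """
    v = mu_prior  # start from the prior expectation
    for _ in range(steps):
        eps_x = x - v         # sensory prediction error (bottom-up)
        eps_p = v - mu_prior  # deviation of the estimate from the prior (top-down)
        v += lr * (pi_x * eps_x - pi_prior * eps_p)
    return v


exact = exact_posterior_mean(mu_prior=0.0, pi_prior=1.0, x=4.0, pi_x=3.0)
approx = pe_minimisation(mu_prior=0.0, pi_prior=1.0, x=4.0, pi_x=3.0)
print(exact)             # 3.0
print(round(approx, 6))  # 3.0
```

With these numbers the sensory sample is three times as reliable as the prior, so both routes settle three quarters of the way from the prior mean (0) to the sample (4). The toy says nothing about the further departures discussed next (generating the hypothesis space, action-orientation).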

However, all that leaves untouched what I see as the major challenge. The challenge arises because the PP stories depart from the familiar Bayesian core not merely in virtue of being constrained to approximate (that’s common enough) but in two further key places. First, they are as much about the generation of the ‘hypothesis space’ as they are about exploring it and updating within it. This is where unsupervised multi-level prediction-driven learning does special work. Second, they (PP-style brains) are ultimately – as per my second post – self-organizing around the demands of successful action rather than aiming for good descriptions or updated ‘beliefs’. They are self-organizing for cheap successful engagement rather than ‘descriptive fidelity’. That puts an importantly different spin on the story.

The upshot is that the value of the mapping from familiar Bayesian stories onto these dynamical, self-organizing, action-centric accounts is (to me, and more on some days than others!) unclear. I don’t doubt that such mappings exist. There are already Bayesian treatments that address each of these areas (for a lovely review, see William Penny’s “Bayesian Models of Brain and Behaviour”). But the more ‘cybernetic’ the vision of PP becomes, the less these mappings may be telling us.

That said, there is no doubt that Karl Friston – a major architect of the stories – thinks there is real value in applying the Bayesian perspective (in the guise of maximizing model evidence) to accommodate all cases where something self-organizes so as to temporarily resist the second law of thermodynamics – see for example his entertaining NeuroImage narrative “The history of the future of the Bayesian brain”.

Hi Andy and Inman,

Thanks for these interesting posts.

Why not also take into account the fact that predictions and actions do not exist by themseves, that they are not for free ? (the same for interpretations).

Any agent (animal, human, robot) predicts and acts for reasons related to its nature. Animals predict and act to stay alive. Humans do it to be happy. And artificial agents do it in conformance with what they have been designed and programmed for.

And this can be worded in terms of internal constraint satisfaction.

Animals: stay alive (individual & species).

Humans: look for happiness, limit anxiety, valorize ego, …

Artificial agents: as designed and programmed for.

The constraints are intrinsic to the agent for living agents, and derived from the designers for artificial agents (AAs).

This brings us to meaning generation for internal constraint satisfaction (https://philpapers.org/rec/MENCOI). Agents generate meanings when they receive information that bears on the constraints they are subject to. The generated meaning is used by the agent to implement an action that satisfies the constraint.

It seems to me that meaning generation has a place when addressing prediction, action and embodiment.

Would you agree?

All the best

Christophe

Dear Christophe,

This sounds right to me. The physiological and behavioural needs and action repertoires of an organism place strong constraints on what it will need to predict and when. For the action-repertoire part, see “Predictions in the light of your own action repertoire as a general computational principle” (König et al. 2013). For the physiological-needs part, see Seth, “The Cybernetic Bayesian Brain”. This is also the kernel of the standard solution to the so-called ‘darkened room’ puzzles that I’ll mention in a later post.

Hi Andy, Inman, everyone

Just started to go through these highly instructive posts. Lovely stuff.

My take is that there remains a productive tension about whether ‘predictive processing’ views, which posit a functional architecture featuring things like ‘prediction error’ and ‘generative model’, (i) are simply off track, (ii) provide an empirically productive framework for studying embodied and embedded brains and behavior, or (iii) are, when cashed out mechanistically, good approximations to things that brains actually do in order to help organisms get by. Dismissing (i) for the hell of it, the options are – broadly – framework vs. mechanism. And here it is not possible to magic discriminative criteria out of a hat. Instead, we need explicit theories and models of how mechanisms that (approximately) implement predictive processing generate perception and action. This is a tough job, and papers like Bastos et al. are just the start. There are many options for how neural mechanisms may implement approximations to optimal Bayesian inference on the (unknown, hidden) causes of sensory signals, and probably none of them are correct. Let’s see how we get on. [Here the mechanistic ‘cashing out’ seems key to avoiding the Horace problem.]

On the other hand, these ideas shed much-needed light on ‘top-down’ dynamics in the brain during perception, cognition and action, so the framework is without doubt empirically productive. There is an exploding literature in cognitive neuroscience on the role of ‘expectations’ in perception, cognition, and action.

As kindly hinted by Andy, I think the real place this all comes together is when we understand generative models of sensory signals to be in the business of control and regulation (sensu Conant and Ashby, hence the ‘cybernetic’ Bayesian brain) and not in the business of representation.

Hi Anil,

Where you talk of ideas such as predictions, Bayesian inference etc. in the brain as being empirically productive, I agree with you insofar as the ultimate tests are whether neuroscientists’ models or roboticists’ models are successful at their respective jobs.

I am glad you brought up Conant and Ashby, since this is an excellent, clear example of the error I am accusing Andy of making. Take their 1970 paper “Every Good Regulator of a System must be a model of that System”: this is not computational neuroscience (they propose no actual models of human/rat brains), it is not robotics (they propose no circuits to go in a Google car). Rather, they tackle what I broadly call a philosophical question, of the same class that I have been guessing (perhaps wrongly) that Andy is attempting.

Unlike Andy, they are prepared to define their key terms carefully, so one can follow their reasoning. The formal proof is, to my mind, perfectly satisfactory, and the title of their 1970 paper is a perfectly reasonable summary of what they prove. **BUT THEN**, in the Abstract, in the Conclusions and throughout, they completely misrepresent what they have proved as being the necessity of **making-a-model**. Now being-a-hat and making-a-hat are two very different things, and having-a-hat-inside is yet a third; the same goes for models. I’m generally a fan of Ashby, but this is one crass mistake he makes here, and once it is pointed out it is rather difficult to see how they got away with it.

If we could get to see Andy’s definitions, it is probable that he is making a version of this Conant and Ashby mistake. It is a classic error!

Inman

Dear Inman and Anil,

Interesting exchange!

I think the Conant/Ashby story proves too much, at least if ‘model’ were to be taken in any strong sense. The Watt governor regulates, but it models the regulated system (the loom, say) only in a rather weak and (to me) uninteresting sense.

However, systems that do fancier things (for example, deciding on future actions by exploring possibilities in their imagination) may need to model in a richer sense. And that seems to be the sense implied by Anil, and Karl, in their comments concerning ‘counterfactual prediction’ – see my reply to Anil in installment 2 (conservative vs radical).

Thanks for the probing questions, Inman… and happy holidays!