This post ends with a brief discussion about anxiety about the internal. I take that anxiety to arise when we see strong arguments for the idea that theories cannot successfully posit non-reducible mental states that provide distinctive causal explanations. The idea that the causal powers producing our beliefs, actions and emotions are series of neural excitations and nothing more can certainly make the mental look epiphenomenal. And it leads many, I think, to feel that if they cannot get states such as beliefs and desires into the head, then we are left with a bleak view of ourselves as little more than automatons.

We will start with a very brief summary of one point from the previous post. Then we will discuss radically different senses of “representation,” one dominant in neuroscience and the other in contemporary philosophy of mind. We will next look at the argument that we do not have a successful theory giving us inner mental states with contents. This discussion leads into the discussion of the anxiety about the internal.

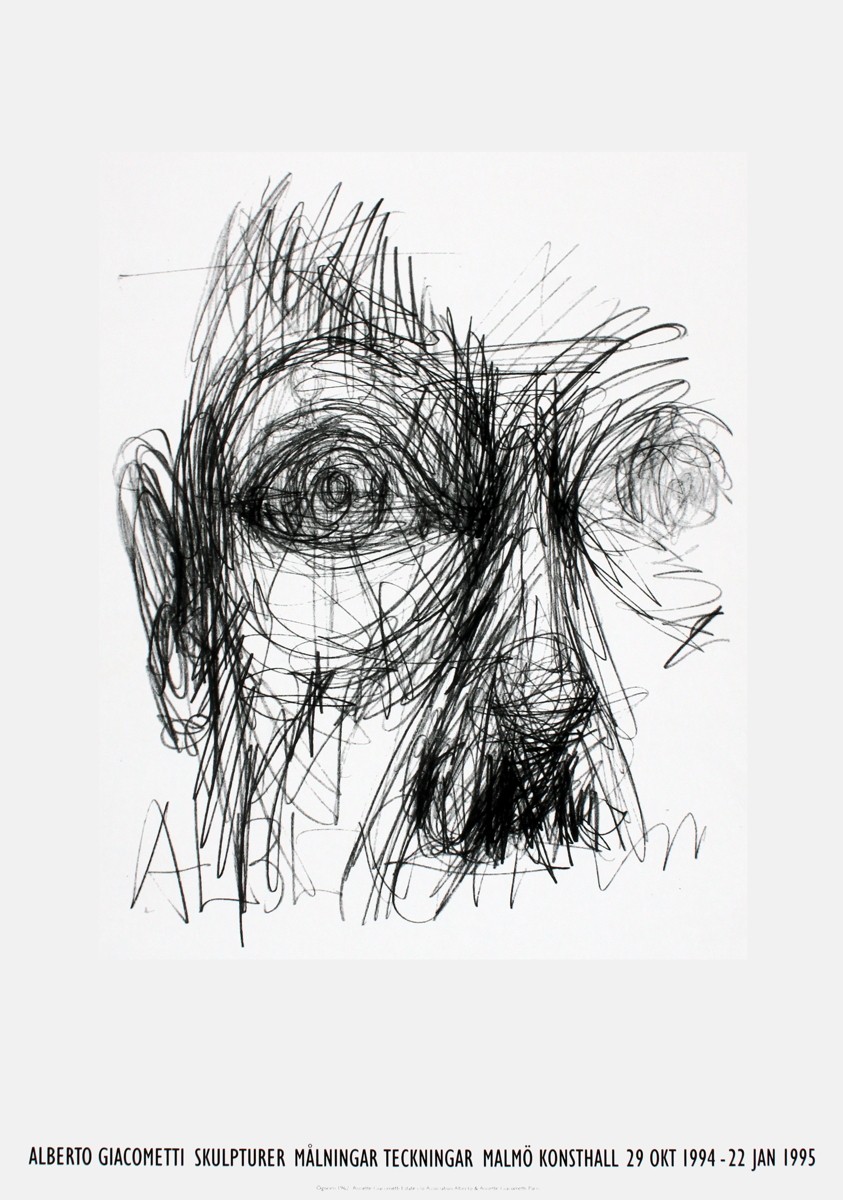

We’ll end with the merest glimpse of one topic for the next post. The glimpse is a photograph of the very naughty Rusty, the red panda that escaped from the National Zoo.

——————————————————

The main theories of affordances we have looked at have the motivation for our movement coming in the first instance from the environment. A different approach would have the motivational power located in us. Supposing we grab at a piece of chocolate cake, the first kind of theory encourages the explanation that the cake called to us, presented itself as irresistible, etc. Theories of the second sort emphasize the internal state we brought to the cake as the motivation’s source. In the second case, it may be part of the story that one really longed for chocolate cake before one was even in its vicinity. But can we locate a desire for a piece of chocolate cake in the mechanism we looked at that depended largely on bursts of dopamine?

Desires are also thought to be mental representations. Before we address them directly, we should look at representations in cognitive neuroscience (CNS) and contemporary philosophy. The two fields, I want to suggest, are employing ‘representation’ in very different ways. Hence, the cognitive scientist’s insistence that there must be representations of goals need not be denying Dreyfus’ claim that there need not be any such representations. Rather, they are speaking of different things.

CNS draws on a model of representations that has been around since the days of Aristotle. On this older model, to represent something is to realize it, exemplify it or even copy it. And exemplifying something or copying it is quite different from being about it, as the newer sense takes representations to be. Thus a copy – a color sample – can represent the color I painted my study, though the sample is hardly about my study. I might take a paper to represent my work at its best; the paper may not be about my work, but it exemplifies my best work. Similarly, a protest may represent something an administration feared would happen without being about their fears. The protest might instead be described as realizing their worst fears.

One place where we find the older model is in Aquinas’ philosophy. Uses of the model will vary with one’s ontology, and in Aquinas’ philosophy, we find a robust conception of matter and form. In his philosophy, then, perception and cognition about a cat involve realizing the cat in one by realizing the relevant sensible and intelligible species. In my next post here on the Brains blog, we will see the results of interpreting his accounts of perception and cognition in terms of our very different contemporary philosophical notion of representation. For now, we can see the color of the cat and the essence of the cat as both forms which can be instantiated in different objects. As I see the cat and recognize it as a cat, the sensible ‘speckled grey’ gets realized in my sensory system, while the essence of the cat is realized in my intellect.

Neither of the realizations will mean we can find any physical trace of the cat in one’s head. Rather, the sensible and intelligible species are realized in ways that are specific to perception and cognition. (One might find this explanation circular.) How could Aquinas’ idea translate into the ontology and terminology of CNS? Oddly enough, a passage in a recent article about a new development in CNS could have been designed to answer just this question. Thus Kriegeskorte, Mur, and Bandettini (2008) note:

Naively: The representation is a replication of the object, i.e., identical with it. (Problem: A chair does not fit into the human skull.) More reasonably, we may interpret first-order isomorphism as a mere similarity of some sort. For example a retinotopic representation of an image in V1 may emit no light, be smaller and distorted, but it does bear a topological similarity to the image. More cautiously, we could maintain that first order isomorphism requires only that the representation has properties (e.g., neuronal firing rates) that are related to properties of the objects represented (e.g., line orientation). While the naive interpretation is clearly untenable, the other interpretations are generally accepted in neuroscience. We concur with this widespread view, which motivates studies of stimulus selectivity at the level of single cells and brain regions. However, we feel that analysis of the second-order isomorphism (which can reflect a first-order isomorphism) is equally promising and offers a complementary higher-level functional perspective.

Notice that the passage presents this view of representation as standard in neuroscience. Nonetheless, we need to be careful about how we interpret it. The isomorphism is not a simple structural isomorphism; rather, the idea is that the resulting representation will have a value for each causally registered feature. Which features actually get so registered is an important question for understanding perceptual experience.

The role of similarity is very prominent in a quickly developing area of cognitive neuroscience that seeks to bring together three major branches of systems neuroscience research: brain-activity measurement, behavioral measurement, and computational modeling (Kriegeskorte & Kievit, 2013; Laakso & Cottrell, 2000; Mur et al., 2013). The key to achieving a unified picture from such disparate sources resides in the similarity obtaining among the “representations.” What is looked for is an isomorphism between stimulus and initial responses, and then a second-order similarity between the responses as they evolve. The analysis we are looking at focuses at first on a kind of similarity among stimuli, but then moves to look at a second-order similarity among neural reactions. In many theories, the second-order similarity is given by looking at distance matrices, which tell us which reactions are differentiated responses to a stimulus. What is important to us is that the move from similarity of stimulus to similarity of reactions does not give us semantic content. A major part of the reason for this is that similarity does not give us bivalent satisfaction conditions.
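For readers who want something concrete, here is a minimal sketch of the distance-matrix idea. It is an illustration under stated assumptions, not the method of the papers cited: the response patterns are invented toy numbers, the function names (`rdm`, `second_order_similarity`) are hypothetical, and I use Euclidean distance with a Pearson correlation where published analyses often prefer correlation distance and rank correlations. Each system gets a matrix of pairwise distances among its responses to the same stimuli, and the two matrices are then compared.

```python
from math import sqrt

def euclidean(u, v):
    """Distance between two response patterns of equal length."""
    return sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))

def rdm(patterns):
    """Distance matrix: entry [i][j] is how differently the system
    responds to stimulus i and stimulus j."""
    n = len(patterns)
    return [[euclidean(patterns[i], patterns[j]) for j in range(n)]
            for i in range(n)]

def upper_triangle(matrix):
    """Off-diagonal entries above the diagonal (the matrix is symmetric)."""
    n = len(matrix)
    return [matrix[i][j] for i in range(n) for j in range(i + 1, n)]

def pearson(xs, ys):
    """Pearson correlation of two equal-length lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)

def second_order_similarity(patterns_a, patterns_b):
    """Compare two systems by comparing their distance matrices,
    not their raw responses (which may differ in size and units)."""
    return pearson(upper_triangle(rdm(patterns_a)),
                   upper_triangle(rdm(patterns_b)))

# Toy data (assumed): three stimuli, a 2-unit "brain" and a 3-unit "model".
brain = [[0.0, 0.0], [1.0, 0.0], [0.0, 2.0]]
model = [[0.0, 0.0, 0.0], [2.0, 0.0, 0.0], [0.0, 4.0, 0.0]]
score = second_order_similarity(brain, model)  # close to 1.0: same geometry
```

Note that the output is a graded score. Nothing in the computation marks a point at which the comparison becomes true or false, which is the feature of similarity the argument here turns on.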

I don’t have the space or the mathematical skills to elucidate the representational-similarity theory very fully, but it should be clear that it allows for what neuroscientists mean when they say things such as:

Research repeatedly points to the prefrontal cortex as a site where goals are formed, selected, and actively maintained. … Currently, our best idea is that goals are represented by recurrent patterns of neural activity that are stable for some time and can be distinguished from ongoing background activity. (Montague, 2007)

Goals realized in the brain are recurrent patterns.

There are some important questions we might ask of similarity accounts. One concerns truth. Similarity is ill-suited to give us bivalent truth-conditions. But many theorists think that getting to the truth is a major point of perception. The second concerns what we can call ‘individuation’. We might think of this second problem as coming in two forms. The first concerns the definiteness that mental representations are thought to make available. A burst of dopamine may come from a reliable conjunct of a piece of chocolate cake, but it does not have the indexical element that desires with propositional content can have. We might say that the content of a desire can contain a specification that one wants this piece of cake. Secondly, a sample can be a sample of an indefinite number of things. That is why, we can remind ourselves, Goodman thought that a sample or example was such only if a symbolic system could be used to specify what the sample or example is of (Goodman, 1968).

I do not have the space to address these problems here. I’ve tried to do so elsewhere. For truth, see https://annejaapjacobson.files.wordpress.com/2014/03/vision-theory-ajj.pdf. And the questions of individuation are discussed here: https://empiricalphilosophy.wordpress.com/2014/11/27/paper-response-to-the-natural-origins-of-content-by-hutto-and-satnet/.

Desire:

We can see much in the philosophy of mind as treating desires as supervening on neural processes of the general sort we have described, if not with the details we have provided. There is, however, a problem with this. Desires are typically thought to have contents, which provide satisfaction conditions. Accounts of how internal states get the sort of content desires seem to have – such as the desire that I fill up on chocolate or the desire that I rest on the sofa – aim at giving us the truth-conditions for ascriptions of such desires to their possessor. That is, they tell us that, for example, my desire is a desire for chocolate because of how evolution created my tastes or how I was taught to identify things. But even if the account gets the truth conditions right, we need to ask whether what is achieved is anything like a causal element in the scenarios we have been looking at.

If the theorist bringing in desires sees them as reducible to the neural mechanisms we are describing, there does not appear to be a problem with their being causes. Rather, there is a problem with their literally having content. If desires have to have content, arguably reductive desires are not really desires.

[Much of what follows here is new and has received no critical feedback. I’d love to hear what you think about it.]

Non-reductive accounts, with their content, do present a very serious problem. The problem is that the elements typically cited as creating the content do not add to the causal powers present in the neuroscientific reductive base. Let us consider the teleological/learning accounts first. The problem can be illustrated by considering Prinz’s improvement of Dretske’s account. Consider, then, the amendment of Dretske’s account offered in (Prinz, 2000):

… the real content of a concept is the class of things to which the object(s) that caused the original creation of that concept belong. Like Dretske’s account, this one appeals to learning, but what matters here is the actual causal history of a concept. Content is identified with those things that actually caused incipient tokenings of a concept (what I will call the ‘incipient causes’), not what would have caused them. (P. 249)

Neither the things that originally caused the tokening nor the causal history of the concept add causal properties to those already present in the reductive base. Similarly, if we want to trace neurally the path from the frog’s sighting of a speck to its zapping it with its tongue, conjectures or even known facts about evolutionary history are beside the point.

The problem here might be seen as inspired by Kim’s causal exclusion problem (Kim, 2007). However, it seems to me that what I am doing is undercutting the source of the more metaphysical problems Kim finds. These metaphysical problems arise with some kind of overdetermination that non-reductive accounts of the mental may be thought to bring in. I have given reason for thinking that while they do add new truth-conditions, they do not add additional causal powers. That said, appeals to desires may have additional explanatory power. They set the agents and their actions in the context of norms arising from a number of sources, including social.

Explanations in terms of desires, we might say, can refer to the supervenient base or a social context or both. Many, many things get placed in such positions, birthday cakes among them. A birthday cake may have been a perfect gift except for the fact that eating it caused its recipient a terrible rash. Psychoanalytic concerns aside, it is a good bet that the rash was caused by the physical composition of the cake, but its being a perfect gift – at least until sampled – concerns its position in a social setting.

Explanations of beliefs, actions and emotions in terms of standard reasons are not, I have argued elsewhere, causal explanations. These arguments are in two papers I link to in the comments on my first post. This is not to say that the neural mechanisms cannot have effects that we do describe as the effects of mental states. Just as the cake can cause a rash, a strong desire might make one shake or cause one to forget something else.

I think there may be a mistake cropping up at various points in the history of philosophy. The mistake we might put as “reading ontology off of logical/semantical form.” This mistake, if mistake it is, is very easily committed with causal statements. Hume took ordinary singular causal statements to provide the ontology onto which natural necessity would or would not fit. (It didn’t fit.) Davidson took ordinary psychological explanations in terms of beliefs and desires to be causal explanations of actions. That involves understanding propositional attitudes as items in our heads, a task at which arguably we have yet to succeed.

Having felt for decades the urgency of finding a place for beliefs, desires and other psychological states in the physical world that does not leave them without any causal role, I am now puzzled by that feeling. I suspect that it rests on my missing a distinction between the sub-personal level described in terms of micro-entities and the personal level described in terms of the interests of the person. Once neuroscience has advanced enough, I think we can lose what we might think of as the anxiety of the inner. That is the anxiety that if we cannot get personal traits inside us to have their effects on our muscle movements, among other things, we will be like primitive robots. It seems unpalatable to think of us just as buffeted by these surges of dopamine. But once we realize that the dopamine is part of a very complicated system involving vision and movement, among many other things, which is attuned to provide for basic survival in our niche, “buffeting” seems less appropriate. Furthermore, as our experience extends beyond that of infancy, the dopamine system can respond to new interests, including those taught by our environment. Further, we can of course exercise some control over it, even to the extent of checking ourselves into rehabilitation clinics.

In my next post, I’ll be looking at some more historical issues, but we will also consider some contemporary problems with accounts of concepts, such as Prinz’s amendment of Dretske’s. Red pandas provide a good example of a concept that eludes some standard treatments. We’re ending now with a picture of a very naughty red panda strolling down a sidewalk in D.C. after it escaped from the National Zoo.

References

Goodman, N. (1968). Languages of art: An approach to a theory of symbols. Indianapolis: Bobbs-Merrill.

Kim, J. (2007). Causation and Mental Causation. In B. P. McLaughlin & J. D. Cohen (Eds.), Contemporary debates in philosophy of mind. Malden, MA: Blackwell Pub.

Kriegeskorte, N., & Kievit, R. A. (2013). Representational geometry: integrating cognition, computation, and the brain. Trends in Cognitive Sciences, 17(8), 401-412. doi: 10.1016/j.tics.2013.06.007

Kriegeskorte, N., Mur, M., & Bandettini, P. A. (2008). Representational similarity analysis – connecting the branches of systems neuroscience. Frontiers in Systems Neuroscience, 2. doi: 10.3389/neuro.06.004.2008

Laakso, A., & Cottrell, G. (2000). Content and cluster analysis: Assessing representational similarity in neural systems. Philosophical Psychology, 13(1), 47-76. doi: 10.1080/09515080050002726

Montague, R. (2007). Your brain is (almost) perfect: How we make decisions. New York: Penguin Group.

Mur, M., Meys, M., Bodurka, J., Goebel, R., Bandettini, P. A., & Kriegeskorte, N. (2013). Human object-similarity judgments reflect and transcend the primate-IT object representation. Frontiers in Psychology, 4. doi: 10.3389/fpsyg.2013.00128

Hi Anne! Trying this nifty new comment feature. My understanding of the Kriegeskorte stuff is that “isomorphism between stimulus and initial responses” doesn’t play a role at all – they’re just looking at similarities and differences in how a system treats a range of stimuli. Have I got that wrong?

But can’t similarity give us accuracy conditions? (As long as there is a definite target for the representation, and some measure of closeness to the target.) These are pretty close to truth conditions – certainly a sort of content. Maybe you’ll get into this in a subsequent post.

Neither the things that originally caused the tokening nor the causal history of the concept add causal properties to those already present in the reductive base.

I have long been confused by Davidson’s Swampman thought experiment in which he essentially says that two instances of Davidson, realDD and a molecularly identical copy – swampDD – that replaces realDD, are “psychologically different”. E.g., swampDD can’t “recognize” realDD’s friends since he has never actually “cognized” them, notwithstanding that swampDD’s behavior is indistinguishable from what would have been realDD’s behavior. I view us as robots (though not primitive but incredibly complex) the actions of which are implemented by context-dependent behavioral dispositions implemented in neuronal structures that emerge from a combination of nature (innate) and nurture (learned via plasticity). If so, since in a given context at a given time those structures completely define our behavior, I don’t understand Davidson’s claim. Although a fan of his, I suspect he was subject to what I see as an unfortunate tendency in the field to mix the two descriptive vocabularies to which you allude. As Ramberg points out in his “Rorty and His Critics” essay, they are intended for quite different purposes and so have different realms of applicability.

I read the above quote from your post and the sentence about Davidson and psychological vs causal explanations as supporting my position. I’ve read an objection or two to Davidson’s position, but none as succinct as yours. So, I really hope I’m reading you correctly as I could use some positive reinforcement.

As for being “buffeted” about, I think what saves us is that the buffeting – the cause/effect or context/behavior relation – is too complex to admit prediction, either from a first or third person perspective. So, what we do continues to surprise, ourselves no less than others (although our long-term significant others are perhaps surprised less often than they’d like!)

Anne, As I eat the chocolate cake I realize that it is actually made from sawdust and the icing is a plastic compound with a strong vinegar taste. I immediately stop eating it because it is not chocolate cake and my desire was in fact the experience of eating chocolate cake. Are not desires and beliefs previous experience or future hopes so in fact I never desired the piece of cake? But the experience?

The cake is actually currency or holds a belief or value. In fact prefrontal activity is actually a mediator of experience so in fact I can train it to mediate the value of the experience of eating the cake versus my daily Weight Watchers Points Value, I may feedback experience into my emotions in the form of guilt that I went off my diet? Or I might just sit back and light up a cigarette after enjoying it?

I’ve been caught up in non-philosophical tasks all afternoon; I’ll try to make a start on answers, but may not get far before dinner! If your comment isn’t discussed right away, do know I’ll get to it tomorrow morning.

Dan, I’m not sure what the import of your comment is. That is, “My understanding of the Kriegeskorte stuff is that “isomorphism between stimulus and initial responses” doesn’t play a role at all – they’re just looking at similarities and differences in how a system treats a range of stimuli.”

They do want very systematic similarities and differences so that we can ask, e.g., whether – and if so how – the processing of artificial kinds differs from that of natural kinds. So the nature of the input and its origin are important.

So maybe your question is whether the isomorphism is important. I think it is. What they want is an initial response that reflects the whole array of traits of an object that are affecting our reaction. Further, they want a two-way inference: from stimulus to (eventually) the stages of processing and from stages of processing back to features of the stimulus. (I’m being sloppy here, since some traits are lost in processing, etc.) That makes it seem as if they need to start off with something deserving to be called ‘isomorphism’, I think.

About truth – I need to think. I do think it’s a mistake to think that, e.g., vision evolved to get us the truth about the details in our environment. There is also so much ‘wrong’ with our perception if we think that going for the facts is a good thing, since we miss out on a great deal. Given the effects of attention on what we register, should we say most of our perception is false? I’m not sure here. Equally, it isn’t clear what space in our visual perception really is. Arguably, at one stage it is Newtonian but more Einsteinian at a later stage. So where would truth be?

Perhaps a more serious question is what one would get by saying that similarity is pretty much truth.

I wonder if I should eat dinner before I try to say anything about your interesting questions!

On the initial isomorphism: I might just be confused about terminology here. How is the isomorphism between stimulus and initial response any different from a simple informational relationship? An example of a simple informational relationship would be: degree of neural response carries information about level of brightness, say. But there need be no natural correspondence here, the transformation can be gerrymandered (low brightness–> medium activation; high brightness–> low activation, etc). We have information, but no isomorphism except in a trivial sense. Kriegeskorte et al. don’t seem to need a more substantial isomorphism between stimulus and initial processing than this. This also seems consistent with the two-way inference you mention above.

On truth vs. accuracy: I just meant that accuracy gets you something like content in the form of accuracy conditions. A toddler’s blocky airplane toy is less accurate than a detailed hobbyist’s model, given a particular type of plane as target. What counts as adequate accuracy depends on context. Lower accuracy is fine for the toddler, but not for the hobbyist. Similarly for vision. Note that there’s no level of accuracy where you suddenly cross the line from falsehood to truth–those concepts don’t get a grip here. But it still seems that each model would be associated with accuracy conditions, which are relevantly like truth conditions for this to count as content in a modern sense.

This would of course require correspondence rules: which relations in the representation are supposed to correspond to which relations in the target. For the model planes, spatial relations in the model are supposed to mirror spatial relations in the target. I’d say teleology furnishes those (as well as the identity of the target), but there are alternatives. Maybe you would just deny that there are any such correspondence rules for vision? (I’m not sure if the Newtonian vs. Einsteinian contrast you point to lies in different correspondence rules, or in different accuracy counting as adequate. But it doesn’t seem to undermine the very existence of accuracy conditions.)

In short: do you think vision can be more or less accurate, even if “true” doesn’t belong here? If so, it seems to me that you’re committed to saying that visual states can have content in a modern sense. (Which might mean we need not move to the Aristotle/Aquinas notion of representation.)

Dan, I’m trying to remember just why I took a theoretical turn against truth, as it were. I think there were – and still are – a number of reasons why it seemed to me better to locate truth further down the line in beliefs or states at that sort of cognitive level.

1. Isomorphism is cheap and plentiful, much more so than one would think truth is. I don’t want blades of grass, for example, being accurate about other blades or getting them right or whatever.

2. When we look at fairly simple cases where we have fairly engaged intuitions, it seems to me we don’t really want to talk in terms of truth or falsity, or even being accurate. Thus our ability to pick up others’ emotions varies according to the recipient, but it is also easy to make mistakes in different cultures and so on. Picking up someone’s anger, then, seems to me a paradigm of the representation-by-copying model; I take it here that if I pick up someone’s anger then to some extent I feel angry. But of course what I experienced as anger might actually have been something else, including strong desire. Do we want to say that the anger is false or inaccurate? That seems to me wrong; what can be false or inaccurate is the possible belief I’ll have about what the person is feeling.

3. Interestingly, philosophers who take basic sensory-cognitive states to be re-realizations (examples, copies, etc) often deny that the inner state is true or false. This list includes Aristotle, Locke and Hume. Thus:

While Locke says:

Any idea then which we have in our minds, whether conformable or not to the existence of things, or to any idea in the minds of other men, cannot properly for this alone be called false. For these representations, if they have nothing in them but what is really existing in things without, cannot be thought false, being exact representations of some thing: Nor yet, if they have any thing in them differing from the reality of things, can they properly be said to be false representations, or ideas of things they do not represent. (Locke: ECHU PT 2 Ch. 32.21)

And Hume:

Our ideas are copied from our impressions, and represent them in all their parts. When you would any way vary the idea of a particular object, you can only encrease or diminish its force and vivacity. If you make any other change on it, it represents a different object or impression.

This seems spot on for mirroring cases of actions and emotions. So if one wants a unified theory, this is a reason for extending the idea.

Finally, my basic task is about what is going on in cognitive neuroscience, and so it is descriptive at bottom. Philosophy of mind comes in when I/we ask what we can successfully add to a project concerned with the causes of, e.g., my grabbing the cake. And though the neuroscientists sometimes talk with the philosophers, their examples are very, very often closer to my sort of cases. It is, of course, possible that Kriegeskorte would say that if we could get the chair in our head, or a duplicate of a chair, then we’d have a true or accurate chair with content, but that seems unlikely to me. (This may not be much of an argument.)

In Keeping the World in Mind I went through quite a few neuroscientific examples using “represent” or “representation” in the actual literature, searching with Web of Science. Then I tried to reconstruct them in terms of being about, having content, etc., and it just did not work. Another example from an SPP conference: a neuroscientist was talking about how we represent sentences in our heads. I asked him if he meant that we had brain states that were about the sentences on, e.g., a page and accurate or inaccurate representations of them. Or did he mean that we had something like sentence-equivalents in our heads. He emphatically chose the latter. That seems right. The thing in our heads seems to be about something – maybe “The cat is on the mat” – but it isn’t about the sentence on the table.

Similarly for isomorphism. I’m not sure about other relations doing the job as well, since the job being done has to fit desiderata coming from all over the place, where one can start to generalize about the results of single cell recordings, fMRI readings, etc. But even if one can, my project has a strongly descriptive base.

I hope this helps. I’m quite happy to leave your project without substantive objections, since I think we’re doing different things.

Thanks so much for the thoughtful reply – yes, it does indeed help. I think you’ve probably hit the nail on the head when you say that we’re engaged in different projects.

Two questions to which I’m not really expecting answers (after all, you have other posts to write!). 1) Do you ever worry that the descriptive project could fail simply because the neuroscientists et al. are somewhat confused in their use of the term “representation”? Perhaps they use it in many varied ways, while not clearly distinguishing among them. 2) If an emotion has the function of simulating someone else’s emotion, doesn’t it make sense to talk about its accuracy?

Charles, thanks for your agreement. I think we could see the examples Davidson picks out as distinguishing the two as relational. Swamp Man will also not have DD’s parents, for example.

My psychological explanations are in effect, I think, relational. That’s part of why I think they are not all in the head, pace Rupert et al.

VicP, you might be interested in Read Montague’s work on hidden biases. His examples deal with art objects, but the point carries over to biases doctors may pick up from having received nice lunches from pharmaceutical companies. There’s interesting stuff about the prefrontal cortex and how expertise can protect us from hidden biases. So there’s room for your training.

The papers are on his official website, and pretty easily identified by their titles. Anne Harvey is the first author on one.

Davidson’s examples of swampDD’s psychological differences were failure to re-cognize friends, failure to really know their names, failure to really know the same meaning of a word that realDD did – all cognitive differences. And according to Sellars, cognition is relational in the sense of being a matter of social practice – the asking for, and giving of, reasons for what one says. But that’s behavior, and swampDD behaves exactly as realDD would have – i.e., he knows all the same things.

That may be an example of one problem when mixing vocabularies. One tries to express a word from the psychological vocabulary using words from the physical vocabulary. Then when the latter doesn’t quite fit the sense of the former, the redefinition is declared a failure – ignoring that there will always be a difference since the vocabularies are for different purposes. If one could successfully redefine words from the psychological vocabulary using the physical vocabulary, at least among the cognoscenti there would be no need for the former. Perhaps something like that problem is what you have in mind by “reading ontology off of logical/semantical form”.

Parenthood is also cognitive in a sense – something one knows. But only an omniscient entity can know that realDD’s parents are not swampDD’s because there are no witnesses to the transformation, friends will detect no behavioral differences, DNA will disclose no genetic differences, etc.

Thanks, Anne, for the reference to Read Montague. Haven’t read his work yet but started by watching his TED Talk https://blog.ted.com/2012/09/24/12-talks-on-understanding-the-brain/

Agree with his insights about currency and valuation, and that most of our brain structures are there for social interaction.

As an aside wonder if you catch Aaron Sorkin’s Newsroom series on HBO. It’s a treasure trove of human social interaction across groups (liberal, conservative, mid-western, British, young, old, male, female…).

Awesome post. As crucial as anxiety might be on the motivational side of these issues, the bottom-line is that it really has nothing to do with the issue at hand, and should be viewed as a potential source of bias. As a researcher, one should be deeply suspicious of any ‘folk efficacy intuition.’ Ontologically, we have the way that biomechanical explanations distribute efficacies. Empirically, we have the growing mountain of ‘ulterior functions’ discovered in cognitive science. Historically, we have ample examples of the way science overthrows even our most cherished prescientific intuitions. Would it be fair to say that the only thing really warranting scientific (as opposed to personal/interpersonal) attributions of folk efficacy–the spooky functions attributed to beliefs, desires, goals, etc.–are our intuitions and the traditions raised about them?

The fact that intentional idioms do work in specialized contexts in no way evidences the extraordinary and naturally inexplicable properties human reflection seems prone to attribute to them. If anything, it argues their heuristic nature (https://scientiasalon.wordpress.com/2014/11/05/back-to-square-one-toward-a-post-intentional-future/). Once this abductive door is slammed shut, intuition is all the intentionalist has left, isn’t it? What role do you think our introspective appraisals have to play in this debate?

Awesome comment! I think that attributions of psychological states are – at least many of them – tied to our language use. In fact, I’m inclined to think philosophers do too often read an ‘explanation’ of an assertion off of the assertion itself. So my saying “I see an apple” means that I believe I see an apple and that belief is explained by the existence in me of a state with that content. I don’t think that by itself gets us spooky properties, but it does not seem like a good way to construct explanations. One bad thing is that we get causal chains of entities that would slow us down considerably.

At the same time, I think that with an intentional language we will have need for what look like mental state ascriptions, but they are not about inner causes. They are instead ways of situating us in a norm-rich environment, to put it roughly. Kristin Andrews’ work on this is just great, I think. She stresses the many different kinds of things that are appealed to in so-called folk psychological explanations. If I hear a number of ambulances and see someone running, the explanation of her running might be that she’s a nurse and needs to get to the hospital right away. This kind of explanation, appealing to her job and what it requires, seems to me close to how we should understand explanations that appeal directly to desires, such as “She wants to help.”

I think we’re close to agreement. I will check out your link.

Thanks for the comment.