In my final post I consider whether all our visual cues to scale function at the level of cognition rather than vision, and the kind of theory that ‘vision without scale’ would imply.

1. Physiological Cues

There are two aspects to 3D vision: shape and scale. So far we have discussed questions regarding shape; specifically, which cues contribute to the perception of 3D shape, and which cues merely contribute to its cognition? But the question of scale is just as important; namely, can we differentiate a small object up close from a large object far away?

It is at this stage that vision science typically shifts from optics (analysis of the retinal image) to physiology (analysis of muscular sensations). Since Kepler (1604) and Descartes (1637) the emphasis has been on two specific physiological cues to distance:

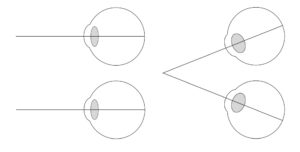

1. Vergence: The closer an object is, the more the eyes have to rotate towards each other in order to fixate upon it.

2. Accommodation: The closer an object is, the more the lens in the eye has to increase in power to bring the object into focus.
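To make the two physiological cues concrete, here is a small sketch of the geometry they rely on. It makes three assumptions, none of them from the post:

- a 63 mm interpupillary distance (a typical adult value);
- the eyes are treated as points fixating a target straight ahead;
- accommodation is expressed in dioptres relative to focusing at optical infinity.

```python
import math

# Assumption: a 63 mm interpupillary distance (typical adult value, not from the post).
IPD_M = 0.063


def vergence_angle_deg(distance_m: float, ipd_m: float = IPD_M) -> float:
    """Angle between the two lines of sight when fixating a target straight
    ahead at distance_m (eyes treated as points, symmetric fixation)."""
    return math.degrees(2 * math.atan(ipd_m / (2 * distance_m)))


def accommodative_demand_dioptres(distance_m: float) -> float:
    """Extra lens power (dioptres) needed to focus at distance_m,
    relative to focusing at optical infinity."""
    return 1.0 / distance_m


for d in (0.2, 0.5, 1.0, 2.0, 6.0):
    print(f"{d:4.1f} m: vergence {vergence_angle_deg(d):5.2f} deg, "
          f"accommodation {accommodative_demand_dioptres(d):4.2f} D")
```

Both signals fall off roughly as 1/distance. Most of their range is therefore used up within reaching space, and beyond a couple of metres they change very little.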

The general consensus is that vergence is a relatively strong cue to distance, at least in reaching space (with a change of 10cm in the vergence-specified distance of the target equating to an average perceived change of 8.6cm: see Mon-Williams & Tresilian, 1999), whilst accommodation is a relatively weak cue to distance in reaching space (with a change of 10cm in the accommodation-specified distance of the target equating to an average perceived change of 2.7cm: see Fisher & Ciuffreda, 1988). The orthodox position is therefore that the visual system uses vergence to scale the distance, size, and even the depth, of objects in the visual scene (Foley, 1980).
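The ‘10cm change equates to a perceived change of 8.6cm’ figures can be read as the slope (gain) of perceived distance against cue-specified distance. The sketch below assumes that reading. The least-squares helper and the synthetic check data are illustrative only. They are not the analysis used in either study.

```python
def response_gain(specified_cm: list[float], perceived_cm: list[float]) -> float:
    """Least-squares slope of perceived distance against cue-specified
    distance: 1.0 means responses track the cue fully, 0.0 not at all."""
    n = len(specified_cm)
    if n != len(perceived_cm) or n < 2:
        raise ValueError("need two equal-length sequences of at least 2 points")
    mean_x = sum(specified_cm) / n
    mean_y = sum(perceived_cm) / n
    sxx = sum((x - mean_x) ** 2 for x in specified_cm)
    if sxx == 0:
        raise ValueError("specified distances must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(specified_cm, perceived_cm))
    return sxy / sxx


# The figures quoted above, read as slopes (cm perceived per 10 cm specified).
quoted = {"vergence (Mon-Williams & Tresilian, 1999)": 8.6,
          "accommodation (Fisher & Ciuffreda, 1988)": 2.7}
for cue, per_10cm in quoted.items():
    print(f"{cue}: gain {per_10cm / 10:.2f}")

# Made-up responses lying exactly on a line of slope 0.86, only to check
# that the estimator recovers the slope.
specified = [20.0, 30.0, 40.0, 50.0, 60.0]
perceived = [12.0 + 0.86 * x for x in specified]
print(round(response_gain(specified, perceived), 2))  # 0.86
```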

But the worry I explore in my book is that experiments on vergence as a distance cue typically place subjects in a dark room and then abruptly present targets as close as 20cm, which the subjects must then rapidly converge upon. Testing vergence in this way introduces a number of confounding cues:

1. Diplopia: Initial double vision of the stimulus, which is known to affect distance judgements (Morrison & Whiteside, 1984).

2. Change in the Retinal Image: The subject will see the stimulus change from double to single as they converge upon it.

3. Proprioceptive Sensation: Subjects will be directly aware of the physical sensation of their eyes suddenly rotating.

In my book I suggested that these confounding cues for vergence, as well as the equivalent confounding cues for accommodation (initial defocus blur in the stimulus before the eye has had a chance to accommodate), might be functioning as cognitive cues to distance, enabling subjects to judge distance based on (a) the maximum distance in the apparatus, minus (b) some distance depending on the amount of diplopia or blur initially seen.
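The suggested strategy can be written as: judged distance = maximum apparatus distance minus a term that grows with the initial diplopia. The sketch below is hypothetical throughout. Its 63 mm interpupillary distance, its assumption that the eyes start converged at the far end of the apparatus, and its 0.02 m-per-degree scaling are all made-up parameters chosen for illustration.

```python
import math

IPD_M = 0.063  # assumed interpupillary distance (typical adult value)


def vergence_deg(distance_m: float) -> float:
    """Symmetric vergence angle (degrees) for a target straight ahead."""
    return math.degrees(2 * math.atan(IPD_M / (2 * distance_m)))


def judged_distance_m(target_m: float, apparatus_max_m: float,
                      metres_per_degree: float = 0.02) -> float:
    """Hypothetical cognitive strategy: take the furthest distance the
    apparatus allows and subtract an amount proportional to the diplopia
    seen when the target first appears. The eyes are assumed to start
    converged at apparatus_max_m, so the initial double image equals the
    difference in vergence demand. The final eye position is never used."""
    initial_diplopia_deg = vergence_deg(target_m) - vergence_deg(apparatus_max_m)
    return max(0.0, apparatus_max_m - metres_per_degree * initial_diplopia_deg)


for target in (0.2, 0.3, 0.4, 0.5):
    print(f"target {target:.1f} m -> judged {judged_distance_m(target, 0.5):.3f} m")
```

Judged distance falls as the target gets nearer, even though the function never consults where the eyes end up. That is the sense in which such a strategy could mimic a genuine vergence effect.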

Since vergence is the most well-established distance cue in vision science, this was the most controversial claim in my book. But since the publication of the book I’ve had the chance to evaluate this hypothesis experimentally, and what we find is very interesting:

1. I replicated Mon-Williams & Tresilian (1999) whilst removing diplopia, and the 12 subjects I tested fell into two categories: 10 subjects with virtually no benefit from vergence (average perceived change of 0.75cm for every 10cm change in the vergence-specified distance) and 2 subjects with a very high correlation (average perceived change of 9.87cm for every 10cm change in the vergence-specified distance).

2. The two high-performing subjects seemed to be responding to the vergence-accommodation conflict in the apparatus: their eyes were focused at one distance but converged at a different distance (https://www.wired.com/2015/08/obscure-neuroscience-problem-thats-plaguing-vr/). I therefore ran a second experiment with this conflict largely eliminated. Overall average performance was very modest (1.6cm for every 10cm change in the vergence-specified distance). Even this modest performance doesn’t appear to be visual: subjects reported that they could feel their eyes tensing when the target was at close distances.
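For comparison with the 8.6cm figure, the two groups in the first experiment can be pooled into a single average. This is simple arithmetic on the group figures above rather than a separately reported result. It also assumes each group figure is a plain mean over that group’s subjects.

```python
# Group figures from experiment 1: (number of subjects, mean cm of perceived
# change per 10 cm change in vergence-specified distance).
experiment_1 = {"low responders": (10, 0.75), "high responders": (2, 9.87)}
experiment_2_mean = 1.6  # overall mean reported for experiment 2

n_total = sum(n for n, _ in experiment_1.values())
pooled_mean = sum(n * mean for n, mean in experiment_1.values()) / n_total

print(f"experiment 1, pooled: {pooled_mean:.2f} cm per 10 cm (gain {pooled_mean / 10:.3f})")
print(f"experiment 2:         {experiment_2_mean:.2f} cm per 10 cm (gain {experiment_2_mean / 10:.3f})")
```

The pooled value (about 2.3cm per 10cm) is well below 8.6cm. A single average of this kind would, however, hide the split between the 10 and the 2 subjects, which is why the groups are worth reporting separately.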

For those who are interested, this work is summarised in a poster: https://linton.vision/download/vr-poster.pdf (Frontiers in Virtual Reality, University of Rochester, June 2018).

2. Do We See Scale?

But if these experiments show that vergence and accommodation are ineffective cues to distance, then what else could be providing the distance information that the visual system needs to scale the scene? Both al-Haytham (c.1021) and Helmholtz (1866) appealed to the size of familiar objects, but Descartes (1637) argued that the size of familiar objects only functions as a cognitive cue to distance, and since Gogel (1969) this has generally been recognised to be the case. As Gogel explains:

‘The distance reports made under these conditions probably should be considered to be inferential rather than perceptual, i.e. more determined by the tendency to report that an object is distant because its perceived size is small than to report the distance actually perceived.’

Which leads to the following thought: What if all of our distance cues are either (a) ineffective (vergence, accommodation), (b) merely relative (angular size, diplopia), or (c) merely cognitive (familiar size)? In what sense could it really be claimed that we ‘see’ scale, rather than merely cognitively infer it post-perceptually?

3. Vision Without Scale

But how should we make sense of the idea of vision without scale? A good starting place is Emmert’s Law: Emmert (1881) observed that if we see a flash, experience an afterimage, and then look at a real-world scene, the afterimage is seen as being at the distance we fixate. The afterimage looks like a small patch up close when we fixate on our hands, and a large patch far away when we fixate on the horizon.
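Emmert’s observation follows from the size-distance relation. An afterimage has a fixed angular size on the retina, so the linear size it appears to have scales with the distance it is attributed to. The 2° angular size and the three distances below are hypothetical.

```python
import math


def linear_size_m(angular_size_deg: float, distance_m: float) -> float:
    """Physical extent of a patch of fixed angular size attributed to
    distance_m (size-distance relation: S = 2 D tan(theta / 2))."""
    return 2 * distance_m * math.tan(math.radians(angular_size_deg) / 2)


afterimage_deg = 2.0  # hypothetical angular size of the afterimage
for label, d in [("hands", 0.4), ("wall", 3.0), ("horizon", 1000.0)]:
    print(f"{label:8s} {d:7.1f} m -> {linear_size_m(afterimage_deg, d):.3f} m")
```

The calculation itself is neutral between the two readings of Emmert’s Law. It only says that apparent linear size and attributed distance change together.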

But under my account Emmert’s Law gets things the wrong way around: Instead of (1) seeing objects in the scene at various fixed distances, and then the plane of fixation (and therefore the afterimage) jumping back and forth in distance with fixation, we (2) always see the plane of fixation (and therefore the afterimage) as being at the same fixed phenomenal depth, and it is the objects in the scene that jump back and forth relative to this fixed phenomenal depth as we change our fixation. Such an account is equally consistent with Emmert’s observation.

But it would be a category mistake to try and attribute a single fixed physical distance to the fixed phenomenal depth of the fixation plane. Instead, the physical distance we attribute to the fixation plane is constantly changing on the basis of a number of cognitive cues: (1) the size of familiar objects, (2) even the size of unfamiliar objects (i.e. how much of the visual field they take up: see Tresilian, Mon-Williams, & Kelly, 1999), (3) the amount of blur in the visual field (as employed in tilt-shift miniaturisation: https://commons.wikimedia.org/wiki/File:Jodhpur_tilt_shift.jpg) and also (4) how flat the scene looks (recalling that binocular disparity falls off with distance).
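Cues (3) and (4) both have simple optical approximations that show why they carry scale information. The sketch assumes a 63 mm interpupillary distance and a 4 mm pupil, and uses two small-angle approximations:

- relative disparity ≈ IPD × depth / distance²;
- blur circle ≈ pupil diameter × defocus in dioptres.

```python
IPD_M = 0.063    # assumed interpupillary distance
PUPIL_M = 0.004  # assumed pupil diameter (4 mm)


def relative_disparity_rad(distance_m: float, depth_m: float,
                           ipd_m: float = IPD_M) -> float:
    """Small-angle approximation: disparity between two points depth_m apart
    in depth, near viewing distance distance_m, falls off as 1/distance^2."""
    return ipd_m * depth_m / distance_m ** 2


def blur_circle_rad(focus_m: float, object_m: float,
                    pupil_m: float = PUPIL_M) -> float:
    """Approximate angular diameter of the defocus blur circle: pupil
    diameter (m) times defocus (dioptres) gives radians."""
    return pupil_m * abs(1.0 / object_m - 1.0 / focus_m)


# Same 10 cm depth step, seen at 0.5 m and at 5 m.
print(relative_disparity_rad(0.5, 0.1) / relative_disparity_rad(5.0, 0.1))
# Object a third beyond the focal distance, in a tabletop scene and a street scene.
print(blur_circle_rad(0.3, 0.4) / blur_circle_rad(30.0, 40.0))
```

Both ratios come out at about 100, but for different reasons:

- For a fixed depth step, disparity falls with the square of distance, so ten times further gives a hundred times less.
- For an object a fixed proportion beyond the focal distance, blur falls in proportion to distance, so a hundred times further gives a hundred times less.

Strong disparities or steep blur gradients are therefore consistent with a small, near scene, which is what tilt-shift miniaturisation exploits.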

But does this theory have any intuitive appeal? I think so. When we watch a movie, the movie is always at the same fixed phenomenal depth: the screen never appears to move towards us or away from us. And yet the fact that the movie is always at the same fixed phenomenal depth in no way impedes our enjoyment of the film: the movie can transition from wide-panning shots of a cityscape to close-ups of a face without this transition being jarring. Attributing a variety of different scales to scenes viewed at a single fixed phenomenal depth is therefore something that seems natural to humans.

Where it does become a problem is if we have to integrate vision with other senses (e.g. touch) that do have a sense of scale. This might help to explain why it is relatively easy to break the link between tactile location and visual location in the rubber hand illusion.

4. Conclusion

I want to thank John Schwenkler for giving me this opportunity to explore visual space and the perception / cognition divide. I hope these posts have convinced you that only by redrawing the boundary between perception and cognition can we hope to resolve the tensions and inconsistencies in visual space.

References

al-Haytham, Ibn. (c.1021). Kitab Al-Manazir (Book of Optics). In A. I. Sabra (ed.), The Optics of Ibn al-Haytham (Kuwait: National Council for Culture, Arts and Letters, 1983).

Descartes, R. (1637). ‘Optics.’ In Cottingham, Stoothoff, & Murdoch (eds.), The Philosophical Writings of Descartes, Vol. 1 (Cambridge: Cambridge University Press, 1985).

Emmert, E. (1881). ‘Größenverhältnisse der Nachbilder.’ Klinische Monatsblätter für Augenheilkunde und für augenärztliche Fortbildung, 19, 443-450.

Fisher, S. K., & Ciuffreda, K. J. (1988). ‘Accommodation and apparent distance.’ Perception, 17(5), 609-621.

Foley, J. M. (1980). ‘Binocular distance perception.’ Psychological Review, 87(5), 411-434.

Gogel, W. C. (1969). ‘The effect of object familiarity on the perception of size and distance.’ Quarterly Journal of Experimental Psychology, 21(3), 239-247.

Helmholtz, H. von (1866). Handbook of Physiological Optics, Vol. 3 (Leipzig: Leopold Voss).

Kepler, J. (1604). Optics (Santa Fe, NM: Green Lion Books, 2000).

Mon-Williams, M., & Tresilian, J. R. (1999). ‘Some recent studies on the extraretinal contribution to distance perception.’ Perception, 28(2), 167-181.

Morrison, J. D., & Whiteside, T. C. (1984). ‘Binocular cues in the perception of distance of a point source of light.’ Perception, 13(5), 555-566.

Tresilian, J. R., Mon-Williams, M., & Kelly, B. M. (1999). ‘Increasing confidence in vergence as a cue to distance.’ Proceedings of the Royal Society B, 266(1414), 39-44.

Your “movie watching” scenario is a good one, as it demonstrates the sophistication of the human visual system. The fact that we can correctly interpret the scale of objects both in the movie AND surrounding the TV screen (not in a movie theater, where it is usually too dark to see much besides the movie) really demonstrates your thesis.

However, the brain is even more flexible than that might indicate. It uses all the information that our sense apparatus can differentiate in order to settle on scale and other attributes (e.g., color). It seems very likely that this is accomplished by some sort of voting mechanism: scale information is taken from multiple sources, combined with our expectations, and the strongest, most consistent interpretation wins. Therefore, no single source of scale information wins all the time.
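One standard way to formalise combining several scale sources with expectations is reliability-weighted (inverse-variance) cue combination. This is a swapped-in model, not necessarily the ‘voting’ mechanism described in the comment. It averages rather than picking a winner, and treating expectations as one more Gaussian source is an assumption. All names and numbers below are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ScaleSource:
    name: str
    distance_m: float  # distance (and hence scale) this source suggests
    sd_m: float        # uncertainty of that suggestion (standard deviation)


def combine(sources: list[ScaleSource]) -> float:
    """Inverse-variance weighted average: every source contributes, and the
    more reliable ones contribute more, so none wins all the time."""
    weights = [1.0 / s.sd_m ** 2 for s in sources]
    return sum(w * s.distance_m for w, s in zip(weights, sources)) / sum(weights)


# Hypothetical numbers: an expectation (prior) and two cues that disagree.
prior = ScaleSource("expectation", 2.0, 2.0)
perspective = ScaleSource("linear perspective", 6.0, 1.0)
for familiar_sd in (0.5, 3.0):
    familiar = ScaleSource("familiar size", 3.0, familiar_sd)
    print(familiar_sd, round(combine([prior, perspective, familiar]), 2))
```

With a reliable familiar-size cue, the estimate stays near 3.5 m. When that cue is weak, perspective pulls the estimate to about 5 m, so which source dominates depends on context.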

Thanks Paul, I really appreciate your comment! Yes, you are right that no single source of scale information wins all the time. A good illustration of this is a well-constructed Ames Room with false perspective:

https://en.wikipedia.org/wiki/Ames_room

It is startling how easily familiar size, which is the dominant cue to size in many contexts, is overridden by the false linear perspective in the scene, so that we see children towering over adults even though we know this cannot be the case:

https://www.physics.umd.edu/deptinfo/facilities/lecdem/services/avmats/slides/thumbs.php?title=O4
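The Ames Room can be read through the same size-distance relation. The false perspective makes the near and far corners appear equidistant. People at different true distances are therefore assigned the same apparent distance, and their angular sizes are read as differences in height. The room dimensions and heights below are hypothetical, and the eye is idealised as level with each person’s mid-height.

```python
import math


def perceived_height_m(true_height_m: float, true_distance_m: float,
                       assumed_distance_m: float) -> float:
    """Height implied by a person's angular size when the viewer attributes
    that angle to assumed_distance_m (eye level with the person's middle)."""
    half_angle = math.atan((true_height_m / 2) / true_distance_m)  # fixed by the retinal image
    return 2 * assumed_distance_m * math.tan(half_angle)          # size at the assumed distance


# Hypothetical Ames room: the near corner is 3 m away, the far corner 6 m,
# but the false perspective makes both appear to be 4.5 m away.
child = perceived_height_m(1.2, 3.0, 4.5)
adult = perceived_height_m(1.8, 6.0, 4.5)
print(f"child looks {child:.2f} m tall, adult looks {adult:.2f} m tall")
```

The child, though shorter, ends up with the larger apparent height because they are standing at half the distance.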

So you’re right, scale has to be context specific. The reason I focused so heavily on vergence in my post is that it promised to be a genuinely visual cue to scale, unlike e.g. familiar size which appears to operate post-perceptually. Once we discount vergence as a distance cue, it is far from clear that there is any cue to scale that operates at the level of vision rather than at the level of our cognitive understanding of the scene.

Thanks again for your comment, really appreciate it!

Thank you for these posts, which I’ve read with great interest, trying to understand as much as I could, in particular to better understand my own condition.

In fact, I was born with strabismus, and although I had surgery to straighten my eyes, my brain never acquired 3D vision.

Sometimes I wonder what I’m missing, and how much of a handicap this is. I live a totally normal life and there is nothing others do that I can’t do (apart from enjoying a 3D movie, and I haven’t tried VR yet), except perhaps catching a flying object. Even that could depend on my motor skills (reflexes and coordination), which aren’t very good to begin with.

What I took from your writings was that there’s no clear answer, since we don’t really know what creates 3D vision.

I’d like to know more about this topic, and would ask you to tell me about your experience with people with non-stereoscopic vision and this ‘handicap’, and possibly to suggest some accessible reading about it. Thank you!

Thank you Matteo for your wonderful question.

1. In terms of the extent to which the absence of binocular stereopsis can be a deficit, you’re quite right that for the vast majority of everyday tasks the effect appears to be relatively minimal. Indeed, my recollection is that John P. McIntire at the US Air Force Research Laboratory had a study in which one of the participants only found out they were stereo-blind during screening. I haven’t worked with strabismic subjects myself, but I work alongside Simon Grant at City, University of London, and his 2006 article with Dean Melmoth is still very much the go-to review of the literature:

https://www.researchgate.net/publication/7446083_Advantages_of_binocular_vision_for_the_control_of_reaching_and_grasping

2. In terms of what binocular stereopsis is for, you’re quite right: that’s an open question. Bela Julesz argued that its purpose was to break camouflage for a stationary observer (think of a predator hiding in the undergrowth). But other influential accounts, such as James Gibson’s, assigned little role to binocular stereopsis. Gibson was influenced by the fact that Wiley Post, the first person to fly solo around the world, was blind in one eye. There are also a number of world-class athletes, such as Wesley Walker, who lack stereo-vision:

https://www.nytimes.com/1983/09/13/science/visual-cues-compensate-for-blindness-in-one-eye.html

Jenny Read has an excellent discussion of what stereoscopic vision is good for:

https://www.jennyreadresearch.com/download/basic_science/stereopsis/Read2015_SDA.pdf

3. In terms of accessible reading, I think the two accounts that might be of most interest are Sue Barry’s book ‘Fixing My Gaze’:

https://www.amazon.com/Fixing-My-Gaze-Scientists-Dimensions/dp/0465020739/

And Bruce Bridgeman’s account of gaining binocular stereopsis from watching a 3D movie:

https://bsandrew.blogspot.com/2012_03_01_archive.html

Both are accounts by vision scientists who gained binocular stereopsis later in life. The emphasis in Barry’s account appears to be more experiential (on the way things look) than on the absence of binocular stereopsis as a performance deficit.

I hope you enjoy!