I want to thank John Schwenkler for inviting me to blog about my new book The Perception and Cognition of Visual Space (Palgrave, 2018). In this first post I outline the two major concerns of 3D vision: (1) inconstancy, and (2) inconsistency, and suggest that inconsistency can be avoided by reformulating the perception/cognition boundary.

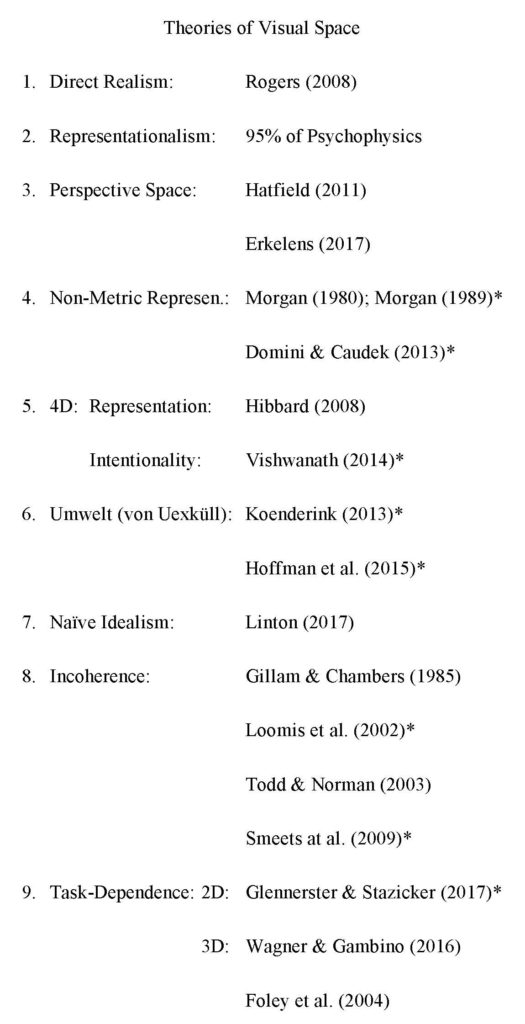

1. Theories of Visual Space

3D vision is going through a period of transition. In the space of 20 years it has gone from being a central concern of predictive models of the brain (Knill & Richards, 1996) to not being mentioned at leading conferences (https://sites.google.com/view/tpbw2018/). If you think of 3D vision in Representational terms (as the extraction and representation of the 3D properties of the physical environment), it would appear that most of the interesting questions have been resolved and the discipline is in decline.

And yet 3D vision remains plagued by two fundamental concerns:

- Inconstancy: The 3D properties of an object or scene can vary depending on (a) the distance it is viewed from, and (b) whether it is viewed with one eye or two.

- Inconsistency: The 3D properties of an object or scene can vary depending on the question being asked or the task being performed.

Representational accounts struggle to fully address these concerns, and so in recent years a number of alternative accounts of 3D vision have emerged:

Apart from Direct Realism and Representationalism, the common theme is a shift away from the veridical representation of the 3D properties of the physical environment (see Albertazzi et al., 2010; Wagemans, 2015). In the case of the accounts marked with a *, 3D vision is refined for action in, rather than knowledge of, the environment (reflecting a more general ‘pragmatic turn’ in cognitive science: see Engel et al., 2013; Engel et al., 2016).

2. The Perception/Cognition Divide

In this initial post I want to use the problem of inconsistency to introduce the distinction between ‘perception’ and ‘cognition’ that runs through my book. This distinction is not one that vision science has traditionally embraced. There are three reasons for this:

- Historical: Under behaviourism, perception was equated with ‘discrimination’ (see Miller, 2003). Although the cognitive revolution of the 1950s emphasised the importance of perception, it nonetheless remained tied to cognition under the catch-all term ‘perceptual judgement’. A commitment to viewing ‘the organism’ holistically (Goldstein, 1934) has also continued to exert an influence.

- Practical: It is extraordinarily difficult to isolate our perceptual experiences from our judgements about them. Experimenters can either rely on (a) the subject’s own evaluation of the stimulus or (b) a behavioural measure (such as reaching or grasping). But both subjective evaluations and behaviour can be affected by cognitive as well as perceptual influences.

- Theoretical: Finally, vision scientists have self-consciously avoided the question. For instance, Marr (1982) was only concerned with the true judgements we could extract about the environment, and consequently relied heavily upon computer vision where there is simply no distinction between perception and cognition (computer ‘vision’ simply is the fact that a computer ‘judges’ x).

It is true that in recent years vision science has begun to take the distinction between perception and cognition more seriously, at least in two contexts:

- Whether we can distinguish the decision strategies adopted by subjects from their actual perception: see Witt, Taylor, Sugovic, & Wixted (2015).

- Whether there is any meaningful distinction between perception and our beliefs, desires, and emotions: see Firestone & Scholl (2016).

But in the first chapter of my book (which is freely available: https://linton.vision/download/ch1uncorrected.pdf) I argue that such treatments don’t go far enough: First, I argue that there is a distinct level of cognition that is post-perceptual but pre-decisional, and so cannot be captured by the false dichotomy between ‘perception’ and ‘decision strategies’. Second, I argue that the distinction between perception and this species of cognition is surprisingly low-level (much lower than the ‘top-down’ influences Firestone & Scholl have in mind) and this leads us to question the fundamental building-blocks of vision itself, such as pictorial cues and cue integration.

Underpinning my account is the belief that we cannot distinguish ‘perception’ and ‘cognition’ in the abstract. Asking whether we ‘see’ higher-level properties such as causation, intention, or morality is meaningless until we have a proper litmus-test by which to understand just how low-level cognition can be. This can only really be achieved in the context of 3D vision, where we can tease out the lowest-level conflicts between what we think we see and what we actually see.

3. Inconsistency

Readers of this blog will be familiar with the idea that our depth judgements are inconsistent (https://philosophyofbrains.com/2017/12/12/new-action-based-theory-spatial-perception.aspx). I want to consider three examples of this inconsistency:

- Todd & Norman (2003): Subjects were asked to judge the depth of a rotating surface defined by (a) monocular motion (motion viewed with one eye), (b) binocular disparity (the difference in the images between the two eyes) or (c) the combination of binocular disparity and motion (disparity and motion viewed with two eyes). They ranked the depth in the monocular motion display as greater than the depth in the combined display. But when subjects were asked to close one eye whilst viewing the combined display (turning it back into the monocular motion display) they reported a reduction in depth. Todd & Norman rightly recognised that this demonstrated a conflict between our perceptual experience (combined display > monocular motion) and our perceptual judgement (monocular motion > combined display). They explain it as a consequence of conscious decision strategies; a reflection of the fact that subjects had to convert the perceived depth into metric measurements. But this concern applies equally to both of the displays, so there is no reason why it should have inverted the depth ordering between them.

- González, Allison, Ono, & Vinnikov (2010): Subjects were asked to judge the impression of looming from (a) a dot whose looming motion was specified by binocular disparity, and (b) a background texture whose looming motion was specified by a rapid increase in size. When the two cues were combined in the same display, subjects (a) judged the looming from binocular disparity to be equal in magnitude to the looming from the size cue (‘they appeared to move a similar amount in depth’), and yet (b) their visual experience was that the motion of the dot could be seen distinctly from, and in front of, the motion of the texture.

- Doorschot, Kappers, & Koenderink (2001): Subjects were asked to judge how varying (a) shading and (b) binocular disparity affects the perceived depth in a stereo-photograph. Doorschot et al. found that 94.5% of the depth was perceived no matter how shading and disparity were varied, and that binocular disparity could only account for 1.2% of the change in depth. But as Doorschot et al. recognise, the difference in depth from closing one eye when viewing a stereo-photograph seems much more than 1.2%. Instead, it seems to transform the image.

How should we explain the conflicting reports in each of these studies? One common suggestion (see Norman et al., 2005; Wagner & Gambino, 2016; Glennerster & Stazicker, 2017) is that we ought to give equal weight to each of these conflicting reports, and so we have to give up on the idea that 3D vision is independent of our task or motivation.

By contrast, I argue that in each of these experiments what we find is a conflict between (a) our perceptual judgements (our impression of depth magnitude) and (b) our actual visual experience (as revealed by a simple comparison: e.g. closing one eye in Todd & Norman, 2003 and Doorschot et al., 2001, or attending to the difference in depth between the dot and the texture in González et al., 2010). The only way to account for this conflict between visual experience and perceptual judgement is to posit the existence of a low-level layer of post-perceptual (i.e. cognitive) processing that is automatic (we don’t have to do anything), unconscious (we are unaware of it), and involuntary (we cannot overrule it).

The purpose of my book is to explore experimental strategies that might tease apart our genuine visual experience from this post-perceptual processing, and I argue that the unconscious inferences attributed to 3D vision by a long tradition stretching from al-Haytham (c.1028-38) to Cue Integration (Trommershäuser, Körding, & Landy, 2011) are better thought of in terms of automatic post-perceptual processing.

References

Albertazzi, L., van Tonder, G. J., & Vishwanath, D. (2010). Perception Beyond Inference (Cambridge, MA: MIT Press).

al-Haytham. (c.1028–1038). Book of Optics. In Smith, A. M. (ed.) (2001). Transactions of the American Philosophical Society, 91(5).

Domini, F., & Caudek, C. (2013). ‘Perception and action without veridical metric reconstruction: an affine approach.’ In Dickinson, S. J., & Pizlo, Z. (eds.), Shape Perception in Human and Computer Vision (London: Springer).

Doorschot, P. C. A., Kappers, A. M. L., & Koenderink, J. J. (2001). ‘The combined influence of binocular disparity and shading on pictorial shape.’ Perception & Psychophysics, 63(6), 1038-1047.

Engel, A. K., Maye, A., Kurthen, M., & König, P. (2013). ‘Where’s the action? The pragmatic turn in cognitive science.’ Trends in Cognitive Sciences, 17(5), 202-9.

Engel, A. K., Friston, K. J., & Kragic, D. (2016). The Pragmatic Turn (Cambridge, MA: MIT Press).

Erkelens, C. J. (2017). ‘Perspective Space as a Model for Distance and Size Perception.’ i-Perception, Nov-Dec 2017.

Firestone, C., & Scholl, B. J. (2016). ‘Cognition does not affect perception: Evaluating the evidence for “top-down” effects.’ Behavioral & Brain Sciences, 39, e229.

Foley, J. M., Ribeiro-Filho, N. P., & Da Silva, J. A. (2004). ‘Visual perception of extent and the geometry of visual space.’ Vision Research, 44, 147-156.

Glennerster, A., & Stazicker, J. (2017). ‘Perception and action without 3D coordinate frames.’ PhilSci Archive: https://philsci-archive.pitt.edu/13494/

Gillam, B., & Chambers, D. (1985). ‘Size and position are incongruous: measurements on the Müller-Lyer figure.’ Perception & Psychophysics, 37(6), 549-556.

Goldstein, K. (1934). The Organism: A Holistic Approach to Biology Derived from Pathological Data (New York: Zone Books).

González, E. G., Allison, R. S., Ono, H., & Vinnikov, M. (2010). ‘Cue conflict between disparity change and looming in the perception of motion in depth.’ Vision Research, 50(2), 136-43.

Hatfield, G. (2011). ‘Philosophy of Perception and the Phenomenology of Visual Space.’ Philosophic Exchange, 42(1), 3.

Hibbard, P. B. (2008). ‘Can appearance be so deceptive? Representationalism and binocular vision.’ Spatial Vision, 21(6), 549-559.

Hoffman, D. D., Singh, M., & Prakash, C. (2015). ‘The interface theory of perception.’ Psychonomic Bulletin & Review, 22, 1480-1506.

Knill, D., & Richards, W. (1996). Perception as Bayesian Inference (Cambridge: Cambridge University Press).

Koenderink, J. J. (2013). ‘World, environment, Umwelt, and innerworld: A biological perspective on visual awareness.’ Human Vision and Electronic Imaging XVIII, 8651.

Linton, P. (2017). The Perception and Cognition of Visual Space (London: Palgrave).

Loomis, J. M., Philbeck, J. W., & Zahorik, P. (2002). ‘Dissociation between location and shape in visual space.’ Journal of Experimental Psychology: Human Perception and Performance, 28(5), 1202-1212.

Marr, D. (1982). Vision: A Computational Investigation into the Human Representation and Processing of Visual Information (Cambridge, MA: MIT Press).

Miller, G. A. (2003). ‘The cognitive revolution in historical perspective.’ Trends in Cognitive Sciences, 7(3), 141-144.

Morgan, M. J. (1980). ‘Phenomenal Space.’ In Josephson, B., & Ramachandran, V. (eds.), Consciousness and the Physical World: https://philpapers.org/archive/JOSCAT-2.pdf

Morgan, M. J. (1989). ‘Vision of Solid Objects.’ Nature, 339, 101-103.

Norman, J. F., Crabtree, C. E., Clayton, A. M., & Norman, H. F. (2005). ‘The perception of distances and spatial relationships in natural outdoor environments.’ Perception, 34, 1315-1324.

Rogers, B. (2018). Perception: A Very Short Introduction (Oxford: Oxford University Press).

Smeets, J. B. J., Sousa, R., & Brenner, E. (2009). ‘Illusions can warp visual space.’ Perception, 38, 1467-1480.

Todd, J. T., & Norman, J. F. (2003). ‘The visual perception of 3-D shape from multiple cues: Are observers capable of perceiving metric structure?’ Perception & Psychophysics, 65(1), 31-47.

Trommershäuser, J., Körding, K., & Landy, M. S. (eds.) (2011). Sensory Cue Integration (Oxford: Oxford University Press).

Vishwanath, D. (2014). ‘Towards a New Theory of Stereopsis.’ Psychological Review, 121(2), 151-78.

Wagemans, J. (2015). ‘Alternatives to Veridicalism in Vision Theory’: https://www.gestaltrevision.be/pdfs/jw_lectures/ECVP_2015_veridicality_Wagemans.pdf

Wagner, M., & Gambino, A. J. (2016). ‘Variations in the Anisotropy and Affine Structure of Visual Space: A Geometry of Visibles with a Third Dimension.’ Topoi, 35(2), 583-598.

Witt, J. K., Taylor, J. E., Sugovic, M., & Wixted, J. T. (2015). ‘Signal Detection Measures Cannot Distinguish Perceptual Biases from Response Biases.’ Perception, 44(3), 289-300.

Thank you for the following question via email. I’m posting the answer here as it may be of general interest:

Question: “The revelation is that you argue that the post-perceptual, cognitive processes engaged are automatic, unconscious & involuntary. But can any of those last 3 processes be said to be cognitive? My working definition would be that the word assumes conscious access and an attentional ‘off’ button.”

Answer: I think there are two ways of addressing this concern:

1. We typically think there is a meaningful boundary between ‘perception’ and ‘cognition’. One approach, as you quite rightly identify, is to define ‘cognition’, and then regard anything that doesn’t live up to that criterion as ‘perception’. An alternative, which is my approach, is to see if a process can be accounted for within perception. If it cannot, and appears to involve an additional, further level of processing, then it has to fall on the other side of the boundary.

But this isn’t an arbitrary distinction. To give an example of why this matters: Hillis et al. (2002) (https://science.sciencemag.org/content/298/5598/1627.long) find that estimates of the slant of a surface from touch and vision are not fused together: you can attend to one, or you can attend to the other. And yet, when asked to estimate surface slant, subjects will typically provide an average between the two. Now the question is: where does this average exist? It doesn’t exist in our vision, which reports the visual estimate. Nor does it exist in our touch, which reports the touch estimate. So there doesn’t seem to be any way to fit it into our perception. Instead, it appears to be an understanding of the stimulus that we could have come to consciously, but in fact come to unconsciously.

2. In a sense the argument is easier than that because the literature increasingly attributes very thick cognitive concepts to the human visual system, for instance “causation” and “other minds”. A notable example is Cavanagh (2011) on “visual cognition”: https://www.sciencedirect.com/science/article/pii/S0042698911000381?via%3Dihub, who is one of the targets of my Ch1 (see pp.12-15). For instance, he concludes:

“…the unconscious inferences of the visual system may include models of goals of others as well as some version of the rules of physics. If a ‘Theory of Mind’ could be shown to be independently resident in the visual system, it would be a sign that our visual systems, on their own, rank with the most advanced species in cognitive evolution.”

So a great deal of the literature is already attributing cognition to the human visual system (there are more modest elements in the earlier literature, e.g. al-Haytham, c.1028; Helmholtz, 1866; Gregory, 1966; Rock, 1983), so my job is to convince them that although these cognitively-seeming processes do go on, they are best thought of as a distinct 3rd category, independent of perception (that precedes them) and conscious deliberation (that follows them).

Thanks for these posts Paul. You provide a lot of material to discuss and fortunately there is a lot that I agree with including some of your more unorthodox views but there are also some quite serious problems. I’ll focus on just one here and maybe raise some more if we make any headway.

You claim that depending on our POV etc. “The 3D properties of an object or scene can vary…”. Let me try to bring out the weakness in this claim by way of a couple of absurd examples that follow from the logic of your account. According to your view, when my colleague pops his head around the door, I see a disembodied head, and when I see my son from the side, I see a one eyed boy.

The inconstancy claim is a misunderstanding stemming from the fact that we depiction-users can represent 3D space on a 2D plane. Let me put it another way for emphasis. When we say that a distant barn “looks small”, the “looks small” is obviously not a perceptual claim about the size of the barn. It’s a depiction-user’s claim about how the barn could be viably represented according to techniques with which we are familiar. Without those techniques to supplement our discursive repertoire there could be no such claims about distant things appearing small, dry things looking wet, straight things looking bent and films looking like they contain moving objects etc.

Let’s take another instance where your position raises obvious absurdities. Let’s say that I hold up a Rubik’s cube and turn it around. According to your account, the properties of the cube vary. But this is manifestly false. The properties remain constant and I can feel this constancy with my hands. If your account were true, there would be a worrying disparity between the varying shapes presented to my vision and the constancy of shape presented to my touch but there isn’t. I have a unified perception of an object of constant size and shape.

Thanks ever so much for these comments Jim, I really appreciate them! And I’m glad that there’s a lot we agree on!

1. I guess I don’t find your absurd scenarios absurd. Without overemphasising the analogy, much like a computer rendering a virtual scene, I would argue that the human brain has to render our perceptual scene. And this, indeed, is what is rendered: a disembodied head, or a one eyed boy. Your examples refer to amodal completion, which you are entitled to refer to as ‘seeing’, but the fact they are referred to in the literature as ‘amodal’ points to the suggestion that others wouldn’t regard them as such.

2. On whether we see distant objects as small, I think we do. Oliver Sacks in ‘The Mind’s Eye’ (2010) refers us to a passage from Colin Turnbull’s ‘The Forest People’ (1961), p.251: https://archive.org/stream/forestpeople00turn/forestpeople00turn_djvu.txt This is of course just an anecdote, but I think it is illustrative:

“Then he saw the buffalo, still grazing lazily several miles away, far down below. He turned to me and said, ‘What insects are those?’”

“At first I hardly understood; then I realized that in the forest the range of vision is so limited that there is no great need to make an automatic allowance for distance when judging size. Out here in the plains, however, Kenge was looking for the first time over apparently unending miles of unfamiliar grasslands, with not a tree worth the name to give him any basis for comparison. The same thing happened later on when I pointed out a boat in the middle of the lake. It was a large fishing boat with a number of people in it but Kenge at first refused to believe this. He thought it was a floating piece of wood.”

3. I think familiarity has a role to play here in shaping your grasping expectations. But Campagnoli, Croom & Domini (2017) is an interesting paper on the kinds of distortions we are liable to experience in grasping unfamiliar objects (in so far as they manipulate the separation between the bars being grasped for): https://jov.arvojournals.org/article.aspx?articleid=2650868

Thank you ever so much Jim, I really appreciate it!!

Thanks Paul, I half suspected that the force of my comments about my disembodied colleague and one-eyed son would be regarded as being of little consequence for the inconstancy thesis. But my point is that these examples follow as a logical consequence of the claim that the properties of things vary as we move around them etc. We know this isn’t true. You stack the argument in your favour by omitting the standard account which is usually described in the literature as object constancy or property constancy.

Sacks describes an unequivocal instance of perceptual failure, not perceptual success. So it is false to suggest that the question “What are those insects?” indicates an instance of seeing insects. Quite clearly it doesn’t.

I started writing replies to your other interesting remarks but I think we probably need to stick with the main point. For me most of the problems in your approach arise from your tendency to regard the reports that people give of the things they look at as accounts of perceptual achievements. So when someone says that a person viewed from the side looks like a person with one eye, you treat this as a perceptual claim. Worse still you claim that a person viewed from the side has the “property” of being one eyed. That’s both silly and misleading.

Hi Jim, thank you ever so much for these comments!

You’re quite right that I downplay the standard accounts of object constancy, such as 3D shape constancy, because I genuinely believe that 3D objects do change their shape with viewing conditions. I argue that is the only way to make sense of Johnston (1991)’s finding that the 3D shape of a cylinder is liable to vary with distance:

https://linkinghub.elsevier.com/retrieve/pii/0042-6989(91)90056-B

Or Thouless (1931)’s finding that the 3D shape of objects is liable to vary depending on whether they are viewed with one eye or two:

https://onlinelibrary.wiley.com/doi/abs/10.1111/j.2044-8295.1931.tb00597.x

This is the central theme of my 4th post:

https://philosophyofbrains.com/2018/06/28/perceptual-idealism-and-phenomenal-geometry.aspx

I don’t discuss size constancy a great deal, except to argue that it is merely a cognitive effect. See the discussion of the cars in part 3 of my 2nd post:

https://philosophyofbrains.com/2018/06/26/perceptual-integration-and-visual-illusions.aspx

I really appreciate your further comments. I guess part of my project is to question whether thinking about the properties of visual objects as constantly changing really is silly and misleading. This relates back to my discussion in my 4th post that I don’t believe that vision makes any claims about the physical world, which may well be constant.

“that is the only way to make sense of Johnston (1991)’s finding that the 3D shape of a cylinder is liable to vary with distance”

Really? Are you so sure that there aren’t other more parsimonious ways to make sense of Johnston’s finding and many others like it? Here’s one. People know very well that things don’t alter in shape and size as they get further away but they also know that the further something is the less we see of it. Animators exploit this understanding. When they produce films of objects moving into the distance they have to make the drawings gradually diminish in scale to produce the illusion of an object receding into the distance.

Once again, your account has absurd and logically unacceptable consequences. If an object recedes so far into the distance that we can no longer see it, on your account it changes shape so much that it actually vanishes out of existence. On everybody else’s account, we simply can’t see it any more. Your view pays an unacceptably high price in order simply to deal with—much less to explain—compromised perceptions of the world.