Expecting Ourselves?

What does it take to be a creature that has some sense of itself as a material being, with its own concerns, encountering a structured and meaningful world? Such a being feels (from the inside, as it were) like a sensing, feeling, knowing thing, and a locus of ‘mattering’. In Surfing Uncertainty I describe (see previous posts) an emerging bundle of research programs in cognitive and computational neuroscience that – and I say this with all due caution, and a full measure of dread and trepidation – may begin to suggest a clue. I don’t think the clue replaces or challenges the other clues emerging in contemporary neuroscience. But it may be another step along the road.

Seeing Things

To creep up on this, we need to add one final piece to the jigsaw of the predictive brain. That piece is the variable weighting of the prediction error signal. To get the flavor, consider the familiar (but actually rather surprising) ability of most humans to, on demand, see faces in the clouds. To replicate this, a multi-level prediction machine reduces the weighting on a select subset of ‘bottom-up’ sensory prediction errors. This is equivalent to increasing the weighting on your own top-down predictions (here, predictions of seeing face-like forms). By thus varying the balance between top-down prediction and the incoming sensory signal, you are enabled to ‘see’ face-forms despite the lack of precise face-information in the input stream.

This mechanism (the so-called ‘precision-weighting’ of prediction error) provides a flexible means to vary the balance between top-down prediction and incoming sensory evidence, at every level of the processing regime. Implemented by multiple means in the brain (such as slow dopaminergic modulation, and faster time-locked synchronies between neuronal populations), flexible precision-weighting renders these architectures spectacularly fluid and context-responsive. Much of Surfing Uncertainty is devoted to this mechanism, and (as previously mentioned) to its potential role in explaining a wide range of phenomena, from ordinary perception in the presence of noise, to delusions, hallucinations, and the distinctive cognitive and experiential profiles of a variety of non-neurotypical (for example, autistic) agents.
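The faces-in-the-clouds case can be caricatured, under heavy simplifying assumptions, as a single level of Gaussian belief updating: the prediction error is multiplied by a gain set by the relative precision of the sensory evidence and the top-down prediction. The scalar "face-likeness" feature, the function name `precision_weighted_estimate`, and all the numbers below are hypothetical illustrations, not anything specified in the book; real predictive architectures run this balancing act across many levels at once.

```python
def precision_weighted_estimate(prior_mean, prior_precision, observation, sensory_precision):
    """Combine a top-down prediction with a sensory sample (1-D Gaussian case).

    The prediction error (observation - prior_mean) is scaled by the relative
    precision of the sensory signal before it updates the estimate.
    """
    prediction_error = observation - prior_mean
    gain = sensory_precision / (sensory_precision + prior_precision)
    return prior_mean + gain * prediction_error


# Hypothetical scalar "face-likeness" feature: the top-down prediction
# expects a face (1.0), while the cloud input carries little face evidence (0.2).
prior_face, cloud_input = 1.0, 0.2

# Ordinary viewing: sensory prediction errors carry high precision.
normal = precision_weighted_estimate(prior_face, 1.0, cloud_input, 4.0)
# "Seeing faces on demand": down-weight the bottom-up prediction errors.
face_seeking = precision_weighted_estimate(prior_face, 1.0, cloud_input, 0.25)

print(f"normal viewing: {normal:.2f}")      # normal viewing: 0.36
print(f"face-seeking:   {face_seeking:.2f}")  # face-seeking:   0.84
```

Because the gain depends only on the ratio of the two precisions, lowering sensory precision and raising the precision of the top-down prediction have the same effect here: `precision_weighted_estimate(1.0, 4.0, 0.2, 1.0)` also returns roughly 0.84.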

Sentient Robotics (1.01 beta)

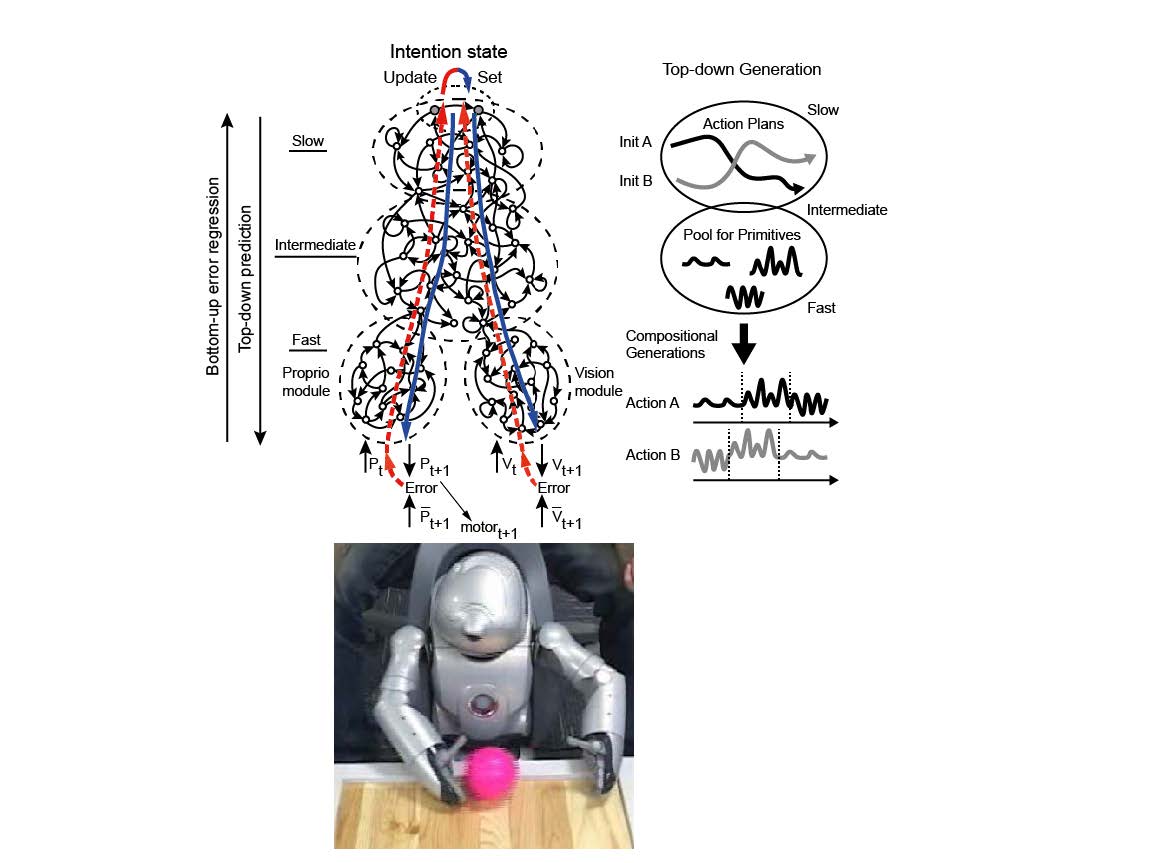

Imagine next that you are the lucky bearer of a predictive (precision-modulated) brain. Your whirring neural engines of behavior and response, if that vision is on track, are dedicated to nothing so much as the progressive reduction of salient (high-precision) forms of prediction error. To learn about the world, you learn to predict the changing plays of energy that bombard your sensory surfaces, and you lock onto worldly sources of patterns in those sensory stimulations (sources such as dogs, hurricanes, and the mental states of other agents) as a result. To move around the world you predict the plays of sensory (especially proprioceptive) stimulation that would result were you to move thus and so, and reduce error with regard to those predictions by actually moving thus and so. And this rolling economy is constantly modulated by changing estimations of your own sensory uncertainty, as reflected in the changing precision-weighting of select prediction errors. You now exist as a kind of unified sensorimotor swirl, self-organizing so as constantly to reduce your own (high-precision) prediction errors. This is already a highly robotics-friendly vision. For the prediction task, running in an active embodied agent, installs a suite of knowledge that is firmly rooted in its own history of sensorimotor engagements, and that is thus especially apt for grounded sensorimotor control.
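The two routes just described, changing predictions to fit the sensed world and moving so that the sensed world fits the predictions, can be contrasted in a deliberately minimal one-dimensional sketch. It assumes a single proprioceptive variable, a fixed update rate, and simple gradient-style updates; the function `reduce_error` and its parameters are hypothetical. The two routes are separated here only for clarity, whereas the picture above has them running together in one precision-modulated loop.

```python
def reduce_error(belief, arm, sensory_precision, steps=200, rate=0.1, act=False):
    """Toy 1-D loop that reduces the proprioceptive prediction error arm - belief.

    act=False: perception only, so the belief moves toward the sensed arm position.
    act=True:  action only, so the arm moves toward the predicted position.
    """
    for _ in range(steps):
        error = arm - belief                       # proprioceptive prediction error
        weighted = rate * sensory_precision * error
        if act:
            arm -= weighted                        # alter the world to fit the prediction
        else:
            belief += weighted                     # alter the prediction to fit the world
    return belief, arm


# Start with the agent predicting its arm at 1.0 while the arm actually rests at 0.0.
print([round(v, 3) for v in reduce_error(1.0, 0.0, 1.0)])            # [0.0, 0.0]
print([round(v, 3) for v in reduce_error(1.0, 0.0, 1.0, act=True)])  # [1.0, 1.0]
```

Both runs end with zero prediction error, but only the second changes the world. As long as `rate * sensory_precision` stays between 0 and 2 the updates converge; the precision term simply scales how hard each error pushes.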

But what about sentience itself – that hard-to-pin-down feeling of stuff mattering and of truly ‘being in the world’? Here, recently-emerging work by Anil Seth and others highlights an under-appreciated feature of the total sensory stream that the agent is trying to predict. That feature is the stream of interoceptive information specifying (via dense vascular feedback) the physiological state of the body – the state of the gut and viscera, blood sugar levels, temperature, and much, much more (Bud Craig’s recent book How Do You Feel offers a wonderfully rich account of this).

What happens when a unified multi-level prediction engine crunches all that interoceptive information together with the information specifying organism-salient opportunities for action? Such an agent has a predictive grip on multi-scale structure in the external world. But that multi-layered grip is now superimposed upon (indeed, co-computed with) another multi-layered predictive grip – a grip on the changing physiological state of her own body. Agents like this are busy expecting themselves!

And these clearly interact. As your bodily states alter, the salience of various worldly opportunities alters too. Such estimations of salience are written deep into the heart of the predictive processing model, where they appear (as we just saw) as alterations to the weighting (the ‘precision’) of specific prediction error signals. As those estimations alter, you will act differently, harvesting different streams of exteroceptive and interoceptive information that in turn determine subsequent actions, choices, and bodily states.
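A toy version of this loop, assuming a single interoceptive variable (blood sugar) that sets the precision on food-related exteroceptive prediction errors. The setpoint, the weight mapping, the effect of each action on blood sugar, and the rule "act on the largest precision-weighted error" are all hypothetical simplifications chosen for illustration; they are not a model proposed in the post or the book.

```python
def precision_weights(blood_sugar, setpoint=5.0):
    """Hypothetical mapping from one interoceptive variable to precision weights.

    The further blood sugar falls below the setpoint, the more precision
    is assigned to food-related exteroceptive prediction errors.
    """
    deficit = max(0.0, setpoint - blood_sugar)
    return {"food": 0.5 + deficit, "explore": 1.0}


def choose_action(errors, blood_sugar):
    """Pick the opportunity whose precision-weighted prediction error is largest."""
    weights = precision_weights(blood_sugar)
    weighted = {name: weights[name] * abs(err) for name, err in errors.items()}
    return max(weighted, key=weighted.get)


# Hypothetical exteroceptive prediction errors for two worldly opportunities.
errors = {"food": 0.8, "explore": 0.6}
print(choose_action(errors, blood_sugar=5.0))  # sated  -> explore
print(choose_action(errors, blood_sugar=3.0))  # hungry -> food

# Closing the loop: each chosen action feeds back on the bodily state,
# which in turn re-weights the next round of prediction errors.
sugar, history = 3.0, []
for _ in range(4):
    action = choose_action(errors, sugar)
    history.append(action)
    sugar += 1.5 if action == "food" else -0.5
print(history)  # ['food', 'food', 'explore', 'explore']
```

The same exteroceptive errors yield different choices depending only on the interoceptive state, and those choices then change that state.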

Your multi-layer action-generating predictive grip upon the world is now inflected, at every level, by an interoceptively informed grip on ‘how things are (physiologically) with you’. Might this be the moment at which a robot, animal, or machine starts to experience a low-grade sense of being-in-the-world? Such a system has, in some intuitive sense, a simple grip not just on the world, but on the world as it matters, right here, right now, for the embodied being that is you. Agents like that experience a structured and – dare I say it – meaningful world: a world where each perceptual moment presents salient affordances for action, permeated by a subtle sense of our own present and unfolding bodily states.

More (and Less)

There is much that I’ve left out from these posts so far – most importantly, I haven’t said nearly enough about the crucial role of action and environmental structuring in altering the predictive tasks confronting the brain: changing what we need to predict, and when, in order to get things done. That’s where these stories stand to score further points by dovetailing neatly with further bodies of work in embodied, situated, and even ‘extended’ cognition. Nor have I addressed the impressive emerging bodies of work targeting multiple agents engaged in communal bouts of ‘social prediction’.

But rather than keep pushing my positive agenda, I propose to turn (in the next and final post) towards the dark side.

Thanks to Jun Tani for the header image. Watch for his book Exploring robotic minds: Actions, symbols, and consciousness as self-organizing dynamic phenomena, now in press with OUP.

Hello again.

Speaking of the self, it seems to me that your view has a rather interesting implication for the status of self-conceptions. Because we have a kind of control over our own conduct that we don’t have over the conduct of other aspects of the world, one might expect, on the predictive coding model, that responses to prediction errors about the self will exhibit very distinctive dynamics. Here’s what I have in mind. Suppose you conceive of yourself in some way, e.g., as a father, or a professor, or a believer-that-p. Such self-conceptualizations will naturally give rise to expectations or predictions. Now suppose these are sometimes in error. It seems like in the case of self-conceptions there is always a choice as far as minimizing future error is concerned: we can revise the self-conception, or we can instead revise the disposition that led to the error. For this reason, self-conceptions, unlike conceptions about distal objects in the environment, are almost always potentially regulative. If I fail to meet expectations regarding fatherhood, or professorhood, or believerhood-that-p, rather than revising such self-conceptions, I can always revise the dispositions that led to behavioral confutations of them. This makes self-conceptions very interesting, on a predictive coding model. For one thing, we might expect them to show a kind of stability unrelated to their (independent) truth. False conceptions about the distal environment will tend to go extinct, because they lead to prediction errors. But false self-conceptions might remain stable, by turning into self-fulfilling prophecies, i.e., by leading to alterations of dispositions in truth-making directions. There might be social facts that explain the stability of such initially false, yet eventually self-fulfilling self-conceptions, e.g., playing certain social roles may be required for social success in one’s community.
There might also be implications for the (quasi-Cartesian) feeling that self-conceptions are not corrigible in the way that conceptions of distal features of the environment are: it seems we can always hold onto a self-conception, even in the face of confuting data, because we can revise the dispositions that give rise to the data. “Third-person” data does not seem relevant to the status of the self-conception in the way that it is to the status of conceptions of distal objects in the environment…

Dear Tad,

That’s a fascinating speculation. I can see how it fits rather neatly with your mega-cool work on ‘mindshaping practices’ (see Tad’s “Mindshaping: A New Framework for Understanding Human Social Cognition”). In mindshaping, we alter ourselves so as (in my terminology) to present better targets for mutual prediction. In the kinds of case you here describe, we either alter our behaviour so as better to fit our own predictions, or else we alter our predictions so as better to fit our behaviour. This looks structurally identical to the two ways of reducing prediction error (PE) described in the posts. And as per my comments to Burr, I think something in the way we have already learnt to be will usually determine which of these strategies (or what admixture of the two) we prefer when confronted with salient (high-precision) PE.

Hi Andy!

Wonderful book! Excellent posts! Thanks for both!

As ever I am most intrigued by the relationship between the feeling body and predictive processing.

You write above that the “multi-layered grip is now superimposed upon (indeed, co-computed with) another multi-layered predictive grip – a grip on the changing physiological state of her own body”. Here you use the words ‘superimposed’ and ‘co-computed’. I wonder how deep we are to take this relationship to be.

In a previous post (responding to Chris Burr) you mentioned that ‘active inference’ most appropriately refers to “the unified mechanism by which perceptual and motor systems conspire to reduce prediction error using the twin strategies of altering predictions to fit the world, and altering the world to fit the predictions”. The point you were making then was to highlight a common misunderstanding about the depth of the interrelationship between perceptual and active inference.

I wonder if something similar should be said about the relationship between interoceptive predictive processing and exteroceptive predictive processing? While these two terms obviously point to processes that can in some ways be separated, there is mounting evidence that they never operate in isolation (as you mention). Instead, the neural processes underlying feeling, thinking and seeing are increasingly being described as ‘co-evolving’ across multiple brain areas and at various timescales.

It is right here that I see RPP coming face to face with recent network models of the brain (e.g., Luiz Pessoa) that aim specifically at dissolving the boundaries between cognition, emotion and perception. If that’s right, then looking closely at this relationship between emotion and perception may be one way to work towards making RPP even more embodied than it is so far.

Happy to hear any ideas that emerge from this quick note.

Again, thanks for everything Andy.

Mark Miller

Hi Mark,

Great to hear from you, and thanks for the kind words too.

I agree 100% that the cognition/emotion divide is misleading at the level of many of the mechanisms involved, and that this will carry over in some way to the case of interoceptive and exteroceptive PP.

It seems likely that complex cortical/sub-cortical loops will also play a major role in binding all this into a single embodied dynamical regime.

Getting that story straight will, I believe, have major implications for the explanation of the texture, and maybe even the very possibility, of conscious experience.

Mark & Andy,

Re exteroceptive and interoceptive

I wasn’t interpreting the interoceptive stimuli stream as referring to emotion, but rather to physiological states of the body. Although I’m curious how emotion enters the PP picture, I didn’t think that’s what this post was addressing.

Thanks for the wonderful, interesting blog posts!

Thanks!

I agree that these are different. I suspect they are related issues, but laying out that story will be a large and complex task.

Andy