My last post went back to babies, to see if the dawn of mental state attribution might show us something about the relationship between knowledge and belief. Even for those who take the concept of belief to be innate or very early-developing, belief attribution is weirdly dependent on knowledge attribution (or so I hinted): for example, the dupe in the unexpected transfer task starts out knowing that the ball is in the basket and then still thinks that it is there after it is moved behind his back.

I was cutting some corners there. The debate is (sigh) actually a bit more complex, with some important researchers holding back from the idea that infants are engaged in knowledge/belief attribution, and suggesting they might be doing something weaker. Josef Perner and Johannes Roessler start with a notion of “experiential record” in which the infant doesn’t attribute full-blown propositional attitudes to people she is watching, but instead just keeps track of various pairings of observed agents, objects and events. Ian Apperly and Stephen Butterfill have a somewhat similar ground-floor notion of “registration”. They have their reasons for thinking that the infants aren’t attributing propositional attitudes (they note, for instance, that the infants aren’t able to perform certain kinds of inferential transformations on the content in question), but I’m personally pretty skeptical of their arguments on this point: I like Peter Carruthers’s line that those transformations are hard for other reasons.

What Carruthers concludes is the following: “There seems no reason to think that the early mindreading system is incapable of attributing propositional thoughts to other agents. On the contrary, since infants have propositional thoughts from the outset themselves (as Apperly, 2011, acknowledges), they can take whatever proposition they have used to conceptualize the situation seen by the target agent and embed that proposition into the scope of a ‘thinks that’ operator.” (2013, 162)

Josef Perner is also pretty gracious about saying that there might not be a huge distance between his position and the position of those who see propositional attitude attribution at the outset (he confesses he gets “dangerously close” to belief attribution). Commenting on both his and Roessler’s “experiential record” idea and on Apperly and Butterfill’s “registration” idea, he writes: “Both notions are based on distinguishing events that a person has experienced (registered, encountered, witnessed, seen, …) from those events that the person hasn’t witnessed.” (Perner 2014, 296) “The facts registered by the agent are supposed to be taken by the infant as the basis for calculating the agent’s behavior, which in effect makes the registered facts to function as the content of the agent’s belief about the current state of the world.”

And now I have to say, in my capacity as a pedantic epistemologist: “Gentlemen! I like what you are doing, but you are getting it slightly wrong!” I very much like that they are shouldering the burden of starting to explain where attributed mental content comes from, in the first place, but what’s wrong is to think that it gets picked up and placed under a “thinks that” operator, or that the first notion here is “belief” or something “belief-like”, as Apperly likes to say, with his (I think unfounded) scruples about the propositional. If infants always have to start by looking at a real event, and seeing others as also registering that this event occurred, they are starting by attributing a factive state of mind, a state of mind that agents have only to truths.

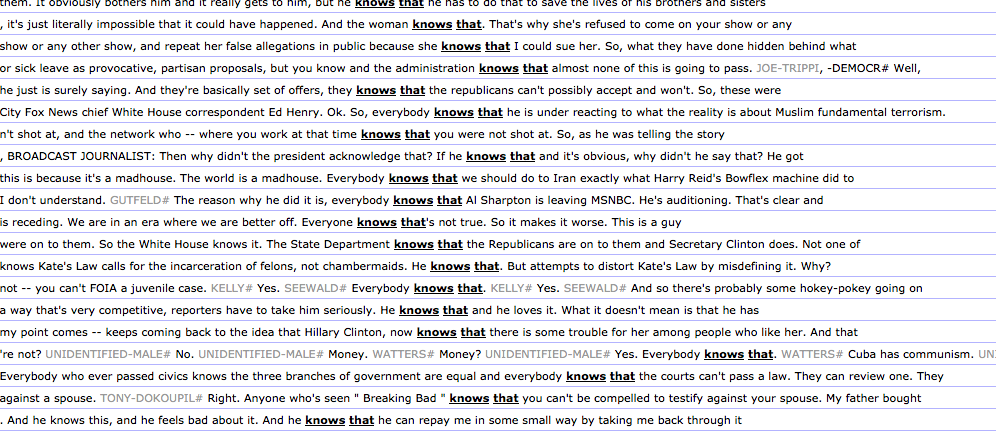

We arrive at last at the much-promised discussion of factivity. Factive expressions like “sees that”, “registers that” and “knows that” entail the truth of their complement clauses. Most philosophers use the term “factive” just to pick out that kind of entailment, for example following Williamson: “a propositional attitude is factive if and only if, necessarily, one has it only to truths.” (2000, 34). Some propositional attitudes (like “learn” and “forget”) are restricted to true complements but pick out processes rather than states. Williamson has argued that knowing is distinguished among factive mental states as the most general, as the one entailed by all the others.

Some predicates look at first like counterexamples to Williamson: they link agents only to truths and don’t entail knowing. One class of such predicates has been given great attention lately by linguists Valentine Hacquard and Pranav Anand: the predicates of veracity (“is right that”, “is correct that”). You don’t have to know that Governor McDonnell was taking bribes in order to be right that Governor McDonnell was taking bribes; even the person who just has totally ungrounded suspicions could turn out to be right. But if being right doesn’t entail knowing, the Williamsonian idea that all factive mental states entail knowing nevertheless turns out to be okay: Anand and Hacquard argue that “is right that” isn’t a mental state predicate. Predicates of veracity, they observe, don’t even require sentience on the part of their subjects, as they show in the contrast between acceptable sentences like (1) and unacceptable ones like (2):

(1) The report is right that McDonnell was taking bribes.

(2) *The report knows that McDonnell was taking bribes.

“The report” isn’t an agent, it’s not even sentient; “knows” has to be predicated of a sentient being, but “is right” doesn’t. What predicates of veracity are really doing is marking a contribution to the common ground of conversation, Hacquard and Anand argue, and a “repository of information” (like a book or report) can do this without having mental states. Lots of predicates (like “is tall”) can apply either to sentient or non-sentient beings; you don’t have a mental state predicate unless it applies only to the sentient (so for example “is happy” can’t apply to non-sentient beings unless you are being cute and personifying them). Okay, some mental state or other is typically presupposed when we talk about sentient creatures being right, but there’s a lot of latitude about what that state is, so “is right” itself isn’t a mental state predicate. (By the way “proves” also looks like a predicate in this general veridical class — something about what gets contributed to common ground, and not something inherently about the mental states of an agent.)

Now for a very exciting terminological point. Notice that Williamson would still call “is right” a factive predicate (albeit not a mental state one): necessarily, it takes only truths. But there’s a difference between philosophers and linguists on this point. Linguists would call “is right” a merely veridical predicate, because they use “factive” more restrictively: for them, factive predicates not only entail but also presuppose the truth of their complements. The truth of the presupposed complement shines through even when the factive verb is embedded under various logical and modal operators (see this review).

As the review explains, from

(3) Jones knows that the ball was moved.

…it follows that the ball was moved. But that background fact about the ball also typically follows from any of these:

(4) Jones doesn’t know that the ball was moved.

(5) Does Jones know that the ball was moved?

(6) Perhaps Jones knows that the ball was moved.

(7) If Jones knows that the ball was moved, he won’t look for it in the basket.

We can take special steps to cancel the presuppositions of (4-7), like saying “Jones doesn’t know that the ball was moved, because it wasn’t actually moved,” but when we don’t pull any special tricks like that, the complement comes across as true. Contrast “Winters is right that the block was moved” — that plain usage gives us the truth of the complement, but if we start embedding (“Is Winters right that the block was moved?”) the truth of the complement isn’t conveyed.

Now for an amazing result. Valentine Hacquard and Pranav Anand have discovered that when it comes to mental state predicates, any predicate that is factive in the philosophers’ sense (takes only true complements) is also factive in the linguists’ stronger sense (takes only true complements, and presupposes those complements, keeping their entailments going even under a storm of negation and modal operators). There are no mental state predicates that are merely veridical: getting it right (or true belief, or TB) is not a natural mental state label. To date, no one has explained to my satisfaction exactly why this is, and this question is what I’m working on right now.
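For readers who like to see a taxonomy laid out mechanically, here is a toy sketch of the classification just described. It is only an illustration under stated assumptions: the `Predicate` record, the three boolean features, and the hand-assigned feature values are my own simplification of the examples in this post, not Anand and Hacquard's formal apparatus, and the list of predicates is tiny and hand-picked.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Predicate:
    """Hypothetical feature bundle for an attitude-style predicate."""
    name: str
    entails_complement: bool      # plain use guarantees the complement is true
    presupposes_complement: bool  # complement survives negation, questions, modals
    mental_state: bool            # applies only to sentient subjects


def classify(p: Predicate) -> str:
    """Label a predicate using the linguists' terminology."""
    if not p.entails_complement:
        return "nonfactive"
    if p.presupposes_complement:
        return "factive"
    return "merely veridical"


# Feature values assigned by hand from the examples in the post (an assumption).
PREDICATES = [
    Predicate("knows that", True, True, True),
    Predicate("sees that", True, True, True),
    Predicate("thinks that", False, False, True),
    Predicate("is right that", True, False, False),
    Predicate("proves that", True, False, False),
]


def counterexamples(preds):
    """Mental state predicates that are merely veridical (claimed not to exist)."""
    return [p.name for p in preds
            if p.mental_state and classify(p) == "merely veridical"]


for p in PREDICATES:
    print(f"{p.name}: {classify(p)}")
print("counterexamples:", counterexamples(PREDICATES))
```

On this toy list, `counterexamples` comes back empty, which is just the generalization restated: a hypothetical mental state predicate meaning "truly believes that" would be the thing that shows up there, and natural languages do not seem to supply one.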

The way I see it, factive verbs are used both positively and under negation and modals, and in questions, to track and mark the mental states of agents against a shared background reality. It seems to be important that talk of knowing holds the background facts fixed even as we reason and raise questions. I’ve been thinking that there’s a very interesting difference between asking whether Smith knows that the ball was moved and asking whether Winters is right that the block was moved (and also of course interestingly different again from asking whether Jones thinks that the bag was moved). When we ask whether someone is right that p, what matters is the truth of p; questions about what people think or believe are questions about them as agents, perhaps holding in abeyance what might be going on in reality, and questions about what people know are questions about the relationship between agents and reality. If mental state attributions start out as being about agents and reality, then this might be a clue to the special status of knowledge.

There’s a temptation sometimes to think that the default mental state attribution we are going to give others is something weaker than knowledge: so Alan Leslie and colleagues, for example, say that “because people’s mundane beliefs are usually true, the best guess about another’s belief is that it is the same as one’s own” (Leslie, Friedman et al. 2004, 528). But this doesn’t quite do justice to cases where, for example, you can see that someone is better positioned than you are on a given point (I see that where he is standing, he has a clear view of the other side of the barrier). I don’t think that his belief about what is on the other side is the same as mine; my default is that he knows what is on the other side, and I don’t even have a belief on that point.

An interesting, and cross-linguistically robust, fact about factives: core factive verbs like “know” embed questions. You can know what is on the other side of the wall, you can know who put it there, or when they did so. Nonfactives like “think” don’t do this directly. (Again, there are some complexities: Paul Egré can take you on a great tour of what happens with emotive factives and interrogative verbs here.) Questions have an interesting degree of propositional structure, and the fact that they do enables us to see others as having propositional attitudes on issues we haven’t decided. Theorists who restrict themselves to thinking of non-factive fundamental states can miss this: so when Ágnes Kovács for example says that the fundamental representational vehicle is a “belief file”, she gets stuck saying that the “what’s behind the wall” case is just an “empty belief file with little or no content”, which I think falls short of capturing the kind of structure-with-openness that we are able to ascribe in this sort of case.

Anyway, the paper I’ve been trying to write lately starts out looking at the special value of factive attitudes in the onset of mental state attribution, but it pretty quickly faces the question of why we keep them going later in life. Suppose a factive attitude is a special magical thing whose very essence consists in a matching between mind and world (see Factivity: My Little Pony for details). Maybe infants have to start out by seeing other agents as having that sort of state of mind with respect to the real objects and events the agents are witnessing. But when we grow up, should we retreat to thinking that the best any agent ever has is a non-factive state of mind, a state of mind (like believing) which might or might not be true? We don’t need to be skeptical; we could follow Alan Leslie and company and say that most of the time most beliefs are true, but just have the general attitude that people are fallible, that perception and other ways of forming attitudes don’t always deliver the truth, and that the coldly sober thing to say at the end of the day is that we are all just walking around with a bunch of fallibly formed beliefs: that’s what our real mental states are like.

Why and how do adults preserve knowledge as a special, recognized state? Next time I want to look at that question, starting by looking at a computer simulation that models the onset of belief recognition.
