(See all posts in this series here.)

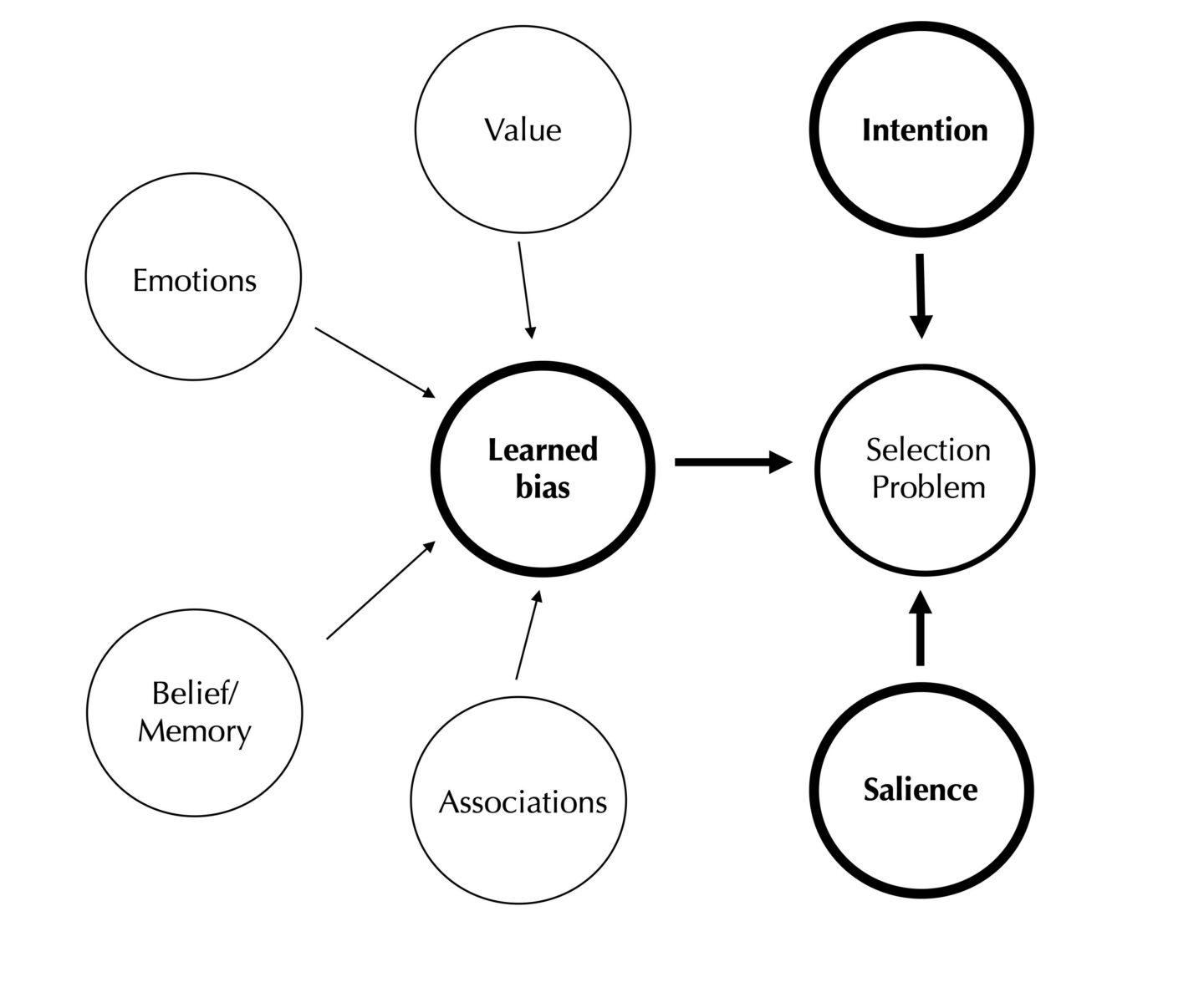

Philosophers have been debating implicit biases for some time. In Chapter 5 of MoM, I argue that automatic attention provides a scrutable type of implicit bias, scrutable because we understand well automatic attention across various domains and the automatic biases that engender it. Many of these biases are historical, due to past experience and some of them are depicted here:

Multiple biases can simultaneously contribute to solving the Selection Problem. The function of biases is defined within the Selection Problem. Biases include expectations (beliefs), values, intentions, emotions and memories as well as psychological phenomena such as priming, the processing of gist, or saliency maps. Without some bias, there is no action (Chp. 1.5).

The necessity of bias means that it is broadly applicable across all domains in which action figures. So there are epistemically relevant biases. For example, proper scientific reasoning requires appropriate sensitivity to evidence (Chp. 5.3). I call this sensitivity attunement (Chp. 3.4 on vigilance and fn. 2, p. 124). Consider, then, how we respond to data. Data is complicated, as we saw with the X-ray in Post 2. Data might contain information that speaks against one's hypothesis. Whether one critically assesses that information depends on whether one notices it. If one is not appropriately attuned, the data is "silent" and one will miss something important: one will fail to attend.

What makes one more likely to notice interesting but subtle data? The bias here can't lie in intention or belief, since if one already represented the information in question, one would already have registered it. Yet without bias of some sort, no action, and so no attention, will engage. What is needed here is automatic bias, the kind learned with experience such as training and practice. So consider again the X-ray data. While a novice will fail to notice relevant information on an X-ray, expert radiologists are sensitive to it, locating an anomaly within seconds. Understanding the many biases that explain this automatic sensitivity is a large task. Given that attention is central to action, and that evidence and reasons can make a difference only if we notice them, how and why we notice them becomes an important area of investigation.

Understanding bias informs our understanding both of expertise, a good bias, and of the cases grouped under the rubric "implicit bias", a bad bias. Let me close with a familiar case of a bad bias (Chp. 5.6). This was evident to academics some years ago, when many departments instituted a rule for Q&As along the lines of: the first few questions will go to graduate students. The reason for this rule (there may be other reasons) was to ensure that graduate student voices were heard. Think of this as a rule for distributing a limited epistemic good, namely question slots. Equitable distribution is a demand of epistemic fairness.

The rule was a response to a type of epistemic injustice in which faculty were prioritized over others, including students. Given that most moderators did not intentionally exclude students (intentional exclusion would be an explicit bias), this exclusion was automatic in the sense we have defined. What, then, biases what is effectively a visual search task (looking for raised hands)?

Here I can only offer a proposal, for the question is empirical. Various structures in our profession and in training tend to elevate some groups over others, prioritizing them and according them higher value or status. These structures are learned and become ingrained, setting biases in members of the profession. One relevant bias is the one linked to value. The skew in whose books or articles are recommended, whose works appear in syllabi, and who appears on a list of conference speakers can signal a disciplinary imprimatur on certain individuals or classes of individuals. That imprimatur establishes a type of valuation, one that can be learned implicitly. This value can bias search, decision and action.

I do not claim that this is the bias that explains why we end up with an unequal distribution of epistemic resources in the profession. In the Q&A case, there are also neutral biases that can affect who gets called on, such as a bias based on spatial proximity (I know that if I want to ask a question, I should sit up front). There can be many sources whose combined effect leads to a negatively biased outcome. Whatever these automatic, implicit biases are, they required an intention to act as a counterbias.

Two final points. First, while fairness often requires an intention to counterbias, what we aim for is to automatize fairness. The extant biases against which the intention pushes are themselves automatic. What is then required is to shift the agent's attentional capacities (her attunement) toward biases that are fair, that is, toward attention that will inform proper action. It is unclear what proper attunement would be without understanding which biases are in play, and these we cannot discover from the armchair. Second, I think we can understand a number of implicit biases as driven by automatic attention, and where this is so, we have the full apparatus of the theory of attention at our disposal to aid investigation. This allows us to explain the biases in terms of mechanisms that we understand and can precisely manipulate in the lab and, hopefully, to arrive at concrete proposals for amelioration. We are not left merely debating the relevant factors, as we are in other cases of implicit bias. As the figure above indicates, we know many of the biases on attention and how they work. If we wish to deeply understand and address certain implicit biases, focusing on attention promises a rich and productive path.
