In The Embodied Mind, we presented the idea of cognition as enaction as an alternative to the view of cognition as representation. By “representation” we meant a structure inside the cognitive system that has meaning by virtue of its corresponding to objects, properties, or states of affairs in the outside world, independent of the system. By “enaction” we meant the ongoing process of being structurally and dynamically coupled to the environment through sensorimotor activity. Enaction brings forth an agent-dependent world of relevance rather than representing an agent-independent world. We called the investigation of cognition as enaction the “enactive approach.”

In formulating the enactive approach, we drew on multiple sources—the theory of living organisms as autonomous systems that bring forth their own cognitive domains; newly emerging work on embodied cognition (how sensorimotor interactions with the world shape cognition); Merleau-Ponty’s phenomenology of the lived body; and the Buddhist philosophical idea of dependent origination, specifically the Madhyamaka version, according to which cognition and its objects are mutually dependent.

Since The Embodied Mind was first published, the enactive approach has worked to establish itself as a wide-ranging research program, extending from the study of the single cell and the origins of life to perception, emotion, social cognition, and AI. The foundational concept of the enactive approach, and the one that ties together the investigations across all these domains, is the concept of autonomy.

Varela, in his 1979 book, Principles of Biological Autonomy, presented autonomy as a generalization of the concept of autopoiesis (cellular self-production). The concept of autopoiesis describes a peculiar aspect of the organization of living organisms, namely, that their ongoing processes of material and energetic exchanges with the world, and of internal transformation, relate to each other in such a way that the same organization is constantly regenerated by the activities of the processes themselves, despite whatever variations occur from case to case. Varela generalized this idea by defining an autonomous system as a network of processes that recursively depend on each other for their generation and realization as a network, and that constitute the system as a unity. He applied this idea to the nervous system and the immune system, and he hinted at its application to other domains, such as communication networks and conversations.

Here’s how we introduced this idea in The Embodied Mind, just after quoting a passage from Marvin Minsky’s The Society of Mind, in which he writes, “The principal activities of brains are making changes in themselves” (p. 288):

“Minsky does not say that the principal activity of brains is to represent the external world; he says it is to make continuous self-modifications. What has happened to the notion of representation? In fact, an important and pervasive shift is beginning to take place in cognitive science under the influence of its own research. This shift requires that we move away from the idea of the world as independent and extrinsic to the idea of a world as inseparable from the structure of these processes of self-modification. This change in stance… reflects the necessity of understanding cognitive systems not on the basis of their input and output relationships but by their operational closure. A system that has operational closure is one in which the results of its processes are those processes themselves. The notion of operational closure is thus a way of specifying classes of processes that, in their very operation, turn back upon themselves to form autonomous networks… The key point is that such systems do not operate by representation. Instead of representing an independent world, they enact a world as a domain of distinctions that is inseparable from the structure embodied by the cognitive system” (pp. 139-40).

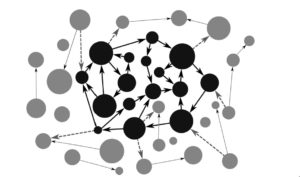

In recent work, Ezequiel Di Paolo and I define an autonomous system as an operationally closed and precarious system. We can use this figure to depict the basic idea (what follows borrows from a book chapter I co-wrote with Ezequiel).

The circles represent processes at some spatiotemporal scale being observed by the scientist. Whenever an enabling relation is established, the scientist draws an arrow going from the process that is perturbed to the process that stops or disappears as a consequence. An arrow going from process A to process B indicates that A is an enabling condition for B to occur. Of course, there may be several enabling conditions. We don’t assume that the scientist is mapping all of them, only those that she finds relevant or can uncover with her methods.

As the mapping of enabling conditions proceeds, the scientist makes an interesting observation. There seems to be a set of processes that relate to each other with a special topological property. These processes are marked in black in the figure. If we look at any process in black, we observe that it has some enabling arrows arriving at it that originate in other processes in black, and moreover, that it has some enabling arrows coming out of it that end up also in other processes in black. When this condition is met, the black processes form a network of enabling relations; this network property is what we mean by operational closure.
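A minimal sketch of the topological property just described, assuming the scientist's map is stored as a directed graph in which an arrow A → B means "A enables B". The process names A to E and the `closed_core` helper are hypothetical; they illustrate the definition and are not a transcription of the figure. Repeatedly discarding any process that lacks an enabling arrow from, or to, some other remaining process leaves the largest operationally closed set. Because a union of closed sets is again closed, this pruning never removes a process that belongs to some closed set.

```python
from typing import Dict, Set

# Hypothetical encoding: process -> set of processes it enables (A -> B means A enables B).
Graph = Dict[str, Set[str]]


def predecessors(graph: Graph) -> Graph:
    """Invert the map: process -> set of processes that enable it."""
    preds: Graph = {node: set() for node in graph}
    for src, targets in graph.items():
        for dst in targets:
            preds.setdefault(dst, set()).add(src)
    return preds


def closed_core(graph: Graph) -> Set[str]:
    """Largest set in which every member both receives an enabling arrow
    from, and sends one to, some *other* member of the set."""
    preds = predecessors(graph)
    core = set(preds)  # every process mentioned anywhere in the map
    changed = True
    while changed:
        changed = False
        for node in list(core):
            has_in = any(p in core and p != node for p in preds.get(node, ()))
            has_out = any(s in core and s != node for s in graph.get(node, ()))
            if not (has_in and has_out):
                core.discard(node)  # cannot be part of a closed network
                changed = True
    return core


enables: Graph = {
    "A": {"B"},
    "B": {"C", "E"},
    "C": {"A"},
    "D": {"A"},  # enables the loop but is not enabled by it
    "E": set(),  # depends on the loop but feeds nothing back
}

print(sorted(closed_core(enables)))  # ['A', 'B', 'C']
```

In this toy map, D enables the loop without being enabled by it, and E depends on the loop without feeding anything back; neither belongs to the closed network, yet neither prevents A, B, and C from forming one.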

Notice that this form of “closure” doesn’t imply the independence of the network from other processes that aren’t part of it. First, there may be enabling dependencies on external processes that aren’t themselves enabled by the network; for example, plants can photosynthesize only in the presence of sunlight (an enabling condition), but the sun’s existence is independent of plant life on earth. Similarly, there may be processes that are made possible only by the activity of the network but that do not themselves “feed back” any enabling relations toward the network itself. Second, the arrows don’t describe every possible form of coupling between processes, but only links of enabling dependence. Other links may exist too, such as interactions that have only contextual effects. In short, an operationally closed system shouldn’t be conceived as isolated from dependencies or from interactions.

Notice too that although the choice of processes under study is more or less arbitrary and subject to the observer’s history, goals, tools, and methods, the topological property uncovered isn’t arbitrary. The operationally closed network could be larger than originally thought, as new relations of enabling dependence are discovered. But the network already identified is operationally closed in its own right, and this fact can’t be changed short of its inner enabling conditions changing, that is, short of some of its inner processes stopping.

A living cell is an example of an operationally closed network. The closed dependencies between constituent chemical and physical processes in the cell are very complex, but it’s relatively easy to see some of them. For example, the spatial enclosure provided by a semi-permeable cell membrane is an enabling condition for certain autocatalytic reactions to occur in the cell’s interior; otherwise the catalysts would diffuse in space and the reactions would occur at a very different rate or not at all. Hence there is an enabling arrow going from the spatial configuration of the membrane to the metabolic reactions. But the membrane containment isn’t a given; the membrane is also a precarious process that depends, among other things, on the repair components that are generated by the cell’s metabolism. So there is an enabling arrow coming back from metabolic reactions to the membrane. Hence we have already identified an operationally closed loop between these cellular processes. If the scientist chose not to observe the membrane in relation to the metabolic reactions, she would probably miss the topological relation between them. Operational closure (cellular life, in this case) can be missed if we choose to put the focus of observation elsewhere, but it isn’t an arbitrary property if we observe it at the right level.
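A small sketch of the membrane and metabolism loop as an enabling-dependence graph, assuming a drastically simplified map in which each process is a single node. The labels, the `is_operationally_closed` helper, and the extra arrow added at the end are hypothetical. A candidate set counts as operationally closed here if every member is enabled by, and enables, some other member. Checking a set that leaves out the membrane shows how the loop is missed under a different choice of observed processes, and adding a newly discovered arrow enlarges the closed network without dissolving the original loop.

```python
from typing import Dict, Set


def is_operationally_closed(enables: Dict[str, Set[str]], candidate: Set[str]) -> bool:
    """Every candidate process must be enabled by, and enable, another candidate."""
    for proc in candidate:
        feeds_in = any(proc in enables.get(other, set())
                       for other in candidate if other != proc)
        feeds_out = any(t in candidate and t != proc
                        for t in enables.get(proc, set()))
        if not (feeds_in and feeds_out):
            return False
    return bool(candidate)  # an empty selection is not a network


# Hypothetical, simplified map of a few cellular processes.
enables: Dict[str, Set[str]] = {
    "membrane": {"metabolism"},        # containment keeps catalysts concentrated
    "metabolism": {"membrane"},        # repair components rebuild the membrane
    "nutrient_uptake": {"metabolism"},
}

print(is_operationally_closed(enables, {"membrane", "metabolism"}))         # True
print(is_operationally_closed(enables, {"metabolism", "nutrient_uptake"}))  # False: membrane not observed

# Hypothetical discovery: metabolism also enables nutrient uptake.
enlarged = {proc: set(targets) for proc, targets in enables.items()}
enlarged["metabolism"].add("nutrient_uptake")
print(is_operationally_closed(enlarged, {"membrane", "metabolism", "nutrient_uptake"}))  # True
print(is_operationally_closed(enlarged, {"membrane", "metabolism"}))                    # True
```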

Given how we’ve defined operational closure, various trivial examples of such closure may exist. For example, in cellular automata, the regeneration of an equilibrium state in each cell mutually depends on the equilibrium state in others, making the dependencies into a closed network.
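As an illustration of such a trivial case, here is a sketch using a one-dimensional majority-rule automaton on a ring (our choice of rule; the text does not specify one, and `majority_step` is a hypothetical helper). The all-zero state is an equilibrium, and each cell's return to 0 depends on its neighbors being at 0: a single cell knocked out of equilibrium is pulled back after one update. Nothing in this model consumes energy or runs down of its own accord, which is what the condition of precariousness adds.

```python
from typing import List


def majority_step(cells: List[int]) -> List[int]:
    """One synchronous update of a 1-D majority-rule automaton on a ring:
    each cell takes the majority value of itself and its two neighbors."""
    n = len(cells)
    return [
        1 if cells[(i - 1) % n] + cells[i] + cells[(i + 1) % n] >= 2 else 0
        for i in range(n)
    ]


state = [0] * 8
perturbed = state.copy()
perturbed[3] = 1  # knock one cell out of equilibrium

print(majority_step(perturbed))       # [0, 0, 0, 0, 0, 0, 0, 0]
print(majority_step(state) == state)  # True: the uniform state regenerates itself
```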

We need an additional condition to make operational closure non-trivial, and this condition is that of precariousness. Of course, all material processes are precarious if we wait long enough. In the current context, however, what we mean by “precariousness” is the following condition: In the absence of the enabling relations established by the operationally closed network, a process belonging to the network will stop or run down. A precarious process is such that, whatever the complex configuration of enabling conditions, if the dependencies on the operationally closed network are removed, the process necessarily stops. In other words, it’s not possible for a precarious process in an operationally closed network to exist on its own in the circumstances created by the absence of the network.
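The precariousness condition can be sketched as a check, assuming each process's enabling conditions can be listed as alternative sets, any one of which suffices for the process to run. The encoding, the process names, and the "sturdy" variant are hypothetical. A network member is precarious if, once every process of the network is withdrawn, none of its alternatives can be met by what remains.

```python
from typing import Dict, FrozenSet, List, Set

# Hypothetical encoding: each process maps to alternative sets of enabling
# conditions; the process can run if ANY one of those sets is fully present.
Alternatives = Dict[str, List[FrozenSet[str]]]


def is_precarious(process: str, alternatives: Alternatives,
                  network: Set[str], available: Set[str]) -> bool:
    """True if `process` cannot run once every process of `network` is withdrawn."""
    remaining = available - network
    return not any(option <= remaining for option in alternatives[process])


def network_is_precarious(alternatives: Alternatives,
                          network: Set[str], available: Set[str]) -> bool:
    """Every member of the network must depend on the network to keep running."""
    return all(is_precarious(p, alternatives, network, available) for p in network)


network = {"membrane", "metabolism"}
available = {"membrane", "metabolism", "lipids", "nutrients"}

alternatives: Alternatives = {
    "membrane": [frozenset({"metabolism", "lipids"})],
    "metabolism": [frozenset({"membrane", "nutrients"})],
}
# Hypothetical variant: metabolism has a second route that needs only
# external nutrients, so it could keep running without the network.
sturdy: Alternatives = {
    **alternatives,
    "metabolism": alternatives["metabolism"] + [frozenset({"nutrients"})],
}

print(network_is_precarious(alternatives, network, available))  # True
print(network_is_precarious(sturdy, network, available))        # False
```

The sturdy variant is still operationally closed (metabolism and membrane still enable each other), but because one member could persist without the network, it fails the precariousness condition.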

A precarious, operationally closed system is literally self-enabling, and thus it sustains itself in time partly due to the activity of its own constituent processes. Moreover, because these processes are precarious, the system is always decaying. The “natural tendency” for each constituent process is to stop, a fate the activity of the other processes prevents. The network is constructed on a double negation. The impermanence of each individual process tends to affect the network negatively if sustained unchecked for a sufficient time. It’s only the effect of other processes that curbs these negative tendencies. This dynamic contrasts with the way we typically conceive of organic processes as contributing positively to sustaining life; on this view, if any of these processes were to run unchecked, it would kill the organism. Thus, a precarious, operationally closed system is inherently restless, and in order to sustain itself despite its intrinsic tendencies towards internal imbalance, it requires energy, matter, and relations with the outside world. Hence, the system is not only self-enabling, but also shows spontaneity in its interactions due to a constitutive need to constantly “buy time” against the negative tendencies of its own parts.
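A toy numerical sketch of this double negation, assuming two processes whose activity levels x and y each decay at a fixed rate unless rebuilt by the other, with rebuilding proportional to an external supply of energy and matter. The equations, parameter values, and the `simulate` helper are hypothetical, and forward-Euler integration is used only for simplicity. With enough supply the pair settles at a nonzero level (here 1 - decay/(gain*supply) = 0.8); without supply, or with one enabling arrow cut, both run down.

```python
from typing import Tuple


def simulate(supply: float, coupled: bool = True, decay: float = 0.1,
             gain: float = 1.0, x0: float = 0.5, y0: float = 0.5,
             dt: float = 0.1, steps: int = 2000) -> Tuple[float, float]:
    """Euler-integrate a hypothetical two-process precarious network.

    Each activity level decays at rate `decay` (its tendency to run down).
    Each is rebuilt in proportion to the other's activity and to the external
    `supply`; `coupled=False` cuts the enabling arrow from y back to x.
    """
    x, y = x0, y0
    for _ in range(steps):
        rebuild_x = gain * supply * y * (1 - x) if coupled else 0.0
        rebuild_y = gain * supply * x * (1 - y)
        # Simultaneous update: both right-hand sides use the old x and y.
        x, y = x + dt * (-decay * x + rebuild_x), y + dt * (-decay * y + rebuild_y)
    return x, y


print(simulate(supply=0.5))                 # both settle near 0.8
print(simulate(supply=0.0))                 # no inflow: both run down toward 0
print(simulate(supply=0.5, coupled=False))  # one arrow cut: x decays, then y follows
```

Neither process sustains itself: each is held up only by the other's activity countering its own decay, and that mutual support in turn depends on a continual inflow from outside.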

Operational closure and precariousness, required together, are the defining properties of autonomy for the enactive approach. It’s this concept that enables us to give a principled account of how the living body is self-individuating: how it generates and maintains itself through constant structural and functional change, and thereby enacts its world. When we claim that cognition depends constitutively on the body, it’s the body understood as an autonomous (self-individuating) system that we mean. It’s this emphasis on autonomy that differentiates the enactive approach from other approaches to embodied cognition. It’s also what differentiates the enactive approach in our sense from the approaches of other philosophers who use the term “enactive” but without grounding it in the theory of autonomous systems.

— Featured Image Credit for this post: Tobias Gremmler.

“Physics is Behavioural Science of Matter”

The above paradigm shift is essential to understand reality correctly.

It is not Physics that gives behaviour (properties) to matter; instead, it is the behaviour of matter that gives us Physics.

https://www.linkedin.com/pulse/consciousness-simple-easy-shaikh-raisuddin?_mSplash=1

Evan,

Thanks for this helpful post.

“By “enaction” we meant the ongoing process of being structurally and dynamically coupled to the environment through sensorimotor activity.”

So far so good. I don’t know anyone who has ever disagreed that the neurocognitive system is structurally and dynamically coupled to the environment through sensorimotor activity. This is a well-known fact.

“Enaction brings forth an agent-dependent world of relevance rather than representing an agent-independent world.”

I probably don’t understand what you mean by “brings forth an agent-dependent world,” which has puzzled me ever since I first read The Embodied Mind and other work by Varela. It sounds either like idealism or like a conflation between internal representations and external world; I’ll just ignore that expression and focus on enaction qua coupling to the environment through sensorimotor activity.

How does the cognitive system couple itself to the environment through sensorimotor activity in its exquisitely adaptive and effective way? Thanks to an internal model of its body and environment that it constructs and uses to drive its behavior. This is representation. This is the mainstream cognitive neuroscientific explanation of “enaction,” and there is plenty of evidence to support it. I’m not aware of any even moderately successful alternative explanation.

(People sometimes bring up Brooks’s robots from the 1980s as an alternative framework. Well, some of Brooks’s robots were still representational, although in a rather impoverished way; and in any case, their behavior was nowhere near the level of behavioral sophistication of complex organisms. That’s why today’s robots, which incorporate Brooks’s lessons and are way more powerful than Brooks’s, use the best representations they can construct.)

Why would you or other enactivists present enaction as an alternative to representation, rather than as a straightforward phenomenon that calls for a representational explanation? I’ve encountered this anti-representationalist rhetoric over and over again, and I still don’t get it.

This concerns me a lot too, Gualtiero. If you read the neuroscience literature, it seems that representations are helpful for explaining the tight coupling between environment and behavior. The dynamical and representational are not in tension.

(By sensory representations I refer to the ‘internal maps by means of which we steer’ (Dretske), or more precisely, sensory-information-bearing states interleaved between stimulus and behavior, states that are used to guide behavior with respect to said stimuli.)

If you dig deeply, many of the anti-representationalists are actually ersatz anti-representationalists. For instance, you will find in Noë and O’Regan, buried in their putatively anti-representationalist anthem (Sensorimotor account of vision), in response to commentators:

“We are firmly convinced – and the data cited by the commentators provide proof – that the visual system stores information; and that this information […] influences the perceiver’s behavior and mental states either directly or indirectly. If this is what is meant when it is insisted that perceiving depends on representations, then we do not deny that there are representations” (p 1017).

This is basically them being representationalists, but their response is a little weird because of their “insisting on” language (they are the ones making the unusual claim, after all). I think the right response is simply: “Yes we are only attacking certain species of representationalism [e.g., LOT, or whatever].”

I’d be curious where Thompson falls on this: he defines ‘representation’ in an idiosyncratic enough way (‘structure inside the cognitive system that has meaning by virtue of its corresponding to objects’) that I could see him being fine with neural definitions, so that, for instance, retinal ganglion cells represent the visual world.

https://www.academia.edu/3781186/Neural_Representations_Not_Needed_No_More_Pleas_Please

I’m sorry for taking so long to respond to these comments; I only just noticed them now (somehow I missed them before).

“Brings forth an agent-dependent world” doesn’t mean idealism, nor does it conflate internal representations and the outside world. The point is that the world of a cognitive system needs to be understood at a spatiotemporal scale and conceptual level of analysis that is system-relative, where this means that the system contributes to the constitution of that world. This is analogous to the biological idea of a niche and Gibson’s idea of an ecological level for perception.

I think that “representation” is the most over-used word in cognitive science. Sometimes it means something in the brain that structurally and causally covaries with something else—as in the case of retinal ganglion cell firings and visual stimuli, or topographic V1 neurons and the retinal array. Of course, I don’t object to this idea of causal/structural covariance. But using words like “representation” or “model” or “information” here is confusing, because it runs the risk of conflating causal/structural covariance with meaning. Any semantic use of representational talk that’s meant to be explanatory, and not merely as-if talk, requires a semantic theory for representations. It’s rare that we see “representation” used in a way that makes its meaning rigorously specified in terms of a clear statement of what is the representational vehicle or format, what is the representational content or semantics, what is the function that takes us from brain activity to representational vehicle, and what is the function that takes us from representational vehicle to meaning. Although theorists talk in terms of representations all the time, there are very few theories of how meaning is supposed to be coded in the brain that would make this talk rigorous.

So, until someone comes along with such a theory, the idea that representation explains enaction seems to me to be vacuous.

Thanks, Evan.

By “the system contributes to the constitution of that world,” do you mean that the system causes changes in its environment which in turn make a difference to what is perceived? I have no objection to that, which is perfectly compatible with representationalism.

On theories of representational content, what’s your objection to mainstream informational teleosemantic theories à la Dretske, Millikan, etc.? For instance, what is wrong with the following: a neural state represents that P if and only if (i) it carries natural semantic information that P and (ii) its function is to carry natural information that P so as to guide the organism in response to the fact that P.

I don’t think the above theory of content solves all the problems, but something along those lines seems to be a fairly adequate account of the content of perceptual neural representation.

Thanks for the follow up, Gualtiero.

Bacterial chemotaxis would be one example of what I mean by “the system contributes to the constitution of that world.” Bacteria swim up and down chemical gradients by sensing and adjusting themselves to changes in the rates of concentration of various kinds of molecules; in this way, they actively change their own boundary conditions and adapt themselves to those changes, while registering those changes as advantageous versus disadvantageous. But that some kinds of molecules (e.g., sucrose, aspartate) are attractants and other kinds of molecules (e.g., heavy metals) are repellents is a function not just of the physiochemical properties of the molecules but also of the bacterial cell as a self-maintaining (autopoietic) system. On the one hand, there are the structural properties of physiochemical processes—molecules forming gradients, traversing cell membranes, and so on. On the other hand, there is the constitution of a niche in which the biological significance of molecules as attractants or repellents is constituted by the bacterial cell as a metabolic system. So it’s not just that the bacteria cause changes in their environment that in turn make a difference to what they detect; it’s that the niche they inhabit—in which certain kinds of molecules have the significance they have—is constituted by the bacteria.

I can’t do justice to your question about teleosemantic theories of content here. In brief, these theories require that functional ascription be determinate in ways that I think are problematic. I think the conception of natural selection they presuppose is problematic too. So the problems for me mainly have to do with condition (ii) in your definition. I realize more needs to be said, but that would take more than is possible here.