This final post addresses an obvious puzzle: why is reflective thinking sensory based? We can, after all, think about all sorts of abstract nonsensory topics. We think about God, the size of the universe, the mental states of other people, the validity of arguments, arithmetical facts and other mathematical entities, and so on and so forth. Why is our conscious thinking about such matters constrained to take place in a sensory-based imagistic format? After all, when artificial intelligence designers create systems that can reason about such matters, they do not use a sensory-based system to do so. On the contrary, the representations they design to be involved in such processes are just as amodal as the subject-matters those representations are about. So why are we so different?

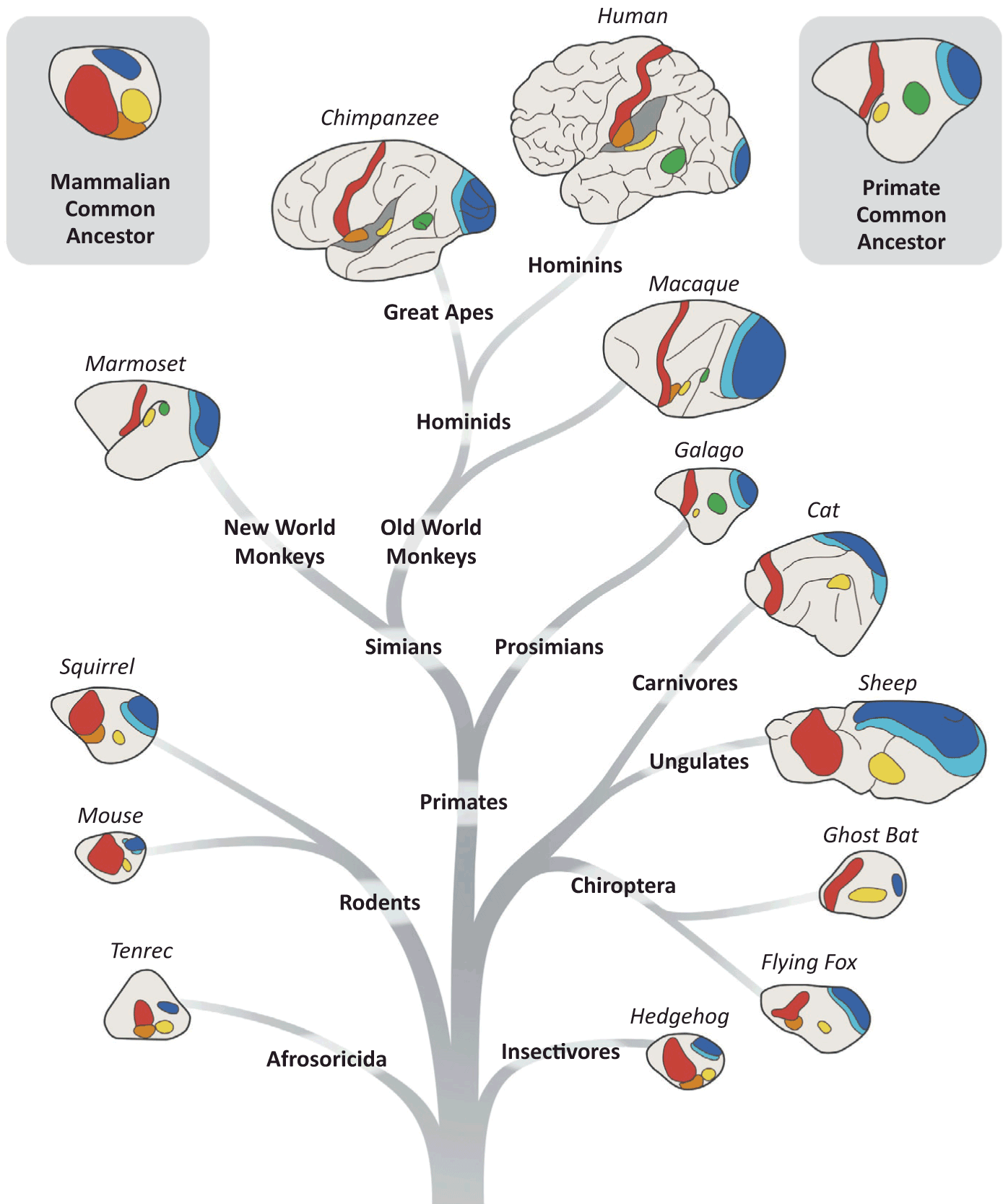

The answer I propose is an evolutionary one. We are constrained to use a sensory-based working-memory system for conscious thinking for the same basic reason that we are constrained to be four-limbed creatures. Both properties are too deeply built into the body plans of mammals and birds (and perhaps all vertebrates) for evolution to have been capable of providing any other solution. Suppose that human life-ways had evolved to require the use of three hands, forcing cooperation between two or more people for day-to-day survival. It would be natural to wonder in such circumstances why humans had not evolved a third arm. But in this case the answer would be plain: such a radical change in body-plan could not appear suddenly in the human lineage. The answer is the same, I suggest, for sensory-based thinking.

We know that basic brain architectures, just like skeletal structures, are highly conserved across species. And we know that all mammals have attentional networks homologous with those of humans. Indeed, even birds have attentional networks that are partly homologous to ours. This is likely so for good reason. All creatures face a version of the same problem, which is to select, from among the deluge of information available to them, some smaller set of items for deeper processing. The upshot is the “centered mind”, in which attended items are globally broadcast for a wide range of different systems to process and respond to, thereby coordinating the activity of those systems (and hence the organism as a whole) around a single focus.

We also know that many mammals and birds not only have the capacity to sustain and manipulate representations in working memory, but that many species make use of these capacities for planning and problem solving. The same attentional network that was initially designed to select perceptual items for global broadcasting was adapted to sustain those representations in the absence of a stimulus (as when an object disappears behind an occluder), and to activate representations from memory into the global workspace as well. Moreover, many species seem capable of mentally rehearsing potential actions, attending to the sensory forward models that result, and evaluating the likely consequences in advance of acting. In most cases, however, what are manipulated in working memory are representations of perceptually available objects and properties, such as how the water level in a tube would change if one dropped a sequence of stones into it. When humans (and to some degree other primates) started to use their working-memory systems to reflect on more abstract matters, then, they were constrained to use sensory-based representations (visual images or inner speech, for example) in order to do it.

These points tie nicely back to the topic of my last post. It has often been claimed that System 2 reflective reasoning is uniquely human. But this is mistaken. Prospective reasoning, in particular (in which one mentally rehearses the actions open to one and responds affectively to the results), is employed by many different creatures besides ourselves. What is different about humans is that we have vastly more concepts that can be bound into the sensory-based contents of the global workspace, and that (being uniquely capable of speech) we can rehearse speech actions to guide and control our reflections. We may also be unique in making chronic use of the working-memory system in mind wandering, which for us is our “default mode” (though this has yet to be established). Moreover, humans are unique in acquiring norms of reasoning from their culture, and constraining their working-memory processes accordingly.

There is one aspect of conscious thinking that is not sensory based. That is our thoughts about the volumetric space in which we are the perspectival locus of origin. This is because we have no sensory apparatus for detecting the global space in which we live.

Arnold, you misunderstand the sense in which I claim that conscious thinking is sensory-based. I do not claim that all components of conscious thought require a sensory origin, or anything like that. Quite the contrary, I think that there is lots of innate structure to experience, and I also think there are a range of amodal (non-sensory) innate concepts or conceptual primitives. The claim is just that these non-sensory representations can’t enter working memory and become globally available unless bound into a sensory representation (whether perceptual or imagistic).

Peter, how then can you explain the hallucinated objects in the SMTT experiments? These non-sensory representations must enter working memory because they can be recalled/described in significant detail.

Arnold, I think this is the same point again. In your “seeing more than is there” experiments, what the person sees goes beyond the stimulus presented at a given moment. (If I understand it right, it is constructed by the visual system from multiple snapshots.) But the result is still a (conscious experience of a) visual representation of an object. That is all I mean by sensory-based. “Sensory-involving” might be better, if you like. The claim is not that all conscious content is reliably grounded in the input. It is that all consciousness constitutively involves a sensory component.

The point that I am making is that there can be no sensory component to conscious experience without *first* having a non-sensory egocentric brain representation of the volumetric space in which one exists. This is subjectivity, the fundamental prerequisite of all conscious sensory experience. So consciousness precedes sensory components even though our conscious experience is normally full of sensory components.

Arnold –

The content of the Retinoid System’s representation of the surround presumably is sensory based, so you’re only addressing the RS’s representation of the surround itself, right? And as I understand the RS, planes of autaptic cells constitute the model of the surround (as “seen” by I!). But until those planes are populated as a result of sensory input, it seems a bit misleading to call that model a “representation” of the surround from the perspective of I! (somewhat like calling a canvas a representation of a scene from the perspective of the painter prior to any paint having been applied). In which case the disconnect between you and Peter would seem to be merely terminological.

Charles: “The content of the Retinoid System’s representation of the surround presumably is sensory based”

No. This is a key point. Our fundamental brain representation of a volumetric surround (the world we live in) cannot be sensory based because we have no sensory organs that can detect the space around us. The sheer brain representation of a volumetric space surrounding a fixed locus of perspectival origin (our self locus) must be an innate evolutionary endowment that, in retinoid theory, constitutes creature consciousness. Elaborations of sensory and cognitive mechanisms contribute to the richness and power of conscious thought.

I should add that it is not misleading to call the model a representation of the surround from the perspective of I!, because the space surrounding the self locus (I!) has a left side, a right side, up and down, near and far, etc. Sensory content projected into retinoid space is located in these egocentric terms.

I actually understand all that, Arnold. I’m just suggesting that others, like me, may have difficulty thinking of an I!-centric model/replica/whatever of empty space – even though referenced to the orientation within that space of an organism not currently undergoing sensory stimulation – as a “representation” of that space.

As best I can tell, “representation” – like many words in this discipline – isn’t so precisely defined that in every situation there’s likely to be consensus on whether something is one or not.

Charles, that is why it is so hard for some to understand what the primitive state of consciousness is like.