Conceptual pluralism is the view that, for any category we can think about, we typically possess many different concepts of it: many different ways of representing that category in higher cognition. Different kinds of concepts encode their own specific perspectives on the target category, and each one can be deployed in a host of interconnected cognitive tasks.

A challenge for pluralism is explaining why, given that concepts display this kind of polymorphism, we should think that there is anything interesting to say about them in general, from the standpoint of psychological theorizing. Doesn’t the term “concept” become just a label for this disunified and unsystematic grab-bag of representations?

I’d like to approach this topic by tracing out some connections between theories of concepts and some related ideas in the psychology of memory. The links between these two fields are worth exploring insofar as they hint at some wider generalizations about the adaptive nature of cognition.

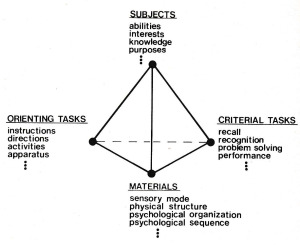

In his 1979 article “Four Points to Remember: A Tetrahedral Model of Memory Experiments,” James Jenkins proposed that we think of experimental paradigms as having four independently manipulable components: subject population, to-be-remembered materials, orienting or encoding task, and criterial or retrieval task. As the paper’s title suggests, when diagrammed these form the vertices of a tetrahedron that gives us an abstract space of possible experiments to explore:

Relationships between particular values of these component variables are often stated without qualification, as if they reflected universal facts about the operation of memory processes. Jenkins’ model suggests, however, that such generalizations should always be implicitly qualified or hedged by reference to all the other components. Claims about how this particular subject population performs when manipulating these materials in this task context may not hold if other factors about the situation are perturbed.

As I read Jenkins, then, he is advancing a form of contextualism: memory generalizations are highly dependent on the total character of the experimental setup. They are situationally circumscribed and always potentially fragile.
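One way to make the tetrahedral picture concrete is to treat each experiment as a point in the product of the four component variables. This is a simplifying assumption, not Jenkins’ own formalism, and the component values below are hypothetical placeholders. The sketch counts the setups that differ from a tested one on a single component; on the contextualist reading, a finding at the tested point is silent about each of them.

```python
from itertools import product
from typing import NamedTuple


class Experiment(NamedTuple):
    """One point in a four-component experiment space (labels are illustrative)."""
    subjects: str
    materials: str
    encoding_task: str
    retrieval_task: str


# Hypothetical values for each vertex of the tetrahedron.
SUBJECTS = ["college students", "older adults"]
MATERIALS = ["word lists", "pictures"]
ENCODING = ["rhyme judgment", "meaning judgment"]
RETRIEVAL = ["rhyme-cued recall", "free recall"]


def design_space():
    """Enumerate every combination of the four components."""
    return [Experiment(*combo)
            for combo in product(SUBJECTS, MATERIALS, ENCODING, RETRIEVAL)]


def untested_neighbors(tested, space):
    """Return setups that differ from `tested` on exactly one component."""
    return [e for e in space
            if sum(a != b for a, b in zip(e, tested)) == 1]


space = design_space()
finding = Experiment("college students", "word lists",
                     "meaning judgment", "free recall")
print(len(space))                               # 16 (2 * 2 * 2 * 2)
print(len(untested_neighbors(finding, space)))  # 4, one per component
```

Even in this tiny space, a single result sits among four one-step perturbations it says nothing about, and real paradigms have far more than two values per vertex.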

To illustrate this, consider the emergence of the transfer-appropriate processing (TAP) framework (Morris, Bransford, & Franks, 1977; Roediger, Buckner, & McDermott, 1999). This occurred during the heyday of the levels of processing model, which posited that whether an item is remembered is primarily determined by the depth to which it is processed or the degree of elaboration it undergoes, so that basic perceptual analysis results in less recall than more complex conceptual or semantic encoding.

Against this, the TAP framework claims that whether a particular item is remembered depends not on depth of processing, but instead on whether there is a fit between the encoding and retrieval tasks. So while in some conditions phonological properties of verbal materials are poorly retained relative to their semantic properties, this relationship can be reversed by modifying these conditions.
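The contrast between the two frameworks can be stated as two toy prediction rules. The scoring functions and numbers below are arbitrary illustrations, not fitted to any data: the levels-of-processing rule looks only at encoding depth, while the transfer-appropriate rule looks only at whether encoding and test engage the same kind of processing.

```python
# Toy prediction rules; all numbers are arbitrary and not fitted to any data.
DEPTH = {"phonological": 1, "semantic": 2}  # hypothetical depth ranks


def levels_of_processing(encoding: str, test: str) -> float:
    """Predicted retention depends only on encoding depth; the test is ignored."""
    return 0.3 * DEPTH[encoding]


def transfer_appropriate(encoding: str, test: str) -> float:
    """Predicted retention depends only on the match between encoding and test."""
    return 0.8 if encoding == test else 0.4


def best_encoding(rule, test: str) -> str:
    """Which encoding task a rule predicts will do best on a given test."""
    return max(DEPTH, key=lambda enc: rule(enc, test))


for rule in (levels_of_processing, transfer_appropriate):
    for test in ("semantic", "phonological"):
        print(f"{rule.__name__}: {test} test -> {best_encoding(rule, test)}")
```

Under the first rule semantic encoding wins on every test; under the second the advantage reverses when the test is phonological, which is the kind of crossover that changing the retrieval conditions can produce.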

This nicely illustrates Jenkins’ point. Memory performance is enmeshed in a complex web of factors that need to be carefully teased apart and manipulated. Conceptual pluralism holds that the same is true of human categorization. The perspective taken on a category and how information about it is retrieved and processed are sensitive to a range of contextual parameters, and this gives rise to the diversity of experimental results that show the effects of structures such as prototypes, exemplars, causal models, ideals and category norms, and so on.

An upshot of contextualism is that laws of categorization and memory that hold with complete generality may be thin on the ground (see Roediger, 2008, where this is explored in depth). And this is the eliminativist’s cue to pounce.

Standard eliminativist arguments run as follows: a class of things forms a kind (or a natural kind) when there is a sufficiently large and interesting body of inductively confirmable generalizations about its members. But there is no such body of generalizations covering all concepts whatsoever, irrespective of their form. So concepts are not a kind, and they will have no place in our best psychological science (Machery, 2009).

Versions of this argument often appeal to specific notions of natural kinds such as Richard Boyd’s homeostatic property cluster theory—Griffiths (1997) seems to be the point of origin for this maneuver—but these substitutions don’t affect its basic logic. So why not embrace eliminativism and split concepts into subkinds?

For one thing, I’ve increasingly come to doubt whether the notion of a natural kind deployed here is actually that useful in understanding scientific theories, models, and practices, for reasons that Ian Hacking (2007) lucidly explains. I’m especially suspicious of the idea that the sciences only deal in natural kinds, or that their vocabulary ought to be purged of terms that are not kind-referring. This wildly strong claim has often been stipulated but never, as far as I can tell, shown to hold in any detail with respect to the practices of any specific science.

What does seem correct is that there are what Hacking, following Nelson Goodman, calls “relevant kinds”—classifications that hold greater or lesser value relative to some established ends and practices within a field. My own notion of functional kinds is meant to be a way of spelling out one way in which a category can be relevant, namely by playing a role in a number of well-confirmed models. So the question is whether the conceptual/nonconceptual distinction is a relevant one in psychology.

Suppose we followed the eliminativist and agreed to keep talk of representations such as prototypes, causal models, and the rest, but declined to use any general term grouping them together by their common function in higher cognition. A few distinctions that we might reasonably want to make immediately become inaccessible.

First, it seems contingent that humans have these particular representations in their higher cognitive toolkit. We might have (or develop) others, and nonhuman cognizers might have their own proprietary ways of representing categories. The list of ways that creatures might conceptualize the world seems to be quite open-ended. It’s a familiar point that if animals have concepts, they certainly need not resemble human concepts in their form and content. For that matter, it’s perfectly coherent that there could be nonhuman creatures that reason and represent the world using an utterly foreign sort of inner code. Without reference to their common functional role in higher cognition, however, we lack an architectural framework for even stating the similarities and differences between human and nonhuman concepts.

The same taxonomic problems arise within human cognition itself. Prototypes and exemplars have been invoked to explain both visual object recognition and categorization in higher cognition. That is, they play roles both in perceptual processing and in conceptual thought. Reference only to types of representations cannot capture this functional distinction. Adding the qualifier “perceptual” or “conceptual” would only reintroduce the distinction, and would also invite more general questions about what separates the representations labeled conceptual from those labeled nonconceptual.

This seems more than sufficient to establish relevance. Of course, a contextualist theory of concepts also has its own explanatory burden to discharge. It needs to give an account of why in one sort of context this particular kind of process is invoked, and why variations in parameters of the context promote the use of alternate modes of processing. If these variations are systematic, there must be some cognitive apparatus that pairs circumstances with types of representations for the purposes of certain higher cognitive tasks. The existence of this web of contextually parameterized generalizations is itself a fact that stands in need of explanation.

What this means is that a pluralist, contextualist theory of concepts takes as its subject matter the properties of the architecture of higher cognition that enable it to select and deploy information for a particular kind of processing given the right inner and outer circumstances. Such a theory aims to capture the adaptive structure of the conceptual system. In my next post, I’ll sketch some proposals about how to understand this structure.

References

Griffiths, P. E. (1997). What Emotions Really Are. Chicago, IL: University of Chicago Press.

Hacking, I. (2007). Natural kinds: Rosy dawn, scholastic twilight. Royal Institute of Philosophy Supplement, 203–239.

Jenkins, J. (1979). Four points to remember: A tetrahedral model of memory experiments. In L. S. Cermak & F. I. M. Craik (Eds.), Levels of Processing in Human Memory (pp. 429–446). Hillsdale, NJ: Erlbaum.

Machery, E. (2009). Doing Without Concepts. Oxford, UK: Oxford University Press.

Morris, C. D., Bransford, J. D., & Franks, J. J. (1977). Levels of processing versus transfer appropriate processing. Journal of Verbal Learning and Verbal Behavior, 16(5), 519–533.

Roediger, H. L. (2008). Relativity of remembering: Why the laws of memory vanished. Annual Review of Psychology, 59, 225–254.

Roediger, H. L., Buckner, R. L., & McDermott, K. B. (1999). Components of processing. In J. K. Foster & M. Jelicic (Eds.), Memory: Systems, Process or Function? (pp. 31–65). Oxford, UK: Oxford University Press.

All of these are genuinely exciting ideas. But how about eliminativism as a prudential ideal? Rather than seeking to conserve concepts (even the concept of concepts), we need to recall the radically heuristic nature of our attempts to understand our behaviour, and how psychology, in particular, can only interact with the complexities of the brain via modes that almost entirely neglect what we now know to be actually going on.

In ecological rationalist terms, it seems pretty clear that what Jenkins is describing is the heuristic nature of ‘psychological functions,’ how they solve problems given the information structure belonging to some problem ecology. The so-called ‘gaze heuristic’ is my favourite example. As it turns out, professional fielders catch fly balls not so much by unconsciously calculating velocity, trajectory, and intervening factors but by fixing the ball in their visual field and simply running towards it. Call this a ‘lock into’ strategy. Rather than solve the system from afar via brute calculation, they solve it by making themselves part and parcel of that system: lock onto the ball, and let it guide you to where you need to be. Jenkins, it seems to me, is describing a more complicated version of this ‘lock into’ strategy, the way in which psychologists are able to solve narrowly defined, heavily regimented (‘experimental’) problem-ecologies, short of cracking open the skull and making Craverian ‘mechanism sketches.’

As Gigerenzer and the Adaptive Behaviour and Cognition Group have shown, the heuristic nature of a solution doesn’t so much count against its efficacy as against the scope of its problem ecology. The more information you neglect, the more you need to lock into the right information the right way, and the more ecologically constrained your solutions tend to become. So instead of writing, “memory performance is enmeshed in a complex web of factors that need to be carefully teased apart and manipulated,” you might say, “*theorization* of memory performance is enmeshed, etc…” Experimental problem ecologies are problem ecologies where the ‘lock into’ information is carefully choreographed, sometimes so much so that the solving theory seems entirely dependent on the artifice of the lab. The reason we so consistently overlook this is simply that the information neglected is *genuinely neglected*: we have no more sense of using parochial means to solve parochial problems than we do in any other cognitive endeavour. It all feels so ‘universal’ in metacognitive retrospect. And since the language we use is the same language we use to solve ‘pick out’ problems, we assume we are picking out something that actually exists, a ‘mental function,’ as opposed to letting the ball guide our language to an intellectual catch: publication, clinical success, a laugh at Friday afternoon lectures, what have you.

And perhaps adopting a more full-blooded eliminativism becomes a far more attractive option, accordingly. Psychology is in the business of inking numbers on the clock face, not understanding what makes us tick.

One point here that I’d strongly agree with is the dependence of most of these phenomena on specific, highly contrived experimental scenarios. This point, too, is one that Hacking has emphasized, and Fred Adams and I make quite a lot out of it in Chapter 1 of our forthcoming book.

It’s equally true that we need to be careful about moving directly from context-sensitive task performance to strong conclusions about the underlying cognitive architecture. We can’t read the structure of systems or processes directly off of tasks, so these inferences need to be handled with care.

But I’m less inclined to the overall eliminativist (or instrumentalist) conclusion. Revealing the dependence of experimental effects on context shouldn’t lead us to think that they are not produced by genuine cognitive processes, or that psychologists should sacrifice any further explanatory goals and content themselves with producing a catalog or index of phenomenal variations.

Actually I think this highlights one problem with eliminativist arguments in general: they tend to act as anti-heuristics, cutting off or pre-empting further lines of investigation and directing our studies elsewhere. But given the painfully limited state of our theorizing at the moment, we should be extremely conservative about which inquiries we prune and which we allow to flourish.

For me the ‘grand’ eliminativism falls out of looking at our language as another biological means of moving as much nature with as few calories as possible. The ‘prudential’ eliminativism stems from looking at the way Machery’s book, for instance, shakes the tree. It seems pretty clear to everyone now that our tools are somehow part of the problem: a prudential eliminativism opens up the field to possible reconceptualizations, which, as you say, will reorganize experimental resources. But since there’s no way to assess the fecundity of any given line of research in advance, it’s impossible to say that such a reorganization might not prove fruitful. And if conceptual disarray cuts against the effectiveness of research, and conservatism amounts to conserving conceptual disarray…

In a sense, prudential eliminativism simply comes down to putting everything on the table for elimination, hoping this might allow the research to drive more of the process.

Is it just me? Or is it a no-brainer that the accumulation of ever more data will inevitably reveal the granularity of our existing understanding, as well as the need to develop more nuanced vocabularies? Maybe it’s because I’m a science fiction author, used to seeing everything as ‘pre-obsolete’!

In a broad sense, everything is always “on the table” as a potential target for revision. But when it comes to eliminativist arguments, if you want to take something off the table, you have to put something else on it.

Call this the “Take one, Leave one” rule. If someone suggests eliminating a term or category, that proposal should always be accompanied by a substantive suggestion about how to reorganize the remaining theoretical terrain, where “substantive” means going beyond the act of elimination itself. It would counsel against making the quickest sorts of standalone kind-splitting arguments, but would welcome more nuanced reform proposals. Fair play makes good science.

I can see why this sounds convincing, but only on a ‘local view,’ I guess. As an SF novelist, my interest in all this turns on trying to find ways to characterize the ‘posthuman,’ to try to figure out what kinds of ‘psychological constraints’ we might expect to apply to our descendants. To even begin to approach this question, you have to ask what psychological constraints consist in now. The first thing that seems apparent from this perspective is the sheer granularity of our folk apparatus, the way it pretty clearly seems to be designed to work around neglect, to do without the vast amounts of information our ancestors had no access to. Folk psychology (FP) has to be radically heuristic, which is to say, a thoroughgoing ‘lock into’ cognitive system. There’s just no other way I can see it working. This means that empirical psychological exaptations of the FP toolbox are bound to be ‘lock into’ as well (or ‘instrumental,’ if you wish, though I think importing ‘use’ into the picture generates problems).

So far, I think you agree at least with the spirit of this characterization. The difference between us (and I think it’s important to get a handle on, for my sake at least!) turns on what I thought was Craver and Piccinini’s most telling observation in their (failed) attempt to reduce functional analyses to mechanism sketches: the notion that *functional analyses are now deployed in an information-rich environment.* Before the rise of cognitive neuroscience, these models seemed obviously to ‘pick out’ some kind of ‘mental process.’ They enjoyed what I like to call the ‘only game in town effect.’ Now, especially with the Brain Project on the horizon, they are no longer the only game in town, and everyone is wringing their hands, asking what they could possibly be.

My answer is, ‘A complicated version of the gaze heuristic.’ There’s no ‘attribution,’ certainly no ‘intentional stance,’ only heuristic systems cued to various environmental triggers allowing for ‘lock into’ processing, which is to say, ways to solve complicated systems requiring almost no information from those systems to do so. Taking the ‘posthuman view,’ then, looking at the problem on a continuum of accumulating quantities and dimensions of neurocognitive information (and ability), lets you make some basic predictions that might otherwise seem precipitous (as they do to you now). One is that the parochialism of FP exaptations, their inapplicability outside very narrow problem-solving contexts, is going to become more and more evident. Another is that as this happens, it seems safe to suppose that more nuanced, more broadly applicable, intertheoretically consilient, and non-intentional tools will be knapped.

What we want, it seems to me, is a way to move beyond the limitations of our native toolkit. If eliminativism is our future, why on earth wouldn’t we embrace it now, direct our energy toward working around our evolutionary ‘workarounds’?

Thanks for these interesting posts! I’m generally in agreement with the pluralistic framework you propose, but come at it from a slightly different angle. Specifically, it seems to me that some of the mechanisms for sorting and storing information about properties are similar or identical to those used for individuals. When it comes to singular representations, there is also a plurality of ways of storing information about a particular object, but few would accept that our representations of individuals don’t form a common psychological kind. So my resistance to eliminativism stems from the thought that the plurality of representational capacities we might use to capture information about a category will, except in some special cases, be tied together by mechanisms for sustaining reference. Others have made related points about the similarity between singular reference and the reference of natural kind concepts, but my suspicion is that the similarities hold much more broadly, and that recent efforts by people like Recanati to advance a mental files framework (or Dickie’s luck-elimination framework) for singular reference can be extended to concepts more generally. Do you have any thoughts about this?

Great question. I’ve also made similar use of the notion of a mental file in a 2009 PPR paper on atomism and pluralism. I didn’t specifically note the link with work on individual concepts and the mechanisms that sustain singular reference, but it was hovering in the background of what I was thinking at the time.

Some sort of mental file architecture is needed here in any case, since there needs to be a way to mark all of these co-referring concepts as ones that track the same substance or property or individual. This is a point that comes out of both Recanati’s work and Millikan’s (though I don’t think that it implies that the majority of our nominal concepts are substance concepts). My own emphasis is more on the internal processes of keeping information integrated and accessible (recognizing samenesses and differences in thought) rather than on sustaining external links of reference.
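A toy data structure can make the filing idea slightly more concrete. It is a hypothetical sketch only: the names `Representation`, `MentalFile`, and `FileSystem`, and the example contents, are illustrative and not taken from Recanati, Millikan, or any published model. Each file binds several co-referring representations of different kinds, and sameness of category is read off shared file membership rather than off the content of the representations.

```python
from dataclasses import dataclass, field


@dataclass(eq=False)  # identity semantics: each representation is a distinct token
class Representation:
    kind: str     # illustrative labels: "prototype", "exemplars", "causal model"
    content: str


@dataclass
class MentalFile:
    """Hypothetical file binding co-referring representations under one label."""
    label: str
    representations: list = field(default_factory=list)


class FileSystem:
    def __init__(self) -> None:
        self.files = {}   # label -> MentalFile
        self.owner = {}   # Representation -> label of the file that holds it

    def store(self, label: str, rep: Representation) -> None:
        f = self.files.setdefault(label, MentalFile(label))
        f.representations.append(rep)
        self.owner[rep] = label

    def same_category(self, a: Representation, b: Representation) -> bool:
        """Treat two representations as co-referring iff they share a file."""
        la, lb = self.owner.get(a), self.owner.get(b)
        return la is not None and la == lb

    def kinds(self, label: str) -> set:
        return {r.kind for r in self.files[label].representations}


fs = FileSystem()
proto = Representation("prototype", "typical dog: four legs, barks, furry")
ex = Representation("exemplars", "Rex, Fido, the neighbour's spaniel")
causal = Representation("causal model", "hidden canine nature causes dog traits")
cat_proto = Representation("prototype", "typical cat: whiskers, meows")
for r in (proto, ex, causal):
    fs.store("DOG", r)
fs.store("CAT", cat_proto)
print(fs.same_category(proto, causal))     # True
print(fs.same_category(proto, cat_proto))  # False
print(sorted(fs.kinds("DOG")))             # ['causal model', 'exemplars', 'prototype']
```

The design choice worth noting is that the two prototypes are the same kind of representation yet sit in different files, while three quite different kinds share one file; integration is carried by the filing structure, not by representational format.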

As a side note, I’m hoping to write something up soon that tries to ground the mental file metaphor in empirical data about the organization of long-term semantic memory. (It isn’t a coincidence that contemporary work on categorization evolved out of earlier debates concerning semantic memory.) In particular, I think that the literature on category-specific deficits is a productive starting point for thinking about how this filing system might work. Philosophical appeals to mental files tend to be sketchy at best when it comes to discussing such architectural issues, but doing so is crucial for moving the construct beyond the realm of metaphor.